
The Metaverse, beyond fantasy

$ynth3t1( w0rld, r3al 3(0n0my

[Synthetic world, real economy]

Executive Summary


"We are not on our screens; we are playing hide-and-seek with our friends in Roblox."

— Maya and Iseline, 12 years old, March 2020, first lockdown

For many, the term “Metaverse” first entered their consciousness when Facebook changed its name to Meta in late 2021. At the time, many people assumed it was merely a passing trend, focused on gamers and younger audiences, with little or no relevance to them or their businesses. However, key players and consultancies have since been falling over themselves to declare its huge potential, outdoing each other with the scale of their market forecasts. In this report we have sought to provide a realistic picture for businesses, focusing in particular on the technologies that are necessary to realize the Metaverse.

It is important to recognize that the Metaverse is not a new concept. The reason it is high on the agenda today is that we are seeing a rapid acceleration of development activity and usage adoption. This acceleration is driven by the convergence of three industries: gaming; collaboration and productivity tools; and social media and networks. The acceleration is also fueled by the confluence of key trends in user behaviors, software, and hardware development.

Businesses should not underestimate the importance and potential of the Metaverse. Put simply, it promises to be the future version of the Internet, powered with new properties that will open up new usages and business models — in a similar way to how the smartphone transformed the Web.

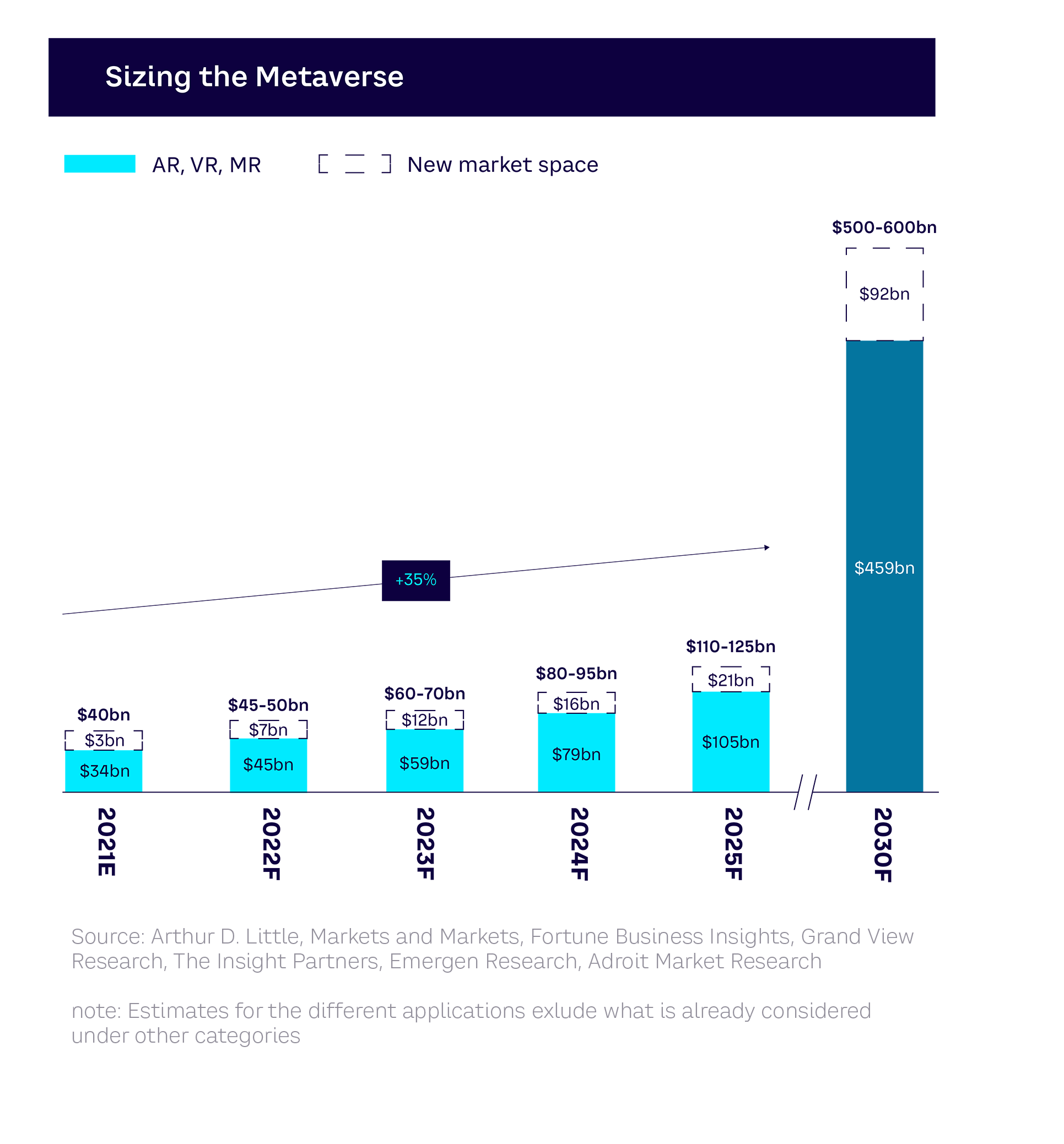

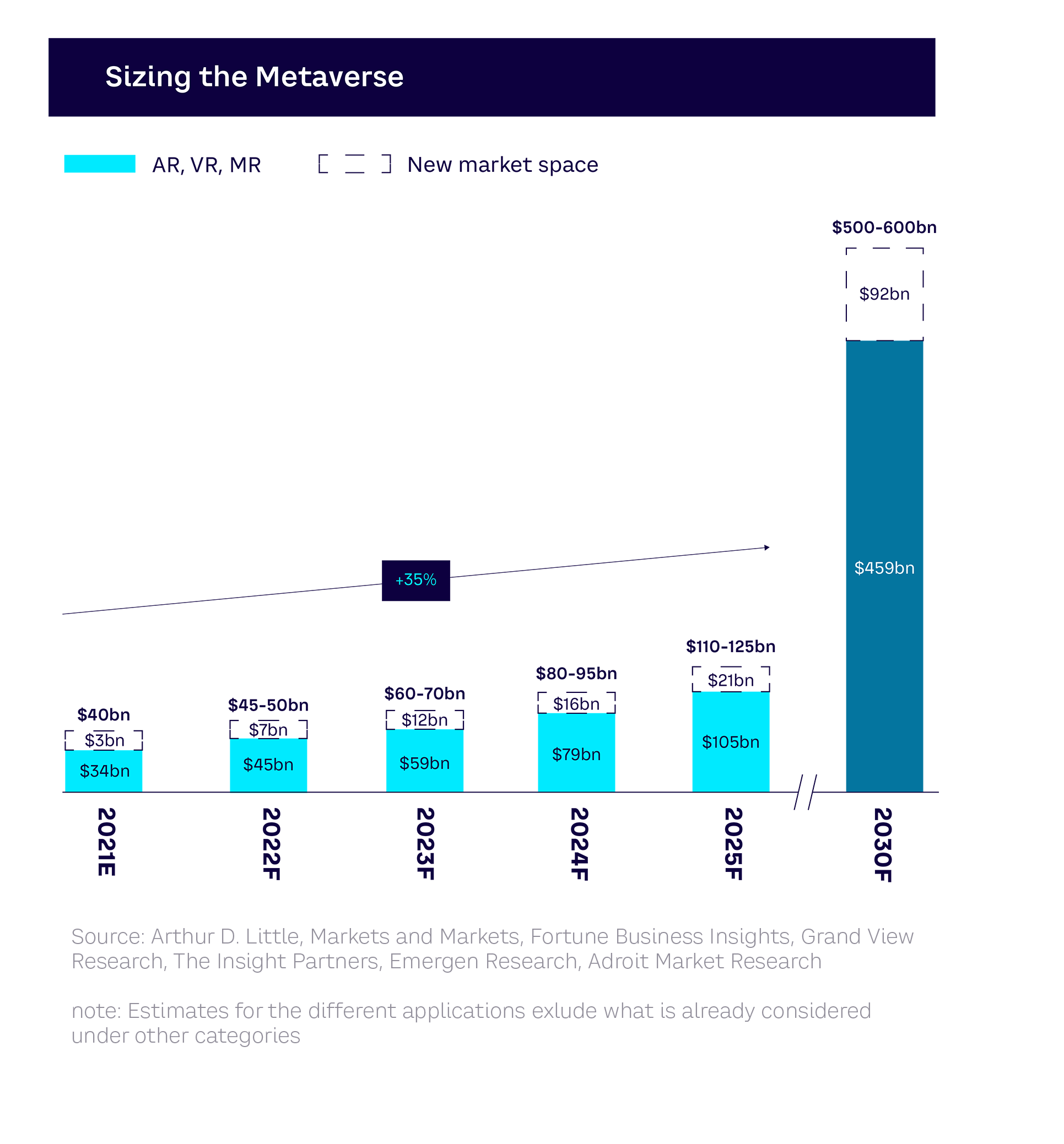

Forecasting the size of the market is difficult. If key enabling technologies are included, such as artificial intelligence (AI), Internet of Things (IoT), and blockchain, as well as the required digital infrastructure development, then the market could easily reach several trillion euros by 2030. However, we advise caution, as some of this market represents substitution rather than genuinely new market space. Our more conservative view suggests an incremental market, excluding infrastructure, of perhaps €500 billion by 2030, with a 30%-40% growth. In any case, however you define it, the Metaverse market is enormous and very dynamic.

To help understand the Metaverse and its current development status, we developed a six-layer architectural framework. Using this analysis, we concluded that, in contrast to what many observers are saying, the underlying technology to enable the Metaverse as the complete “future version of the Internet” won’t be fully available for around a decade. This is something that businesses need to be aware of.

Instead of a single, unified Metaverse, businesses today face a world of unconnected proto-metaverses. That said, there are still huge opportunities. Despite the remaining technological challenges, businesses need to take steps now to understand the current market and position themselves for the future.

In summary, we believe that, among all the trends and factors currently shaping the Metaverse, three are especially critical because they combine high potential impact with high uncertainty. These three critical factors are:

- Immersivity. The development of new augmented reality/mixed reality (AR/MR) technologies that effectively overcome current technical obstacles would be a strong accelerator of new usages in the coming years. In the same way that smartphones made the digital economy shift from computers to mobiles, we believe that user-acceptable AR/MR glasses would drive a similar shift from screen to Metaverse.

- Interoperability. Interoperability is essential to provide a truly seamless experience to users and to allow them to share resources, irrespective of their access platform. However, due to diverging interests between vendors, users, and other players in the value chain, there is no guarantee that this will be achieved.

- Abundance. In the physical world, scarcity drives the value of assets in a market economy. In the traditional digital economy, since a digital file can be duplicated at no cost, scarcity was reintroduced artificially through systems such as digital rights management. In a virtual world with blockchain and non-fungible tokens (NFTs), a new economic paradigm of “abundance” may appear, implying a more fundamental value shift from physical assets to experience and, perhaps, status. The extent to which this will happen, and its implications for business, are uncertain.

Preface by Primavera De Filippi

Having discovered the Internet at a very young age, I spent most of my childhood exploring the new opportunities of this virtual environment: socializing with people all over the world, traveling through the blue trails of hyperlinks, and making software to do things not previously possible. Similarly, today I am fascinated by the potential of the Metaverse, a new virtual environment in which anything is possible: a place where you can be anyone you want to be, do anything you want to do, and go anywhere you want to go.

I am confident that the Metaverse will eventually become an ineluctable component of our everyday reality, whether through virtual reality (VR), augmented reality (AR), or extended reality (XR), and that it will change the way we live, work, and play in ways that we cannot even imagine. As an artist, I am excited about all the new opportunities of artistic production and creative expression that this new medium will engender. In a world where there is no sky, imagination is the only limit that can hold us back.

But what is the Metaverse, exactly? Very few people can give a precise answer to this question, and those who do might change their minds after reading this Report. Indeed, as this Report shows, the Metaverse as we envision it does not exist (yet). All we have are walled gardens: siloed virtual worlds competing with one another to become “the” Metaverse. But if the Internet has taught us anything, it’s that interoperability is crucial and that open permissionless innovation is key to any flourishing digital ecosystem.

All the commercial opportunities of the Metaverse have attracted the attention of many new businesses, eager to establish themselves in this new virtual landscape. Yet, the Metaverse can only be what we make of it. And as the short history of the Internet has shown, there will always be a battle between those who see the Metaverse as a new opportunity to build an open and participative society, and those who see it as a means to promote their own vested interests and economic profits.

As a legal scholar and digital activist, I am today committed to ensuring that the Metaverse, whatever direction it takes, will become a powerful tool for good that can help us build a better world, one where new communities can emerge and collaboration can thrive. I hope this Report will inspire you to push in the same direction.

Preamble

Over 20 years ago, without knowing it, I was contributing modestly to some of the technological bricks that underlie the Metaverse. I was finishing my studies as a telecom engineer and, in 2001, beginning my internship at the Australian National University (ANU), where I would explore the frontiers of VR, which already fascinated me at the time. A year later, I started my PhD in computational physics, still at the ANU. During a screening of the film The Matrix, I had the chance to meet the Australian philosopher David Chalmers, a specialist in the nature of reality and consciousness, who was giving a lecture entitled “The Matrix as Metaphysics.” Exciting!

Since that time, digital and synthetic worlds have never ceased to fascinate me. They fascinate me as technologies, and also as sources of disruption in terms of uses and business models. They fascinate me also as vectors of societal and anthropological transformation. And finally, they fascinate me as sources of dizzying ethical and philosophical questions.

In fact, some, like the philosopher Nick Bostrom[2] or the entrepreneur Elon Musk,[3] think that the world we live in, what we call reality, is in fact a simulation. In a nutshell, the argument goes like this: (1) if humans don’t become extinct and (2) if humans don’t decide against running so-called “ancestor simulations” (i.e., simulations of the emergence and evolution of life), then (3) there will come a point when humanity reaches enough technological maturity to run such simulations. In that case, there will be many more simulated worlds than real worlds. It follows that the probability that we are not in a simulation today is actually very small.
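
The counting step behind this conclusion can be made explicit. As a minimal sketch, assume (purely for illustration; the symbol $N$ and the equal-weighting assumption are not part of the argument as cited) that there is one real world and $N$ simulated worlds with comparable numbers of observers, and that nothing we observe favors one world over another. Then:

$$
P(\text{real}) = \frac{1}{N+1}, \qquad P(\text{simulated}) = \frac{N}{N+1}
$$

With $N = 999$ simulated worlds, for instance, $P(\text{real}) = 1/1000 = 0.1\%$. The larger $N$ becomes, the closer the probability of being in the real world gets to zero.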

But back to our “real world.” During the first COVID-19 lockdown in March 2020, when my twin daughters were playing hide-and-seek with their friends on Roblox — which I thought was just a teen video game — I realized that the vast majority of young people stuck at home because of the pandemic were reproducing uses of the physical world in synthetic worlds, and that this shift from real to synthetic would be anchored for life.

This awareness, supported by an initial analysis, led me to write an article for Harvard Business Review France in early 2021:[4] I felt that the Metaverse was going to become “the place to be.” In response to the article, we received many requests from our ecosystem to give talks or provide consulting on the topic. So, we decided to investigate the matter further to help you navigate through the technological fog and make the right strategic decisions today. This study aims to answer three questions:

- What is the Metaverse?

- How mature is the Metaverse?

- What are the business opportunities of the Metaverse?

Before immersing ourselves in the Metaverse, however, I would like to share an anagram I discovered for “Metaverse Flippant” (Creepy Metaverse):

platement pervasif/flatly pervasive

This may sound a little scary or pessimistic but, as always, anagrams move in mysterious ways. What do you think?

– Albert Meige, PhD

1

The Metaverse: The future version of the Internet

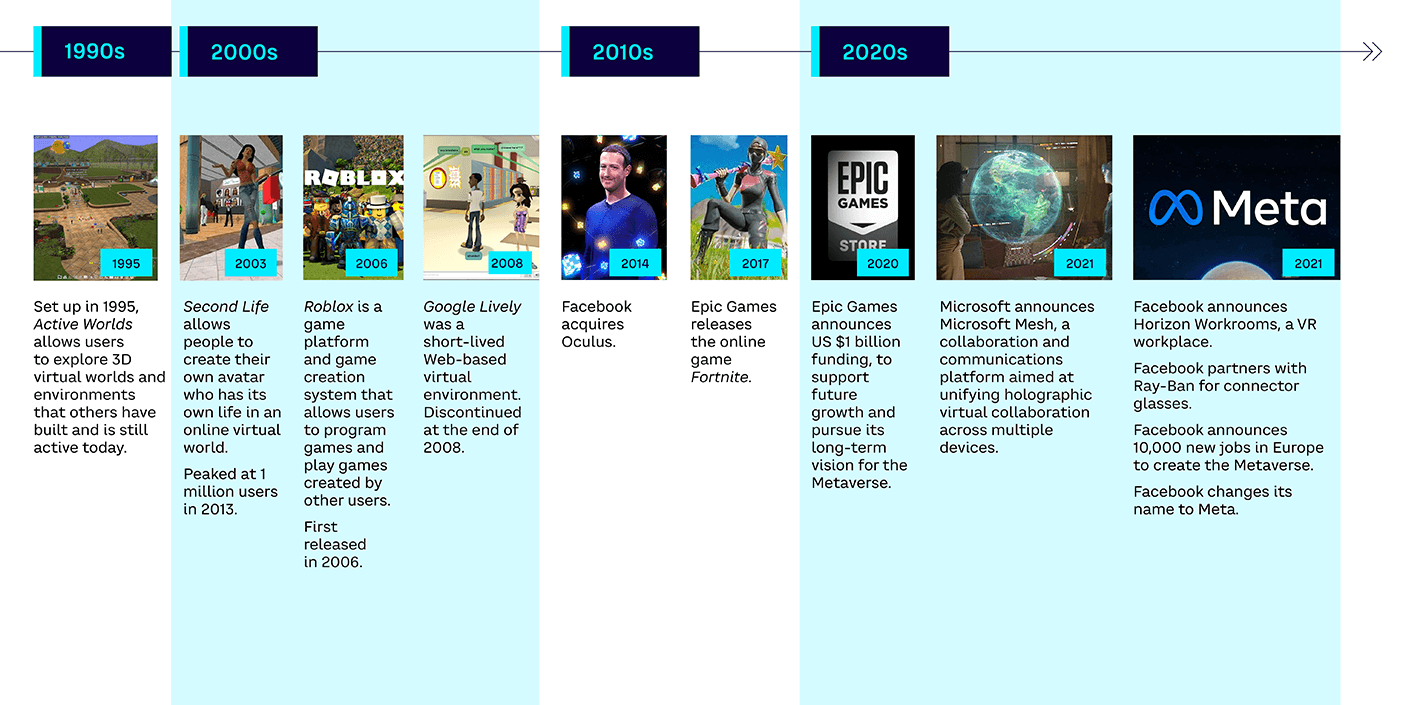

Despite what some would have you believe, the Metaverse is nothing new. The concept of synthetic or virtual worlds in which people connect has been around for at least 40 years in science fiction and 20 years in real life (see Figure 1). However, what is important is that there has been a strong acceleration of development activity around the concept over the last two or three years. This acceleration is due to two main reasons: convergence between three industries fighting for the same market, and a confluence of trends in users, software, and hardware coming together for the first time. Rather than a new concept, the Metaverse can be best considered as the future version of the Web, powered with new properties that will open up new usages and business models — in a similar way to how the smartphone transformed the Web.

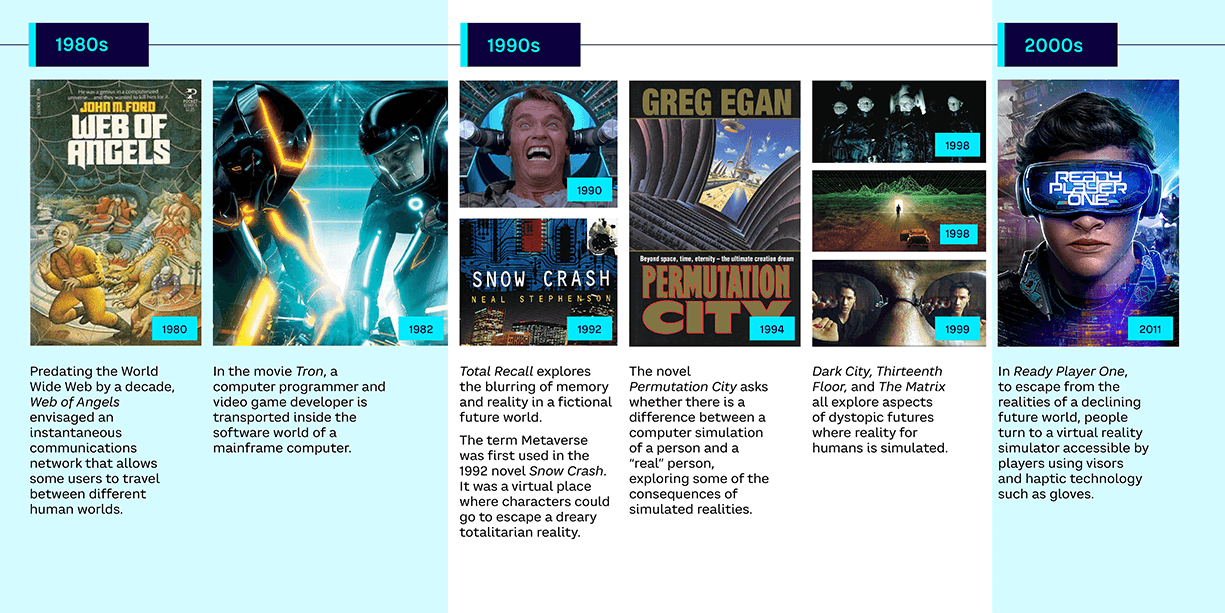

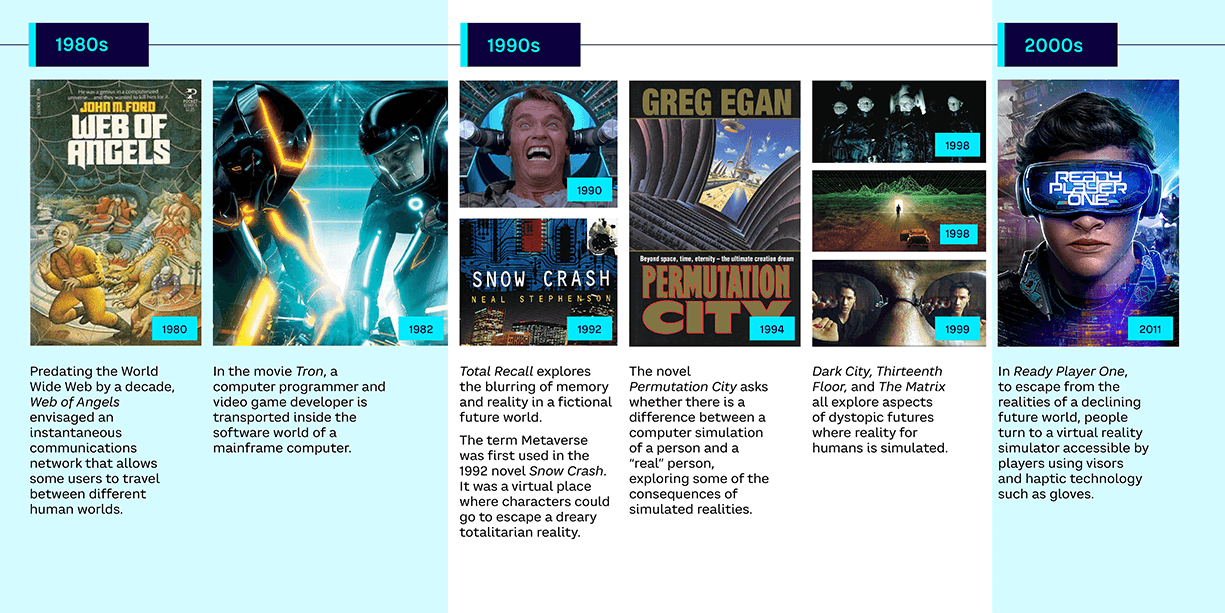

The concept of Metaverse has been around for 40 years

The Metaverse is not a new concept — it actually predates the Web itself. The idea of synthetic[5] or virtual worlds that people visit in order to interact with others dates back over 40 years in science fiction, becoming more mainstream thanks to films such as Tron and Total Recall in the 1980s and 1990s. The term itself was coined in Neal Stephenson’s 1992 novel Snow Crash, defining a virtual space where users could go to escape a dreary, totalitarian reality.

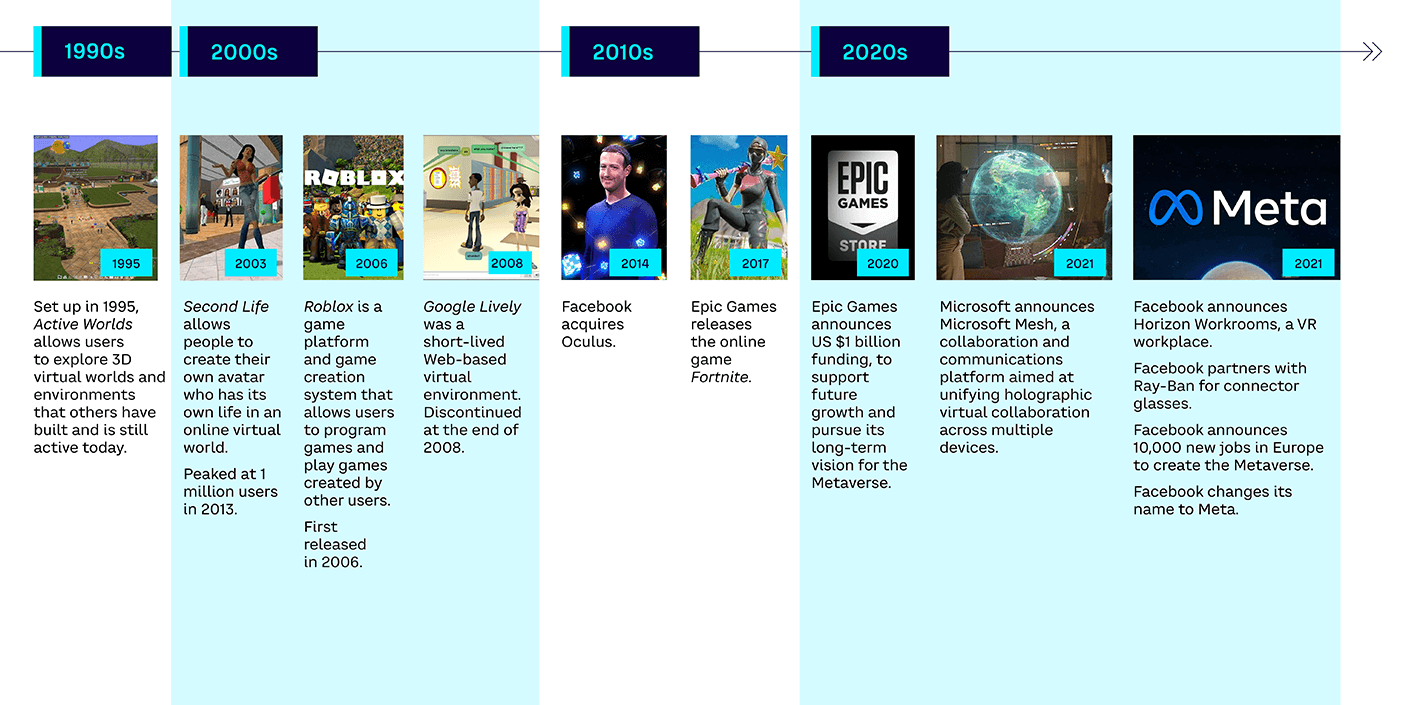

In real life, a Metaverse is not a new concept, either. As illustrated in the timeline in Figure 2, Active Worlds was created in 1995 and still exists today, allowing users to own worlds and universes, and develop custom three-dimensional (3D) content. Second Life followed in 2003, allowing players to create an avatar who lives another life using voice and text in a virtual world. The game generated substantial hype and high expectations, but usage peaked at fewer than one million users in 2013, and declined gradually until the 2020 pandemic, when there was a large spike in new registrations. More generally, there has been a very strong acceleration of activity over the last two to three years. This acceleration is visible from various points of view, such as the frequency of announcements, investments by venture capitalists, startup creations, and number of users.

Today, the Metaverse is best considered as the future of the Internet at the convergence of three industries

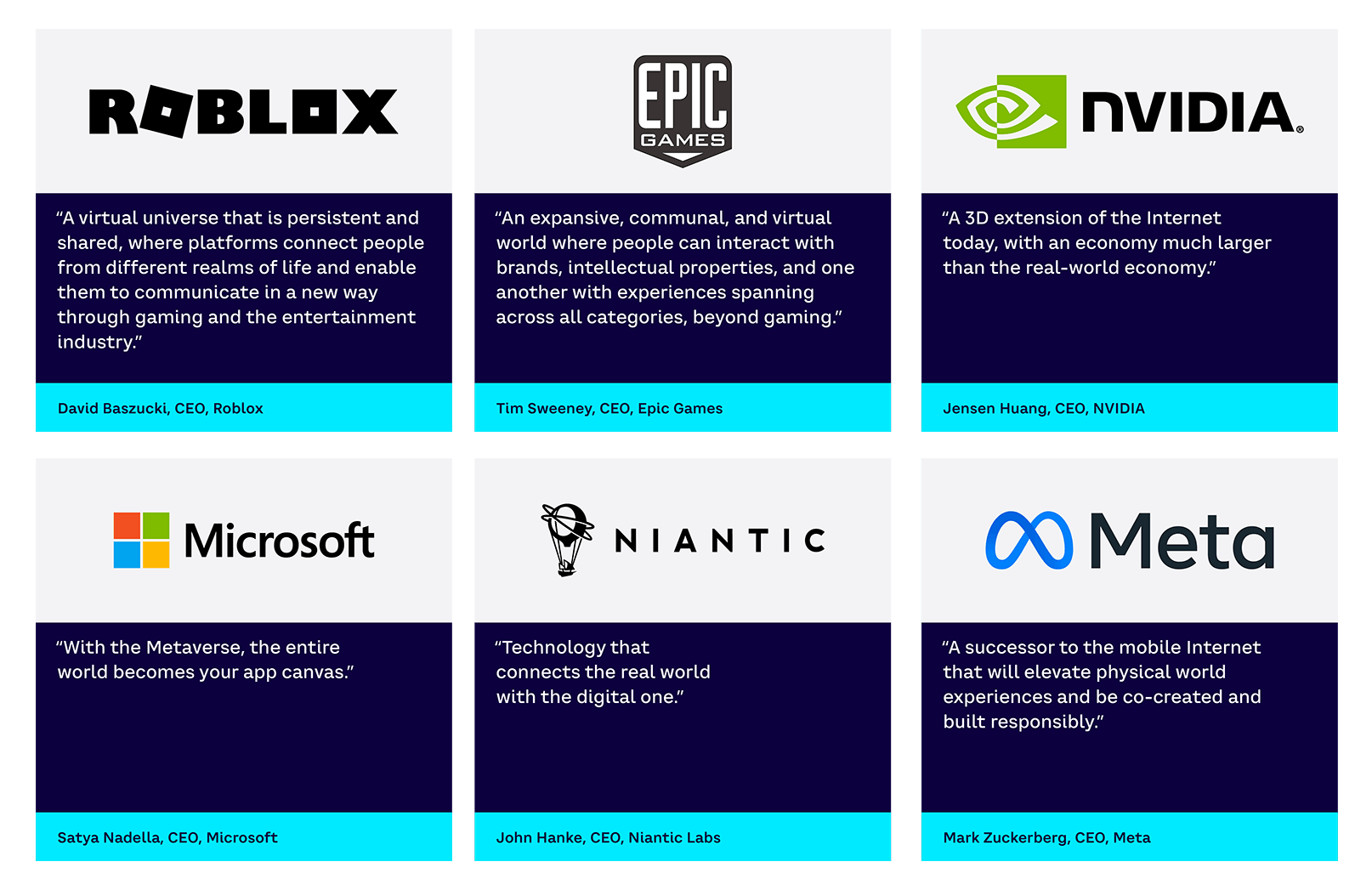

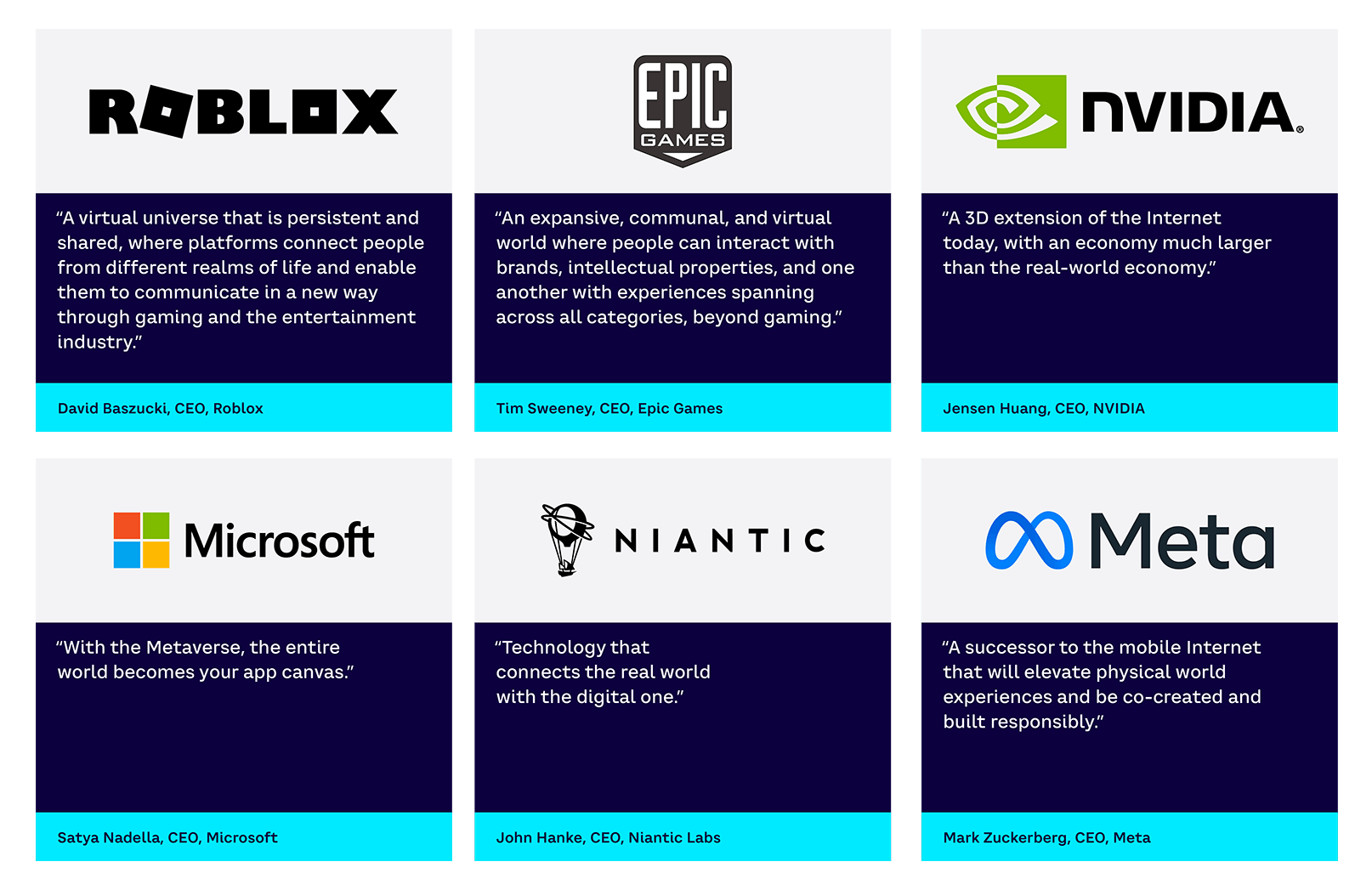

So, what is different about the Metaverse today and what does it mean for businesses? Why are major players such as Meta (formerly Facebook), Microsoft, NVIDIA, Roblox, Epic Games (the creator of Fortnite), and Niantic (Pokémon Go) all heavily pushing the concept? What we observe today is better viewed as the result of various usage and technological trends that the key players want to accelerate. The term “Metaverse” is a useful wrapper around these trends to facilitate the understanding of what will soon be enabled.

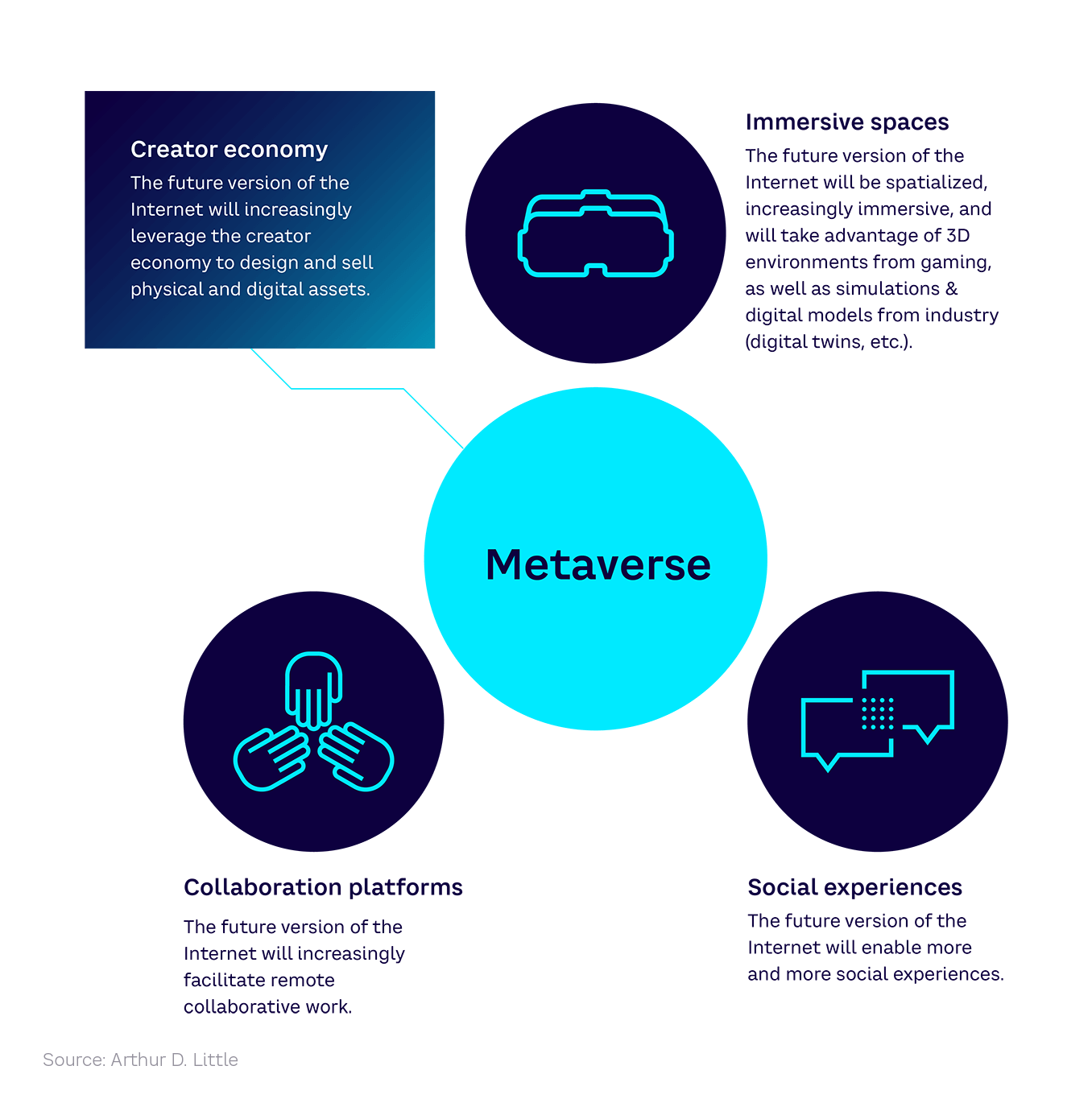

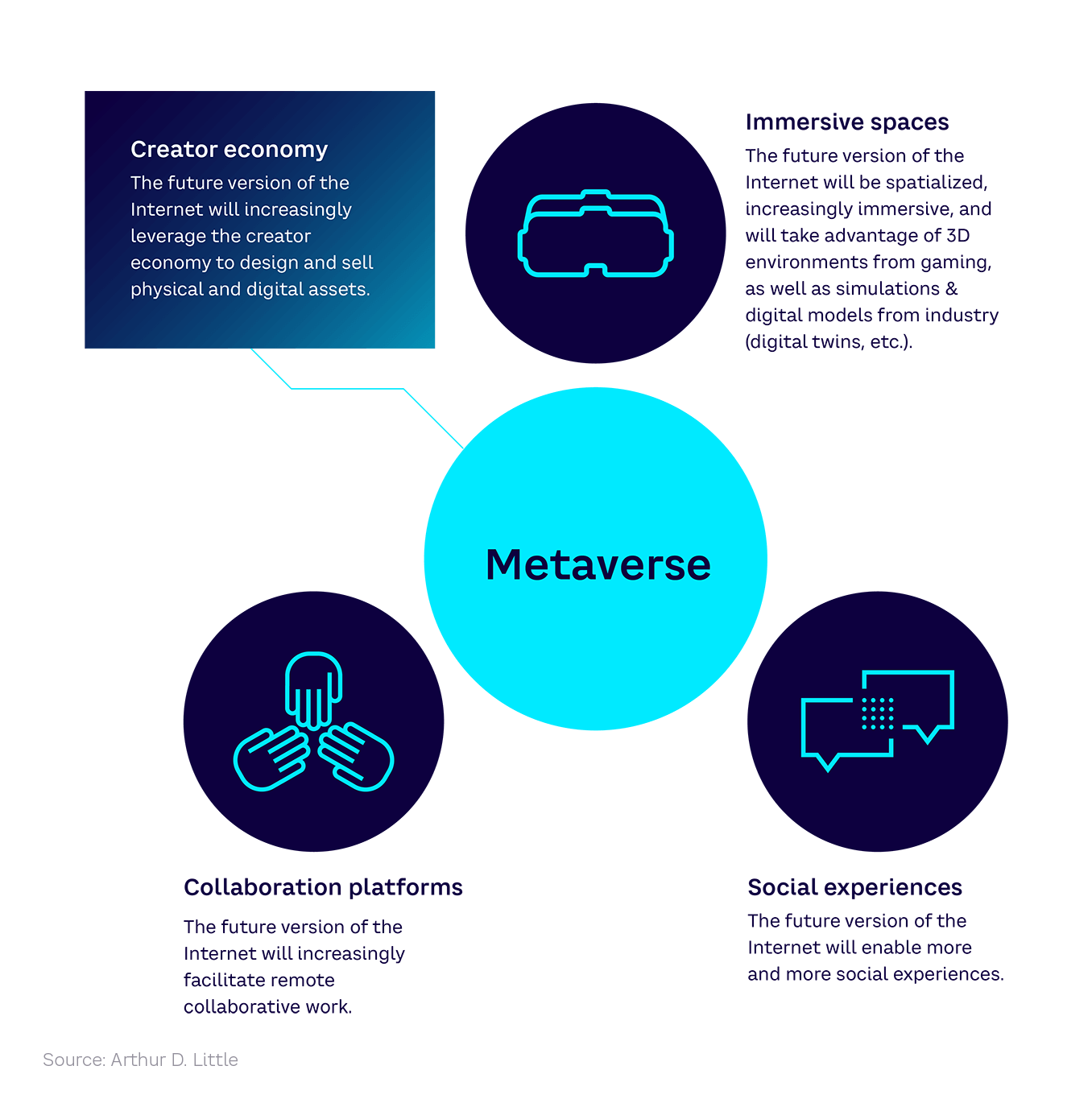

The Metaverse is the future version of the Internet, blending the frontiers between reality and virtuality, at the convergence of immersive spaces, collaboration platforms, social experiences, and leveraging the creator economy.

The Metaverse is generally described as a virtual world where people can interact, but as the words of various CEOs in the industry reveal (see Figure 3), leading players have their own slants and definitions of what it means. And while these definitions and visions converge to a large extent, there are a number of differences reflecting the players’ backgrounds and objectives.

It is therefore important to define precisely what we mean by the term before diving into our analysis. Taking a more holistic approach, we adopt the following definition (see Figure 4):

- “Future version of the Internet.” The Metaverse is not a collection of private platforms — it is a new evolution of the Internet, similar to what we saw with the advent of the smartphone.

- “Blending the frontiers between reality and virtuality.” What we call reality is, and increasingly will be, augmented by one or several layers of data, information, or representations. The real world becomes the screen on top of which digital layers are superimposed — think “augmented reality” to get a sense of what we mean.

- “At the convergence of immersive spaces, collaboration platforms, social experiences.” Three industries are converging and are fighting for the same market:

  - The gaming industry: ignored for decades by the rest of the world, it is now taking center stage due to the amazing technologies it has developed.

  - Collaboration platforms & tools: producing the technologies and applications that allow individuals and companies to collaborate, communicate, or work remotely.

  - Social networks & media: generating the technologies and applications that allow people to connect, socialize, and share experiences.

- “Leveraging the creator economy.” We have seen over the last two decades the explosion of the digital creator economy (platforms allowing creators to create and users to consume). The creator economy will take on another dimension in the Metaverse as the same principles will apply to both virtual and physical products.

Now let’s look in more detail at the convergence that is enabling the Metaverse.

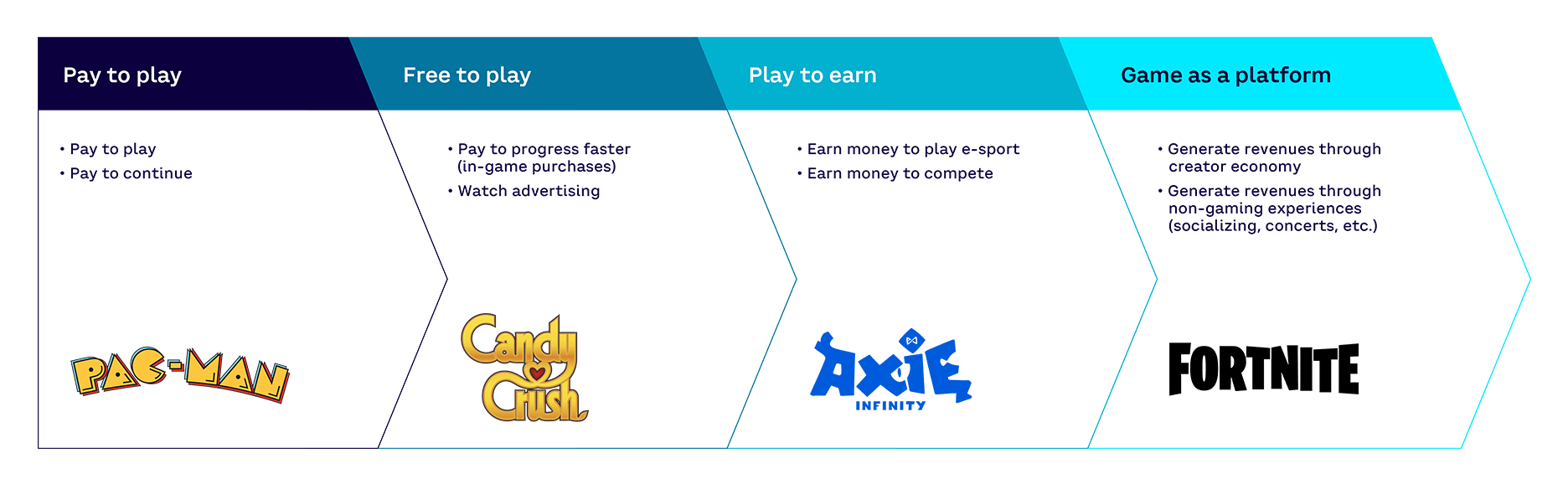

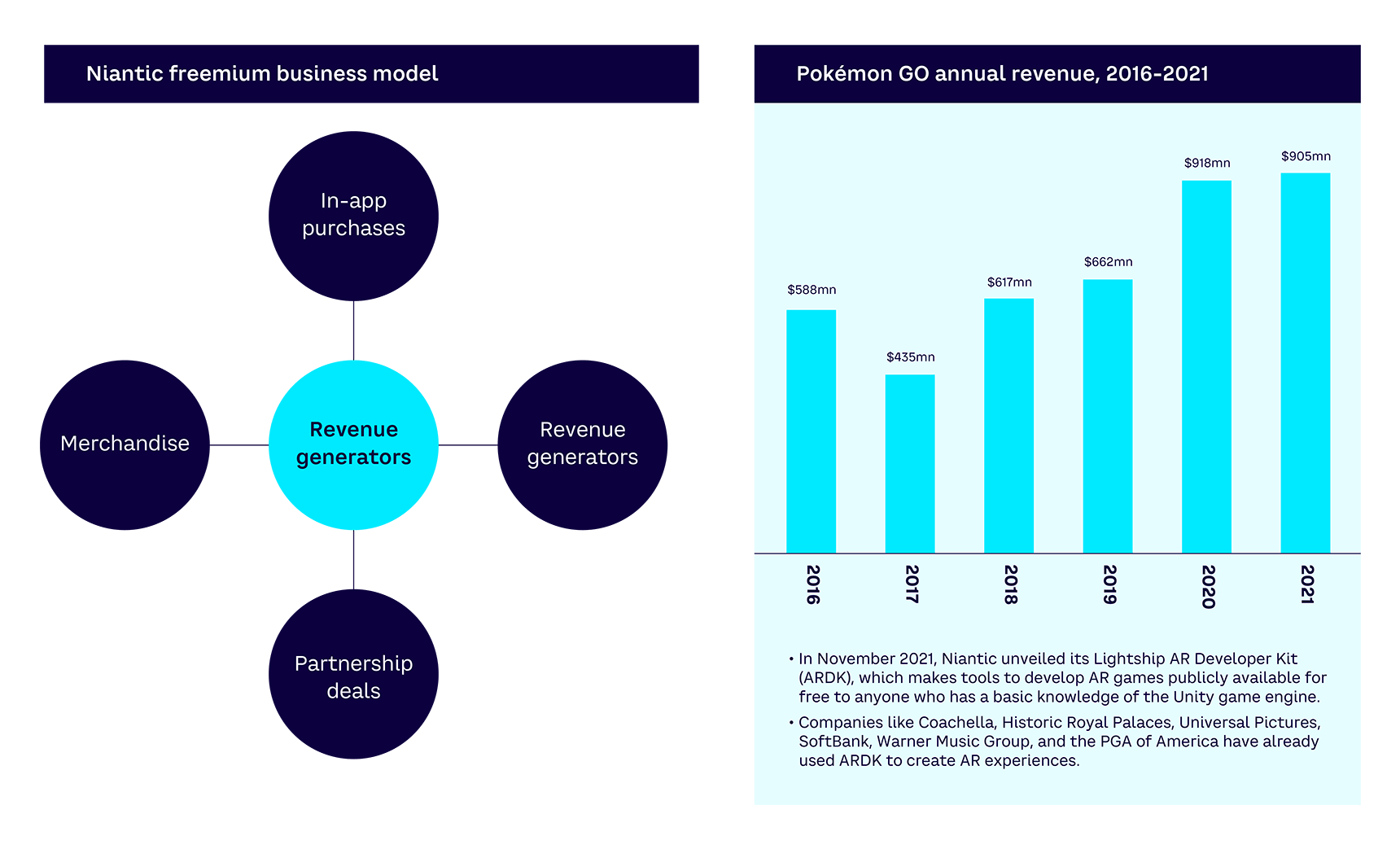

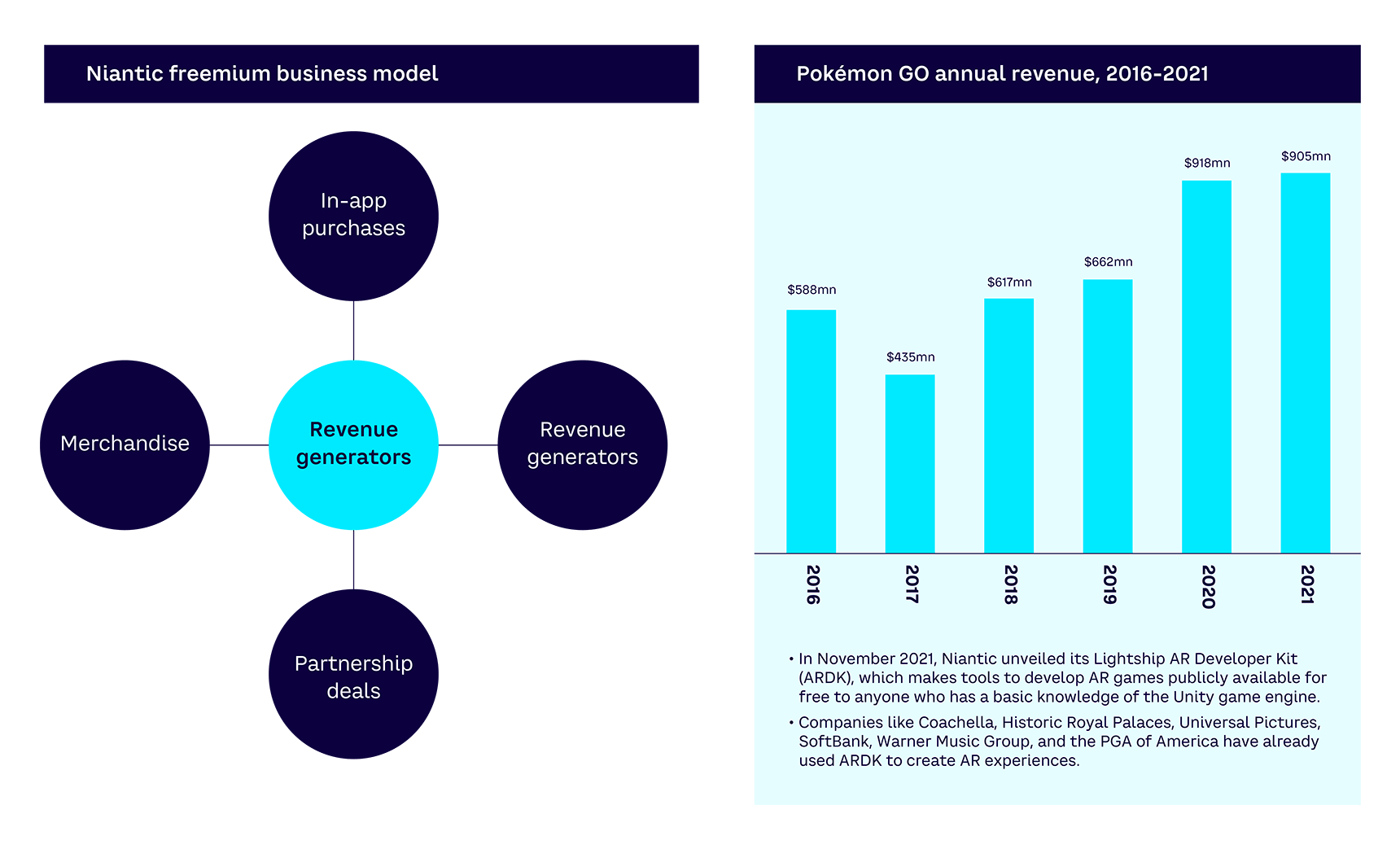

Gaming industry: More and more nongaming experiences are moving toward the social network & media and collaboration spaces

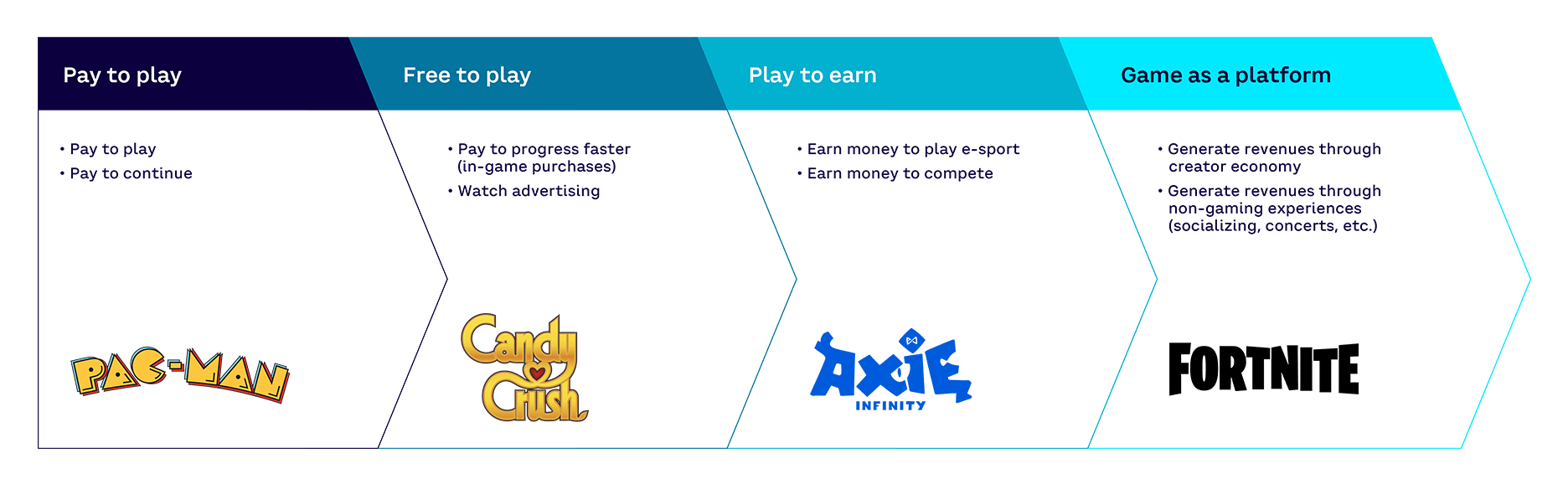

Over its relatively short life, the gaming industry has undergone a series of transformations (see Figure 5). It has moved from its initial “pay to play” model (with famous games such as Pac-Man) to encompass “free to play” models (i.e., freemium/ad-supported games such as Candy Crush), and “play to earn” models to enable players to actually earn money through e-sports competition. The most recent transformation of the gaming industry, as it becomes increasingly immersive, finds a growing portion of its revenues coming from nongaming experiences. These experiences include social events, music concerts, and e-commerce.

"Most industries have ignored the game culture and industry. This is changing. It’s an industry that’s becoming mainstream and relevant to all the others." — Morgan Bouchet, VP/Global Head of XR, spatial computing & Metaverses, ORANGE

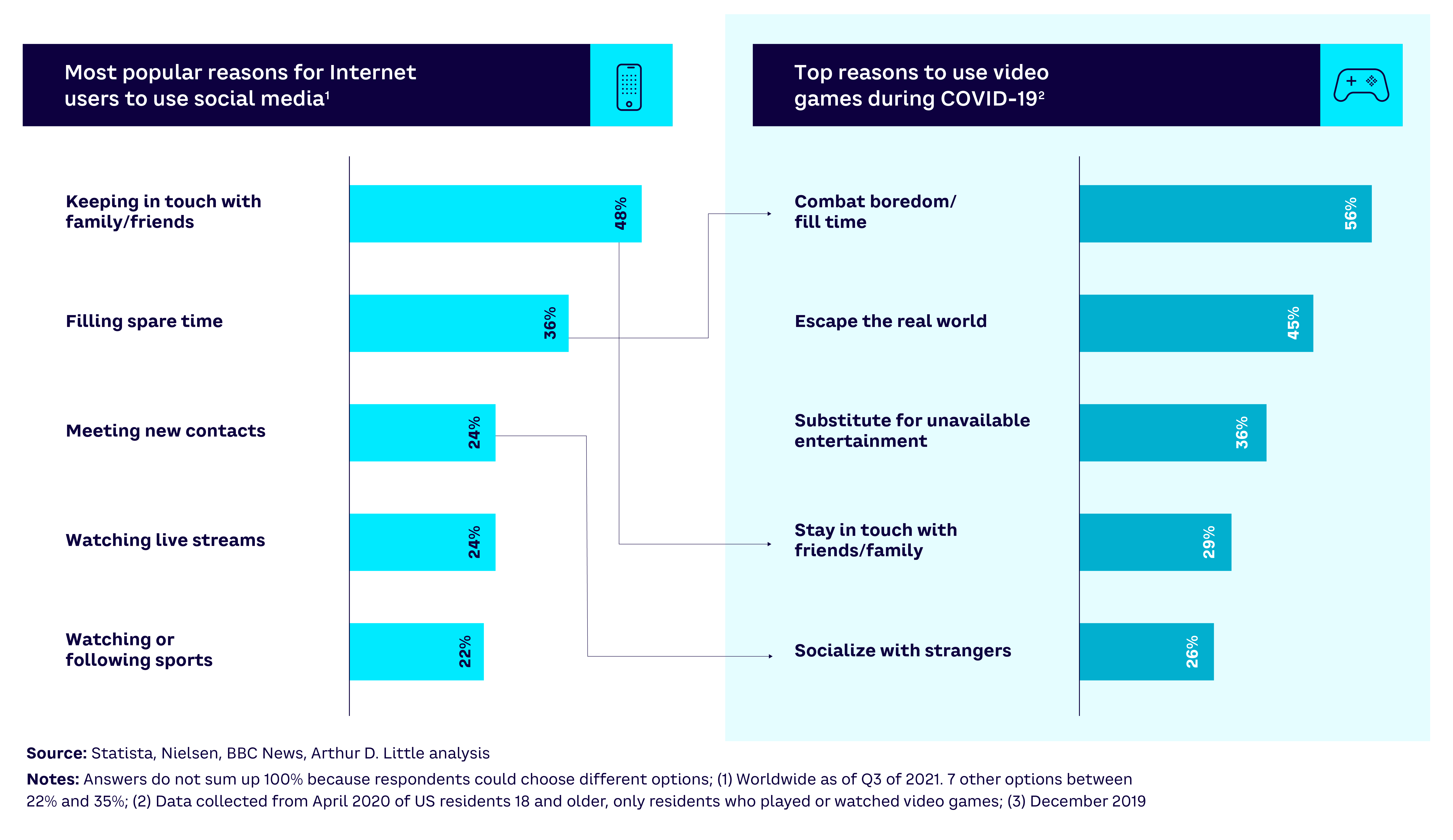

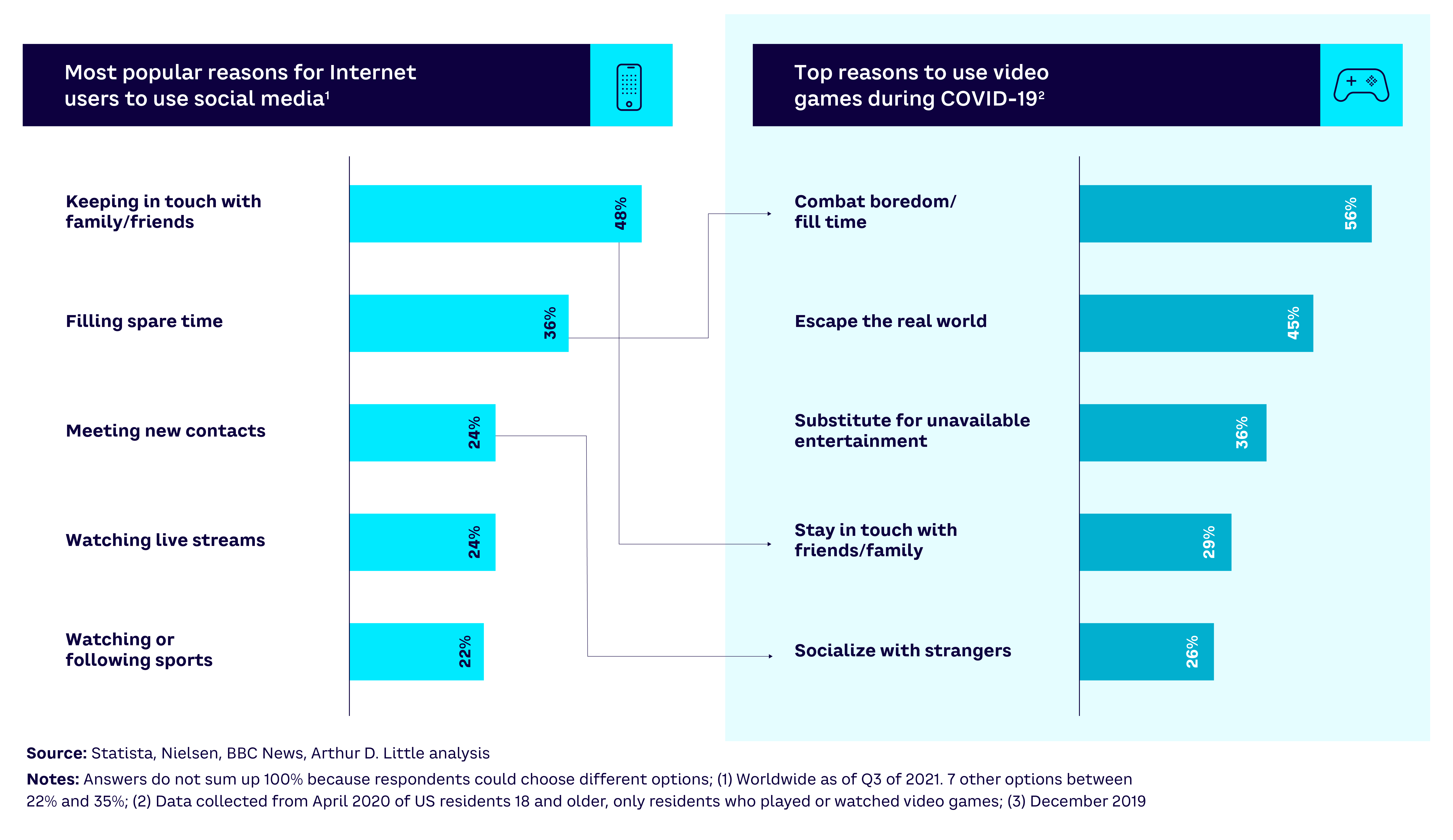

Games are becoming social platforms that players use to interact with their friends and share experiences. For example, 29% of gamers surveyed by Nielsen in April 2020 said they used games to stay in touch with friends and family, and 26% to socialize with strangers (Figure 6).[6] Showing the growing convergence between gaming and social platforms, 250 million people are registered players on Fortnite — around the same size as Snapchat’s user base. Each of these steps has brought in new audiences and revenues.

Gaming platforms are also increasingly becoming collaborative platforms. Some companies are allowing third parties to develop their own games and virtual assets using their engines.

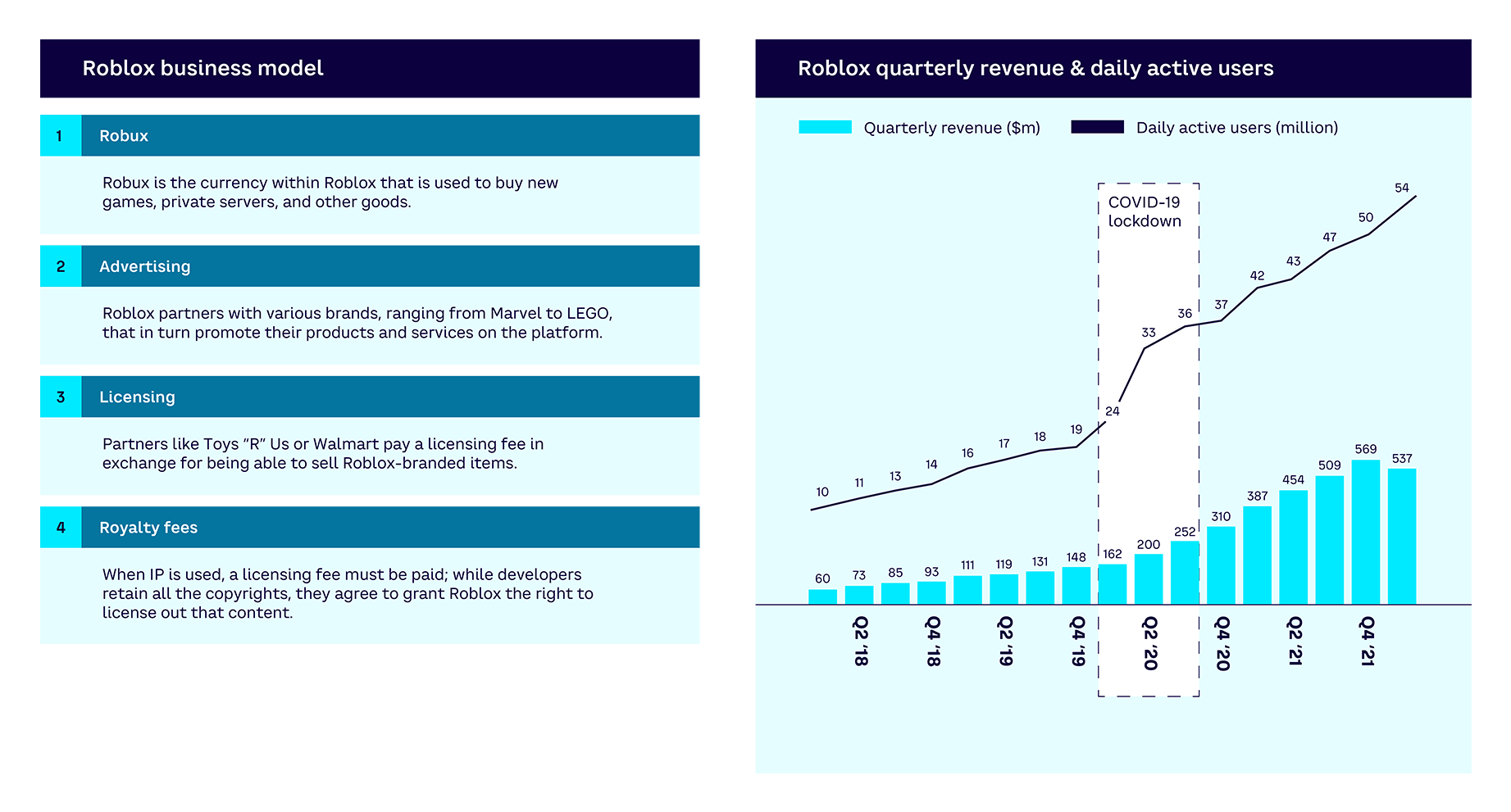

From the outset, Roblox has been a platform where developers and users can build and publish their own games or other virtual assets. Demonstrating the increasing interest in virtual assets, in May 2021 Gucci released a limited edition of in-game virtual bags that sold for US $4,115 on the platform (more than the physical equivalent!), as part of a wider partnership.[7]

Other games and video creation platforms have also opened up their technology. Epic Games’ Unreal Engine, which powers Fortnite, can be used to create 3D environments, while Unity’s real-time content development engine enables the development and creation of films, as well as games and high-quality, immersive architectural and automotive renders.

Social network & media industry: From social experience to collaboration platforms

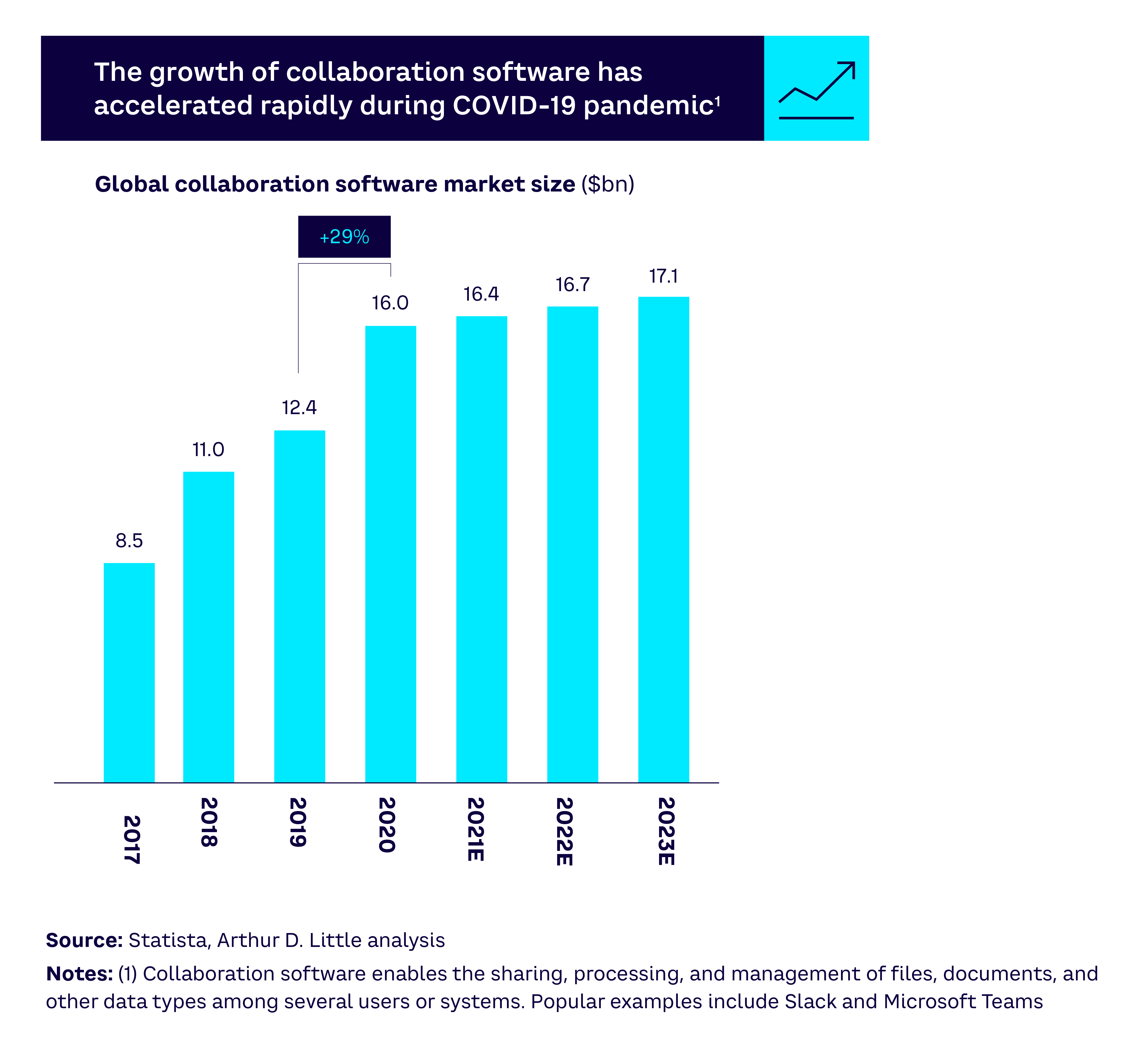

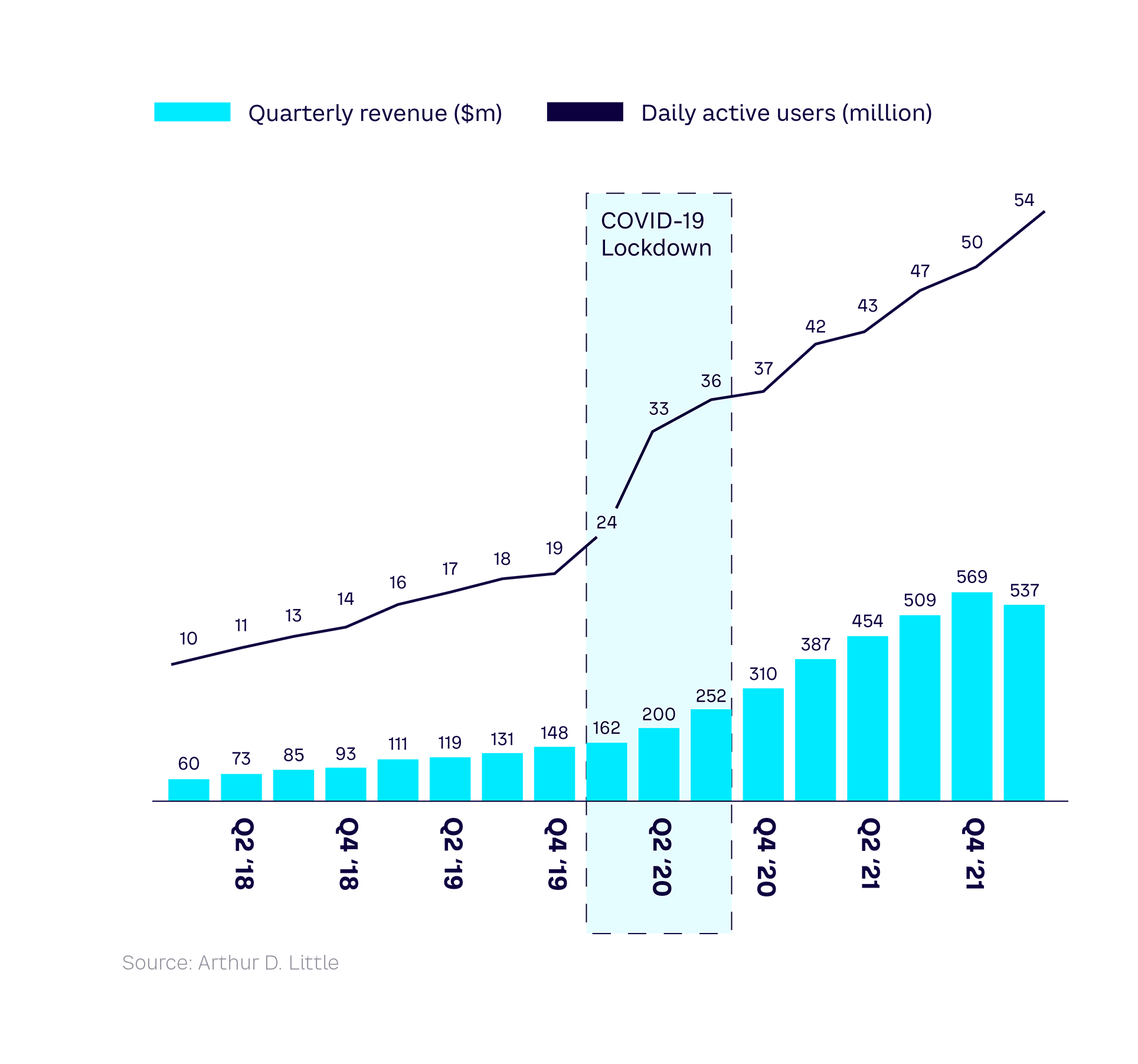

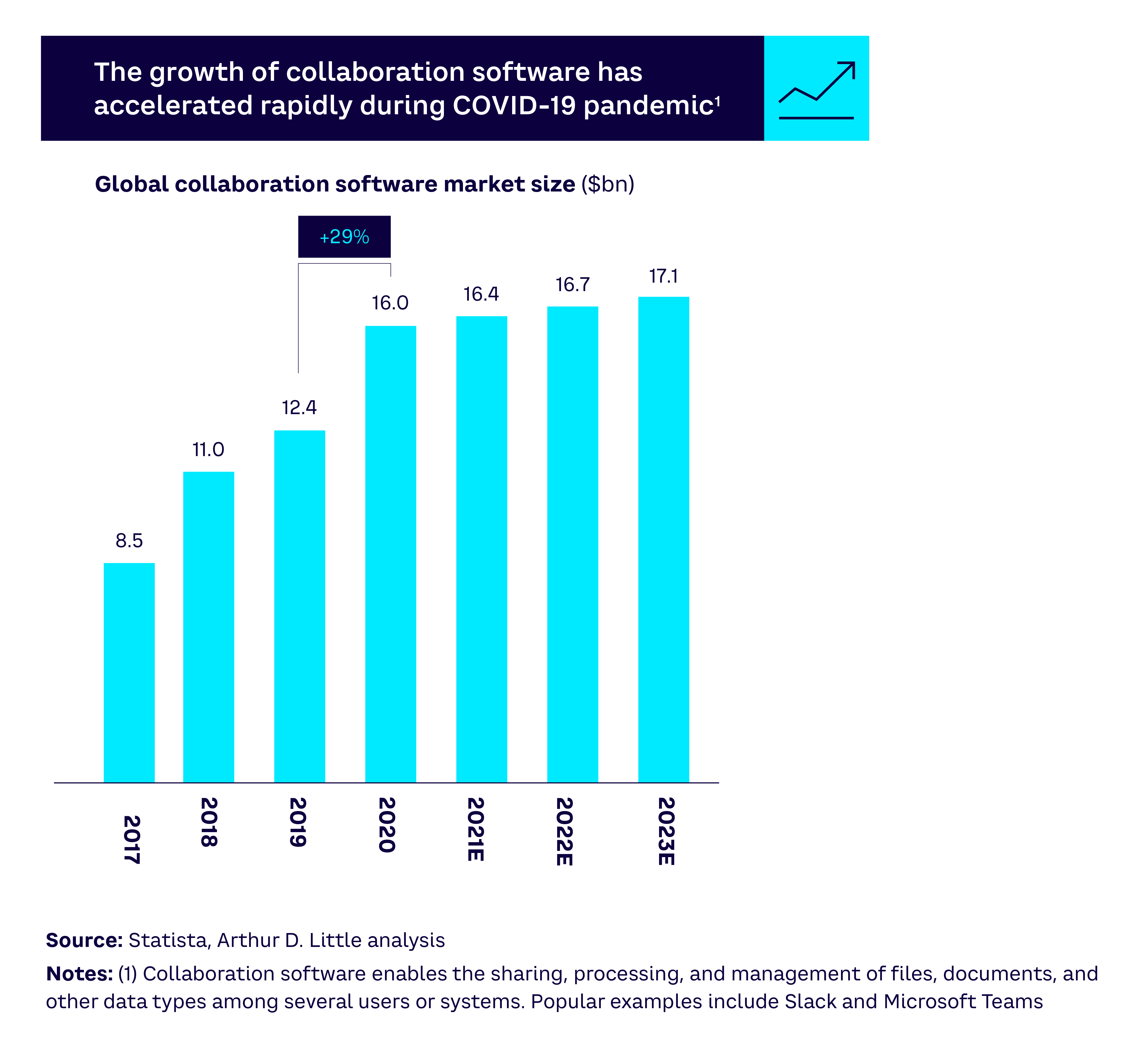

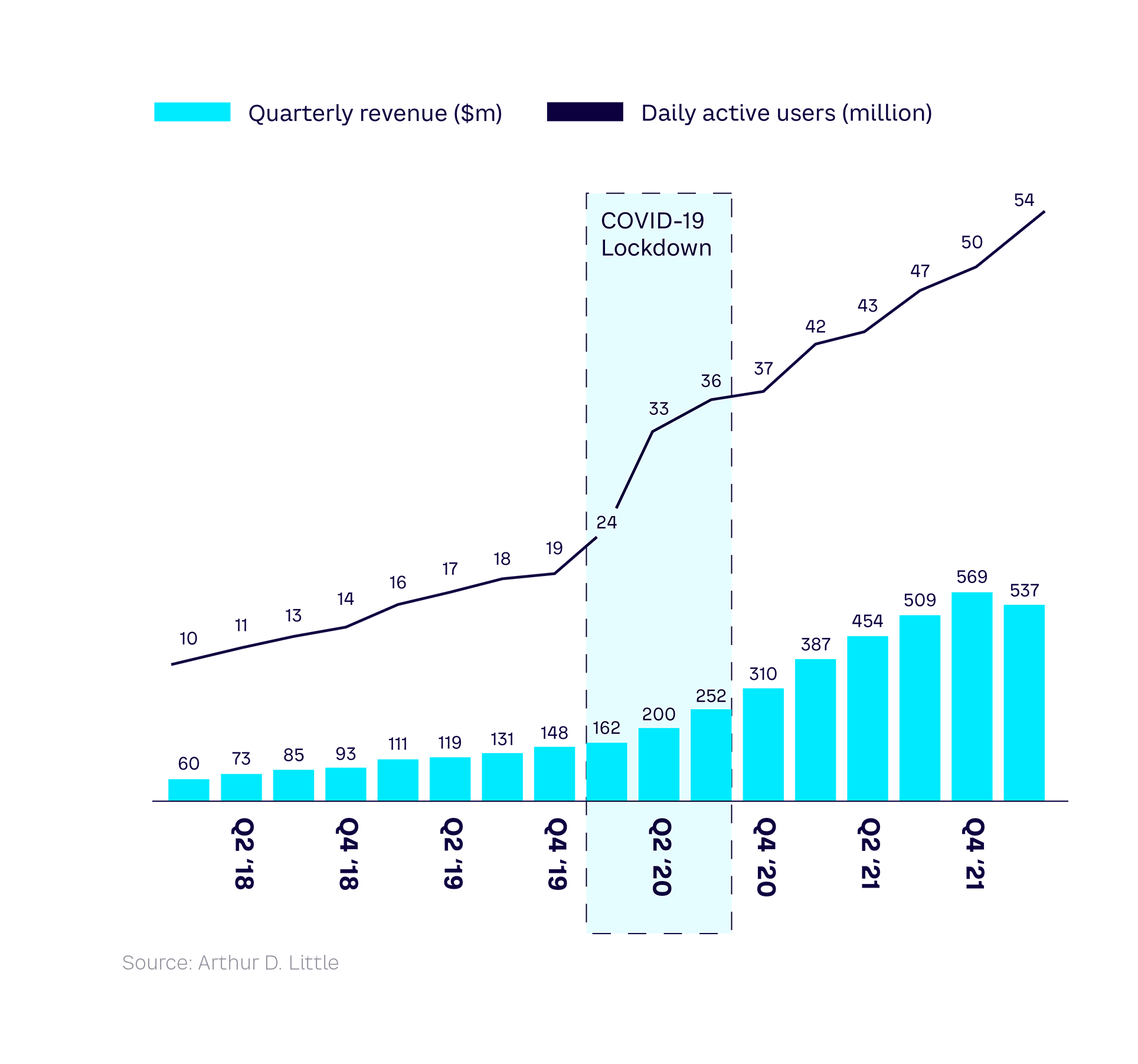

The pandemic turbocharged the adoption of collaboration platforms such as Microsoft Teams or Mural (see 2019-2020 bump in Figure 7). Helped by the shift to hybrid working, these tools are now standard for most organizations. Returning to voice-only telephone conference calls feels as if it would be a step back in time.

Collaboration platform features such as real-time chat and multi-person video calls enable social interactions. Social media platforms are aggressively moving into the collaboration space themselves to combat this potential threat to their usage (and revenues).

In August 2021, Meta (Facebook) released the open beta of Horizon Workrooms, a collaboration app targeted at teams managing remote work environments, designed to improve their ability to collaborate and connect remotely. The app offers virtual meeting rooms, whiteboards, and video call integration for up to 50 people. It works across both virtual reality and the Web, with users able to bring their desks, computers, and keyboards into VR. Avatars and spatial audio aim to deliver an immersive experience, with gesture-based control rather than a need for controllers or keyboards.

Horizon Workrooms aims to compete directly with players such as Microsoft. Coming from the collaboration platform space, Microsoft has already developed the Mesh collaboration toolset, which aims to provide more immersive virtual meetings by enabling presence and shared experiences from anywhere — on any device — through MR applications.

Three main properties of the Metaverse distinguish it from today's Internet

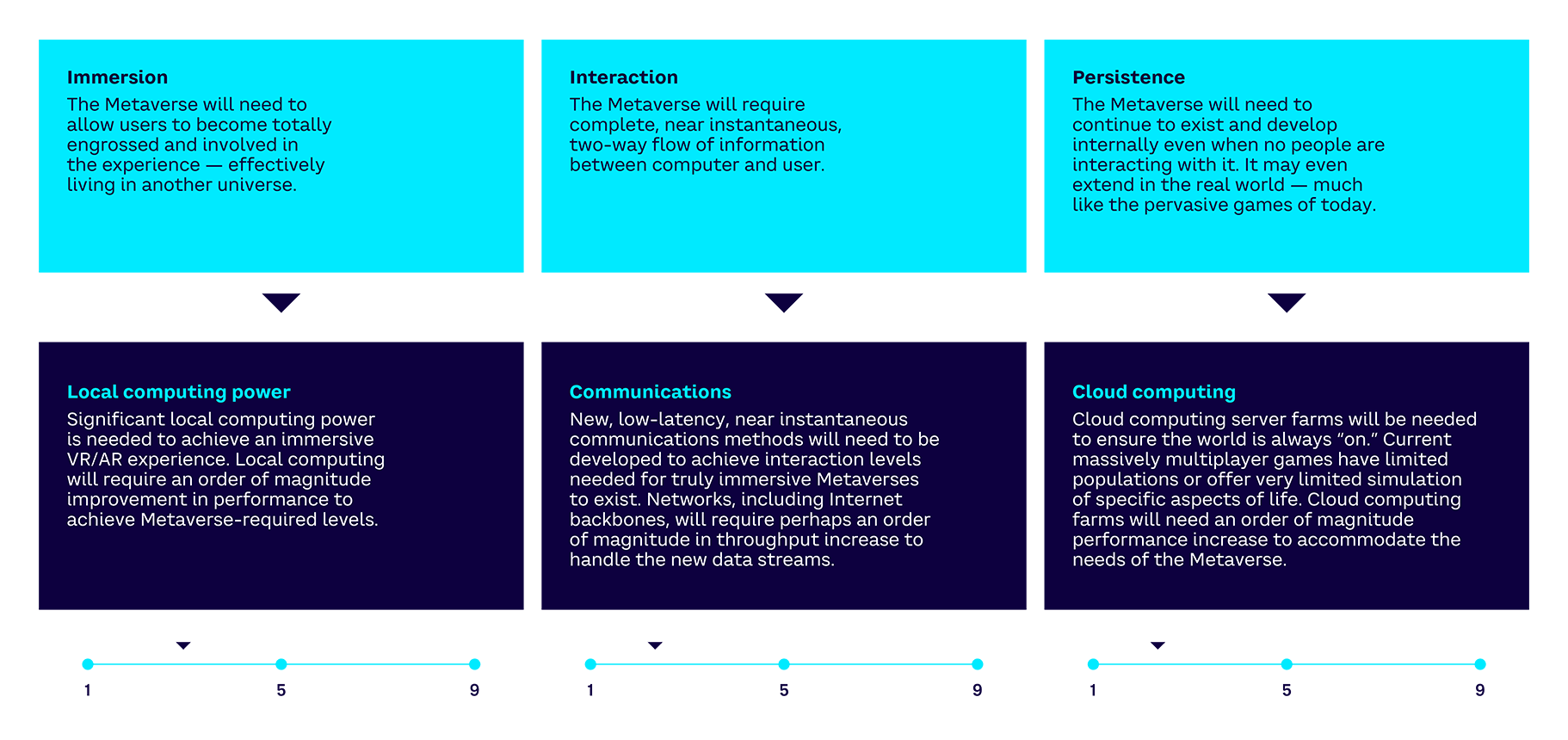

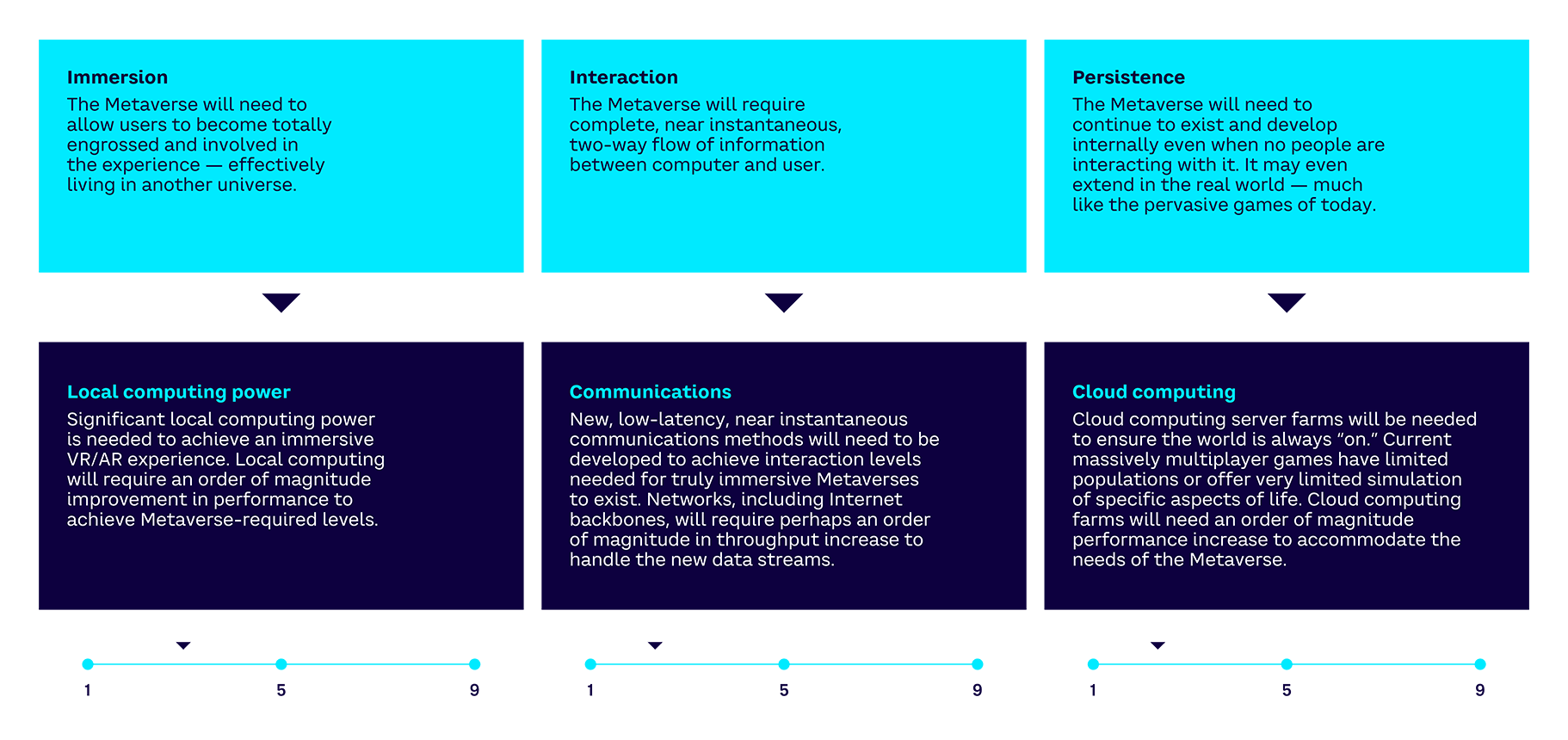

The Metaverse has three main properties that distinguish it from today’s Internet: immersion, interaction, and persistence.

- Immersion. The Internet is becoming spatialized and immersive: the real world is becoming the screen. Users can become totally engrossed and involved in the experience, effectively living in another universe or in an augmented universe, where one or several layers of data, information, or representations are superimposed on the real world. Today’s Internet is cognitive, meaning it gives access to knowledge, whereas the Metaverse also provides perspective, and will increasingly involve all five of our senses.

- Interaction. Real-time interaction between users (or between users and machines) is becoming increasingly natural. For example, today, a video conference with more than three or four people provides a very degraded experience compared to a real-life meeting. In particular, the timing of speech does not flow as it would naturally, which leads to significant cognitive fatigue. Real-time interactions that come close to real life will be at the heart of the Metaverse.

- Persistence. The synthetic world, objects, and people will continue to exist and develop internally even when users don’t interact with them. It may even extend to the real world, much like in today’s pervasive games.

The confluence of recent trends in users, software, and hardware is making the revolution possible for the first time

As we have shown, the concept of the Metaverse has been around for many years. Previous attempts at creating virtual worlds, such as Second Life, have faltered. So, what has changed? Essentially, three ingredients are now coming together to provide the building blocks of the future Metaverse: software, hardware, and users. Just like with other digital technology domains in the past, this confluence provides a good indication that we could possibly be at the inflection point in an exponential growth curve.

Users: A very rapid growth of the user base and corresponding revenues

The user base for synthetic worlds is growing dramatically. It is no longer limited to younger people, although adoption among them is high: more than a quarter (26%) of teens say they own a virtual reality headset, according to research from Piper Sandler.[8]

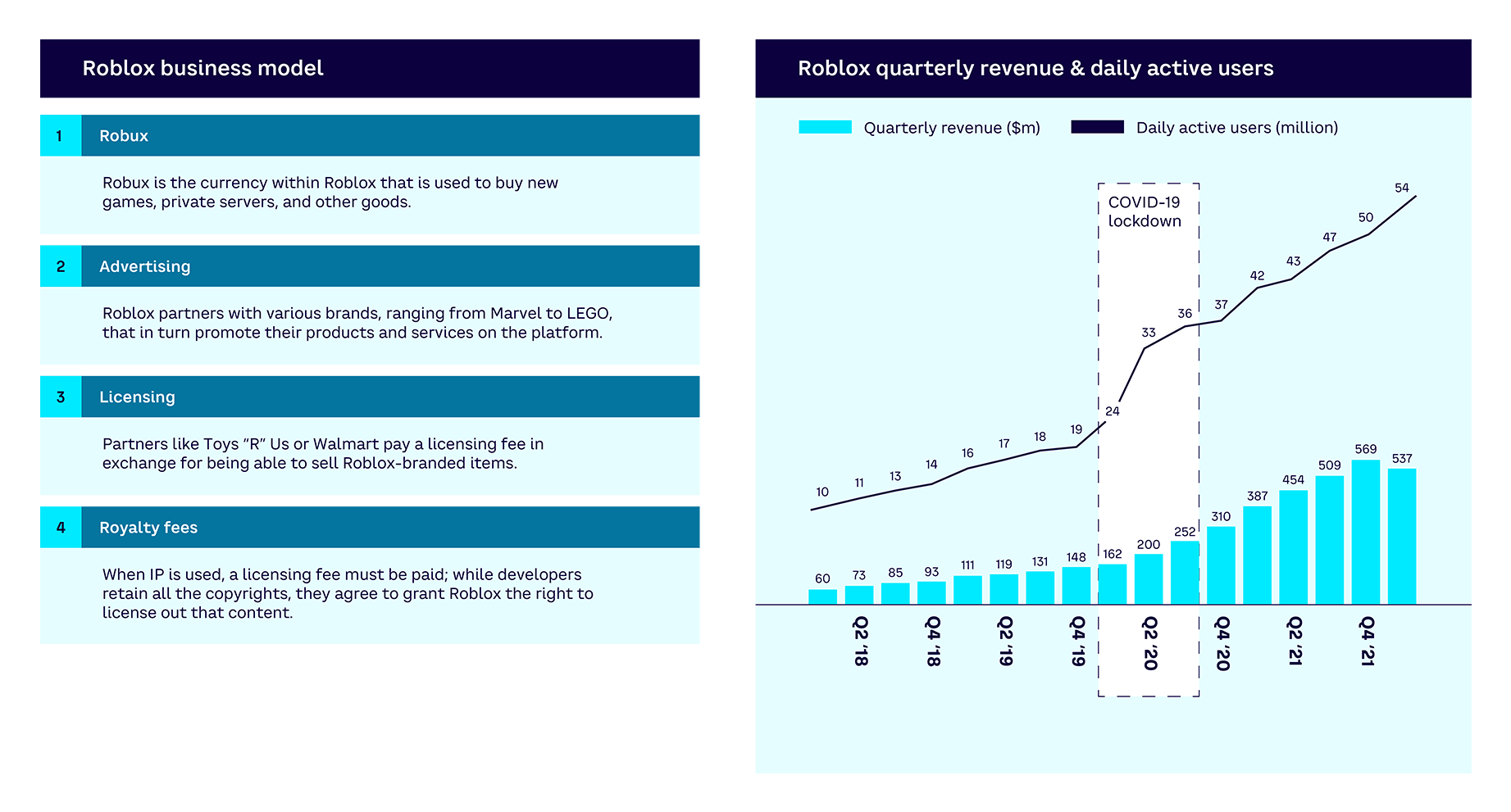

Take the example of Roblox, which was established in 2006 as a video game mainly for teenagers and pre-teenagers. It generated revenues of $2 billion in 2021 by selling virtual assets in the game. These numbers are significant, even if small compared to the $300 billion generated by the whole gaming industry. Roblox’s growth is even more impressive: the number of daily users has increased from 10 million to more than 55 million in the past four years. This growth was accelerated by the COVID lockdown, as can be seen in Figure 8.
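
The figures quoted above can be put on a common footing with a little arithmetic. The sketch below is illustrative only: it treats "more than 55 million" as exactly 55 million, assumes smooth compounding over the four years, and uses hypothetical names (`cagr`, `user_growth`, `revenue_share`) introduced just for this example.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate implied by growing from start to end over `years`."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the text (daily users in millions, revenues in billions of US dollars).
DAILY_USERS_START_M = 10
DAILY_USERS_END_M = 55   # assumption: "more than 55 million" treated as exactly 55
YEARS = 4
ROBLOX_REVENUE_BN = 2
GAMING_INDUSTRY_REVENUE_BN = 300

user_growth = cagr(DAILY_USERS_START_M, DAILY_USERS_END_M, YEARS)
revenue_share = ROBLOX_REVENUE_BN / GAMING_INDUSTRY_REVENUE_BN

print(f"Implied annual growth in daily users: {user_growth:.0%}")
print(f"Roblox share of gaming industry revenues: {revenue_share:.2%}")
```

Under these assumptions, the daily user base grew by roughly 53% a year, while Roblox's revenues amount to about 0.67% of total gaming industry revenues.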

Another company that has seen significant growth is Epic Games. Epic released the online game Fortnite in 2017, which became something of a cultural phenomenon. The game generated over $5 billion in revenues in 2020, including proceeds from a live performance by Travis Scott that drew an audience of 12 million people.[9] Young people, the consumers of tomorrow, already spend considerable sums of money to dress up their Fortnite avatar in a fashionable skin. In April 2021, Epic Games announced that it had completed a $1 billion round of funding, which will allow the company to support future growth and pursue its long-term vision for the Metaverse. Epic announced a further $2 billion round of funding in 2022, made up of $1 billion each from Sony Group Corporation and KIRKBI, the holding and investment company behind The LEGO Group. Another live Fortnite performance in 2021 (the Rift Tour, headlined by Ariana Grande) attracted an audience of nearly 78 million.[10]

Familiarity with virtual worlds undoubtedly accelerated with the pandemic, as locked-down consumers of all ages were forced to switch from physical to virtual interactions and companies found ways to enable their homeworking employees to communicate and collaborate online. Even Second Life saw a spike in new registrations in 2020 after years of decline.

Software: Major platforms are allowing third parties to form new value chains

Previously, we described Roblox as a video game for teenagers, although in reality, Roblox is a platform, not a video game. On one side of the platform, users (mostly preteens and teens) can access various experiences: play, meet, socialize, listen to music together (through a recent partnership with Deezer), and go to concerts, among other things. On the other side of the platform, third parties, individuals, or companies create and sell experiences such as virtual worlds or games, as well as various digital assets such as skins to customize avatars. Roblox is thus a platform similar to Apple’s App Store, in that it allows developers to develop applications and users to purchase these applications. It is also like a YouTube of video games, bringing creators and consumers together.

With the infrastructure and the ecosystem that it has built, Roblox has become a major player in developing the Metaverse. It has also managed to attract the attention of many brands, including luxury players. For example, Gucci opened its Gucci Garden virtual space on Roblox for two weeks at the end of May 2021, and, as previously mentioned, released a limited edition of in-game virtual bags that sold for $4,115 each. Cosmetic brands have also started to offer their own virtual beauty products. At the end of April 2020, for example, L’Oréal allowed Snapchat users to virtually try the products of several of its brands, such as Garnier and Lancôme.

Epic Games is also a platform in the sense that its Unreal Engine technology is used by third parties to develop synthetic worlds, experiences, and games. For example, Epic has worked with NASA on VR simulation for Mars exploration and with LEGO to create a child-friendly Metaverse space. (Further details on these collaborations and use cases can be found in Appendix 1: Experience continuum use cases.)

The bottom line is that although Roblox and Epic are known for their games, they are in fact infrastructure platforms. Because they make their development environments and data available, anyone can develop things on these platforms, such as worlds, games, virtual assets, or social experiences in fields as diverse as e-commerce, entertainment, social interaction, and enterprise services.

Hardware: The hardware required to power and access the Metaverse is developing rapidly

Up until now, the hardware available to power and access the Metaverse has not been sufficient to enable the three main properties of immersion, interaction, and persistence that we described above.

However, there are signs that accelerating advances in hardware, at both an infrastructure and man-machine interface level, will reduce some of the barriers to wider adoption in the years to come. Major players are entering the market. Meta bought VR headset developer Oculus in 2014 and is now selling its Quest 2 headset from new, dedicated, physical stores.[11] Apple has filed multiple patents over the last 10 years related directly to VR headsets, as well as increasing hiring and acquisitions in this area. On the infrastructure side, faster fiber networks and 5G rollouts will reduce the impact of latency and increase available bandwidth.

Technologies that enable more convenient and effective high-quality AR and immersivity, such as lightweight glasses and headsets that provide easy transition between VR, AR, and the physical world without bulky equipment, will be transformative in terms of adoption.

Looking further into the future, rapid advances are being made in brain-computer interfaces (BCIs). Elon Musk’s startup, Neuralink, has already successfully implanted AI microchips in the brains of a pig and a monkey, and released a video of the monkey playing the classic video game Pong using only its brain.[12] Another startup, NextMind, is already offering a noninvasive BCI device that reads brain waves from the visual cortex to enable direct control of functions in games.

INTERLUDE #1 — DEFINING THE METAVERSE

Although the Metaverse is still strongly associated with science fiction, it already raises new questions and solutions.

Even if it is difficult to visualize what it will be, we know that the Metaverse is about to play a decisive role for businesses, society, and humans. The mesh of the movie Tron inspired me for this illustration, which aims to define the Metaverse. In this isometric view, some people design an airplane (left), and individuals collaborate with avatars from this virtual universe (middle) to produce this airplane in reality (right).

— Samuel Babinet, artist

2

The Metaverse: Not for another decade

While some analysts claim that the underlying technology for the Metaverse “already exists,”[13] a more detailed analysis of the technology shows that this is not true. The Metaverse, as envisioned by the main players and as we defined it in the previous chapter, does not yet exist — and we forecast that it won’t be fully available for another decade. There are two main reasons for this: first, the various platforms that exist today are not interoperable, which means that it is not yet possible to share experiences, data, information, or other resources across these platforms; and second, even though both hardware and software are getting closer to maturity, closer analysis of the full architecture tells us we are not there yet. We are currently in an era of proto-metaverses.

“People frequently conflate one domain of interoperability with another, and that adds confusion to how people think about the challenges and opportunities.”

— Jon Radoff, CEO, Beamable

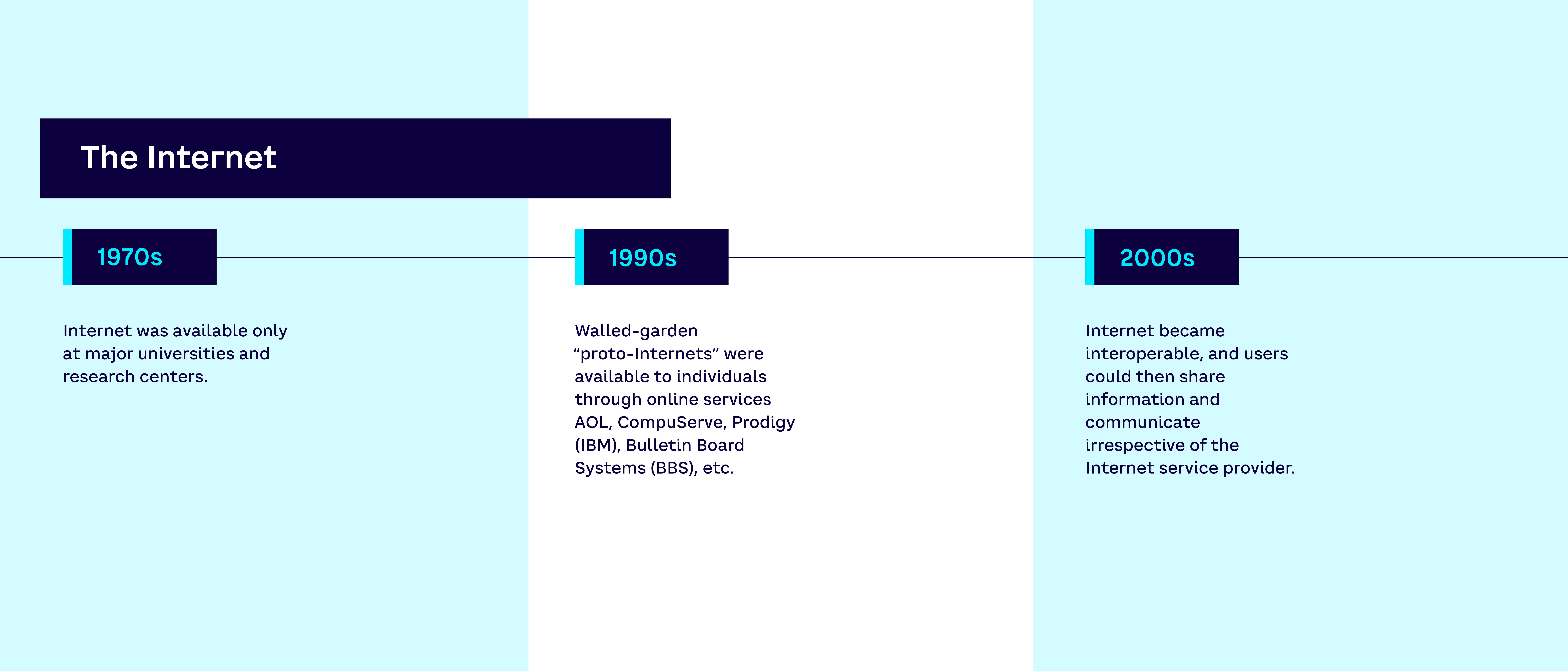

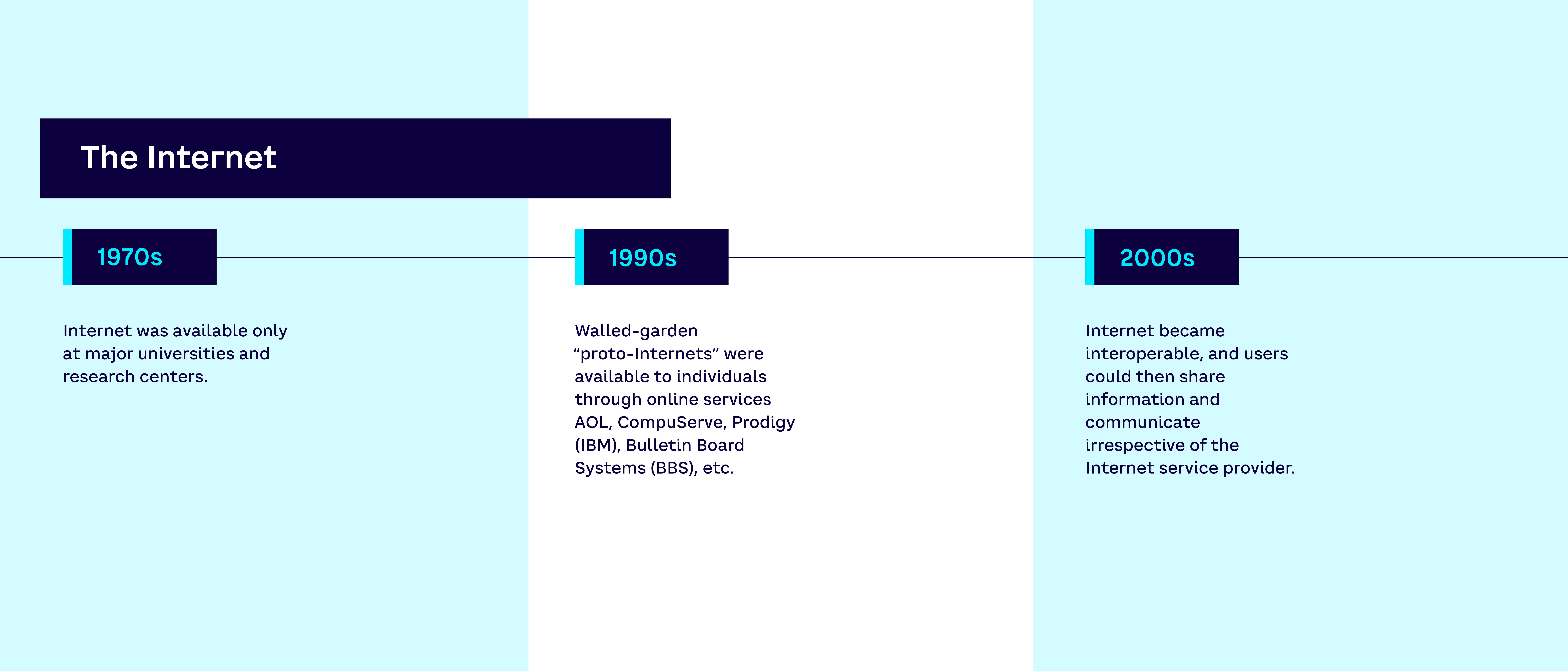

There is no Metaverse until there is interoperability

Try to remember the Internet in the mid-1990s (see timeline in Figure 9). Many readers may remember the mythical sound of the 56k modem. Or AOL and its famous “You’ve got mail.” Once connected to the Internet via AOL, it was possible to access all sorts of strange things. Unfortunately, this door to the Internet was in fact only a door to a proto-Internet surrounded by impassable walls. It was a walled garden without any walkways to other gardens; an Internet bubble not connected to other bubbles. Users were unable to share resources or communicate with other walled gardens such as CompuServe, Prodigy, and so forth.

Toward the end of the 1990s, it became clear that the Web browser had to allow communication and exchange of information with any other user, regardless of their Internet service provider. Users and usage defeated the walled garden model and the Internet became interoperable — at least to some extent.

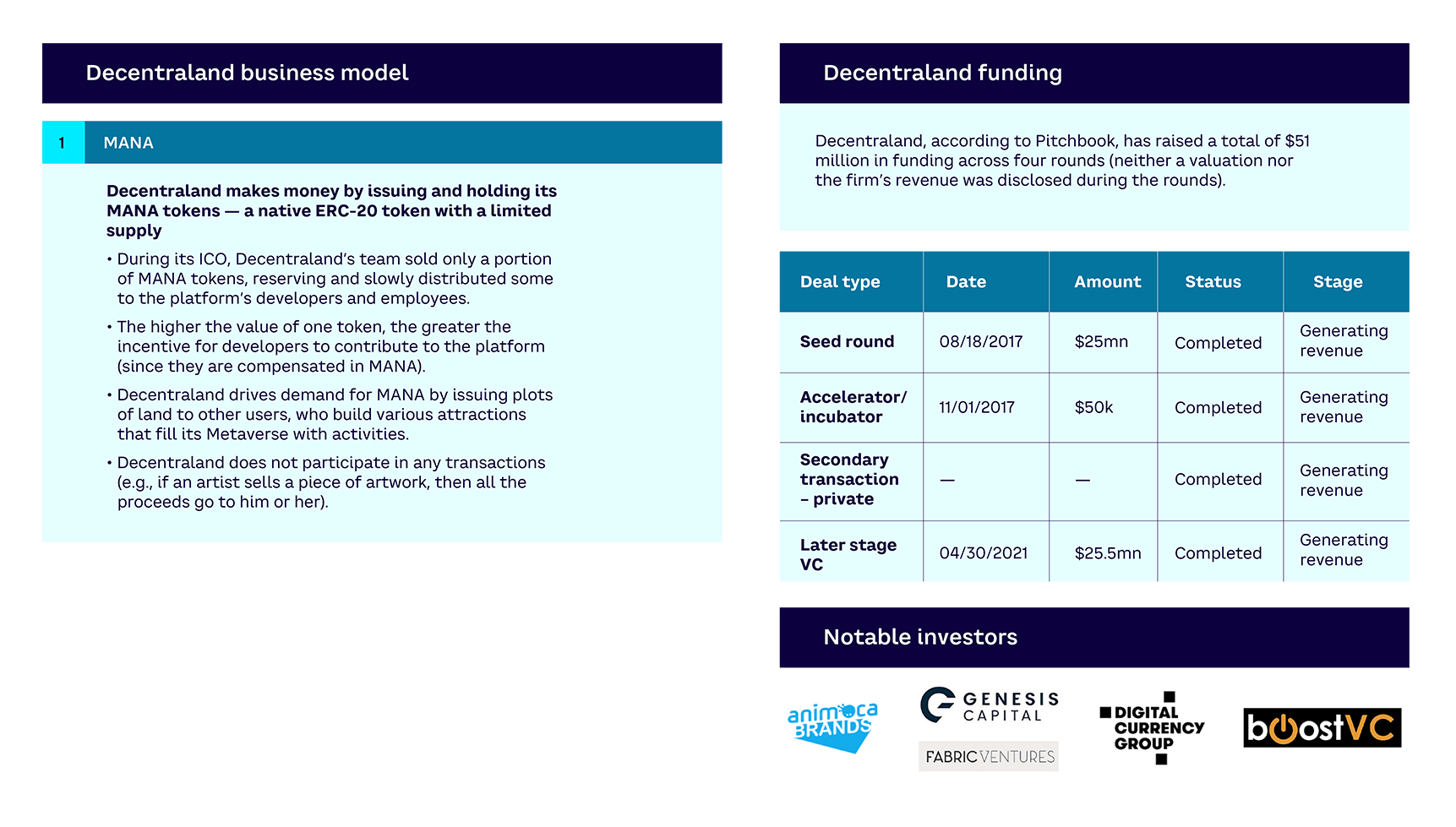

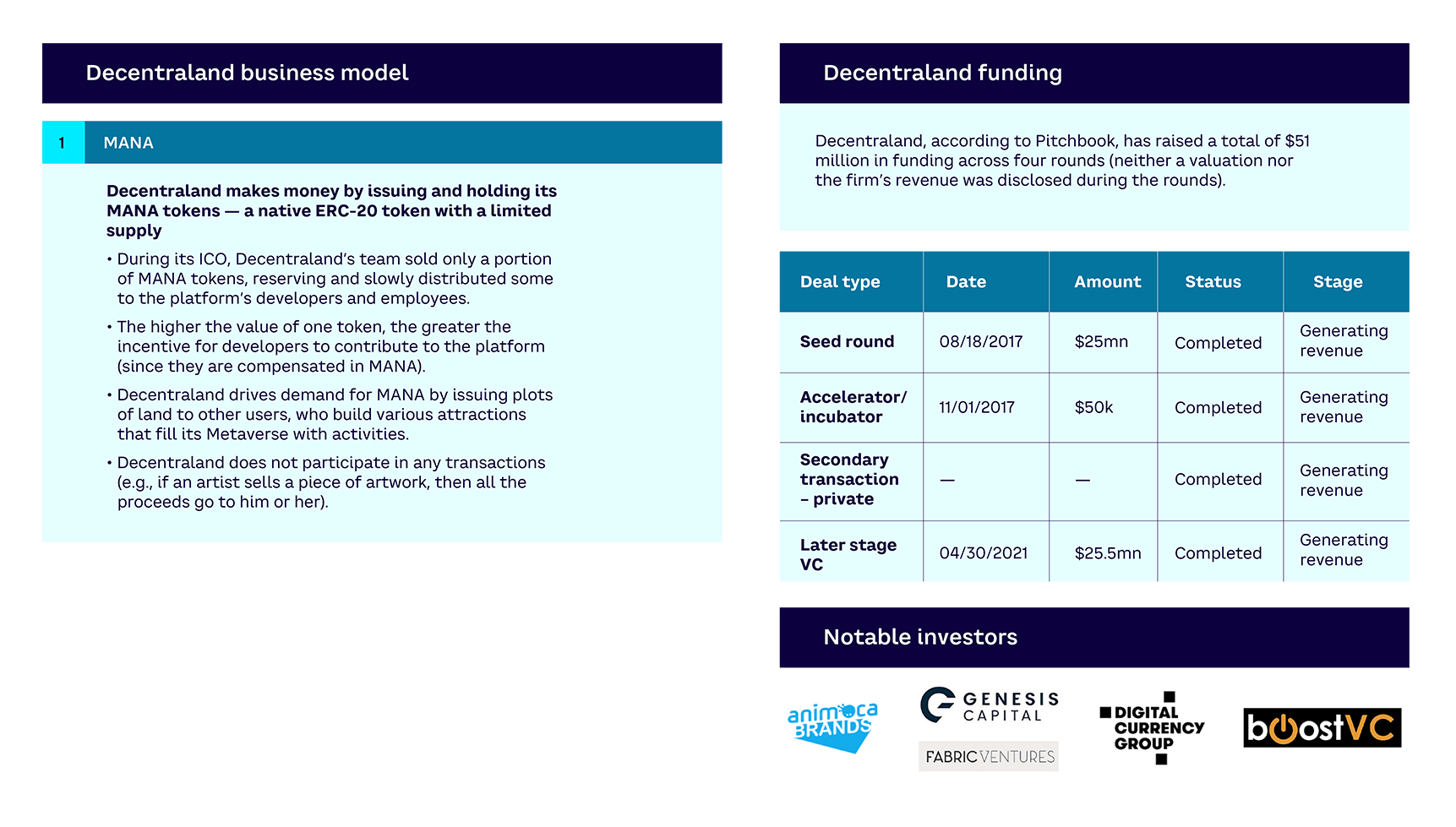

The Metaverse is in the same state as the Internet in the mid-1990s. Today, there is not a Metaverse, but a whole set of proto-metaverses — walled-garden Metaverses. The majority of companies aspiring to develop the Metaverse — such as Roblox, Epic Games, NVIDIA, Microsoft, Decentraland, or Meta — are actually developing noninteroperable proprietary platforms. This means that currently it is impossible to exchange virtual assets or even to communicate between one platform and another. Until there is interoperability, there will be no Metaverse.

Interoperability is one of the factors that may have a strong impact on the development of the Metaverse. At the same time, there is a tension between vendors and users. On one hand, vendors invest massively in the development of the Metaverse and want a return on investment. They will therefore tend to push for a noninteroperable Metaverse to keep users within their own environment. On the other hand, users and brands will maximize value by having an interoperable Metaverse. At this point, no one knows whether interoperability will occur; it remains a key uncertainty.

A detailed analysis of the full Metaverse architecture shows the path to maturity

Some of the technologies behind the Metaverse, as defined in the previous section, are not particularly new. However, since the Facebook (Meta) announcement in October 2021, many people have had an uneasy feeling: either the Metaverse is indeed something completely new, or else it’s simply a repackaging of a set of technologies that have been under development for several decades.

What is the situation, exactly? What are the building bricks that make up the Metaverse? What are the technologies in each of these building bricks? How mature are they and when will they become mature? When can we hope (or fear) to see the “real” advent of the Metaverse?

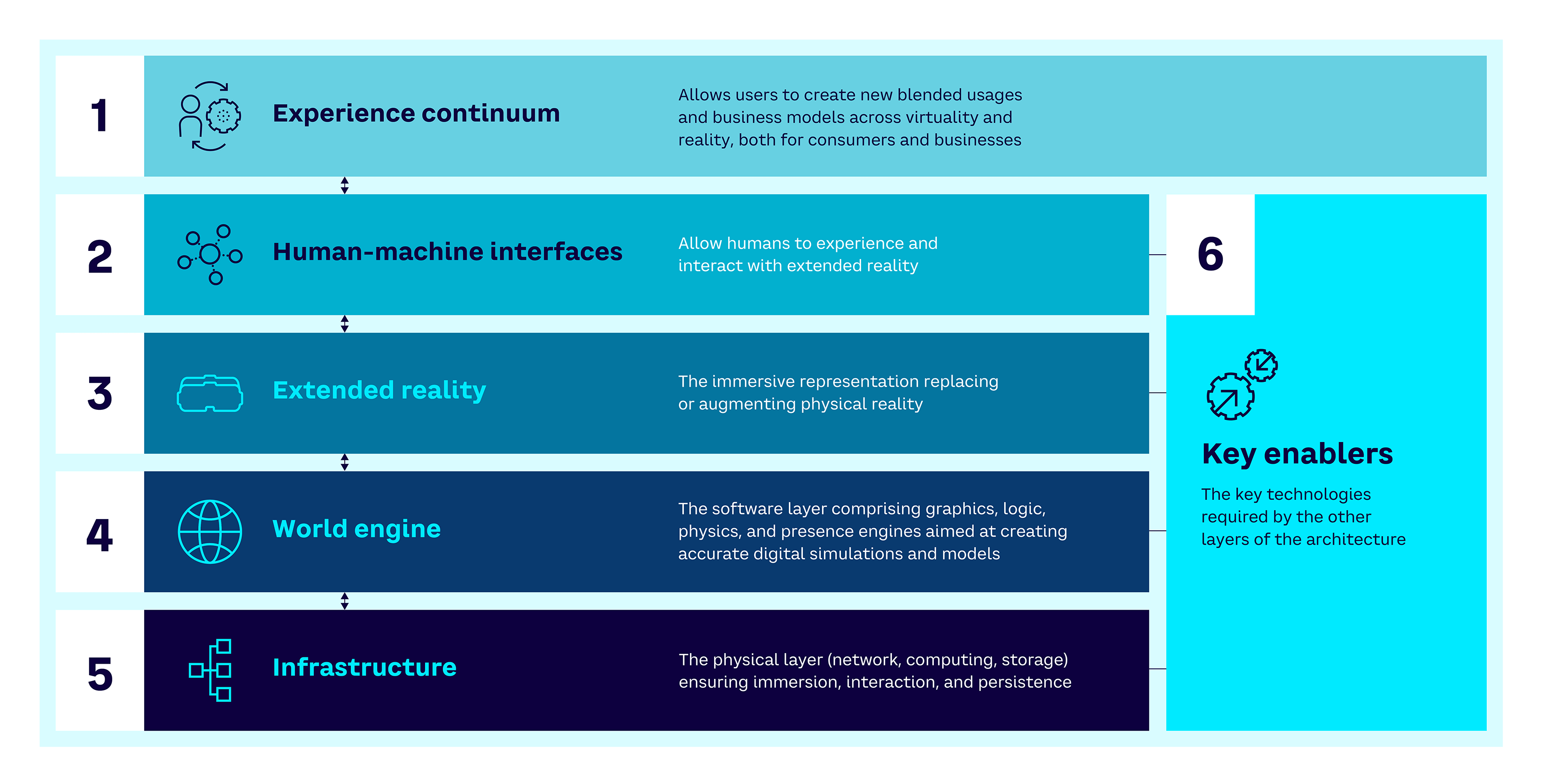

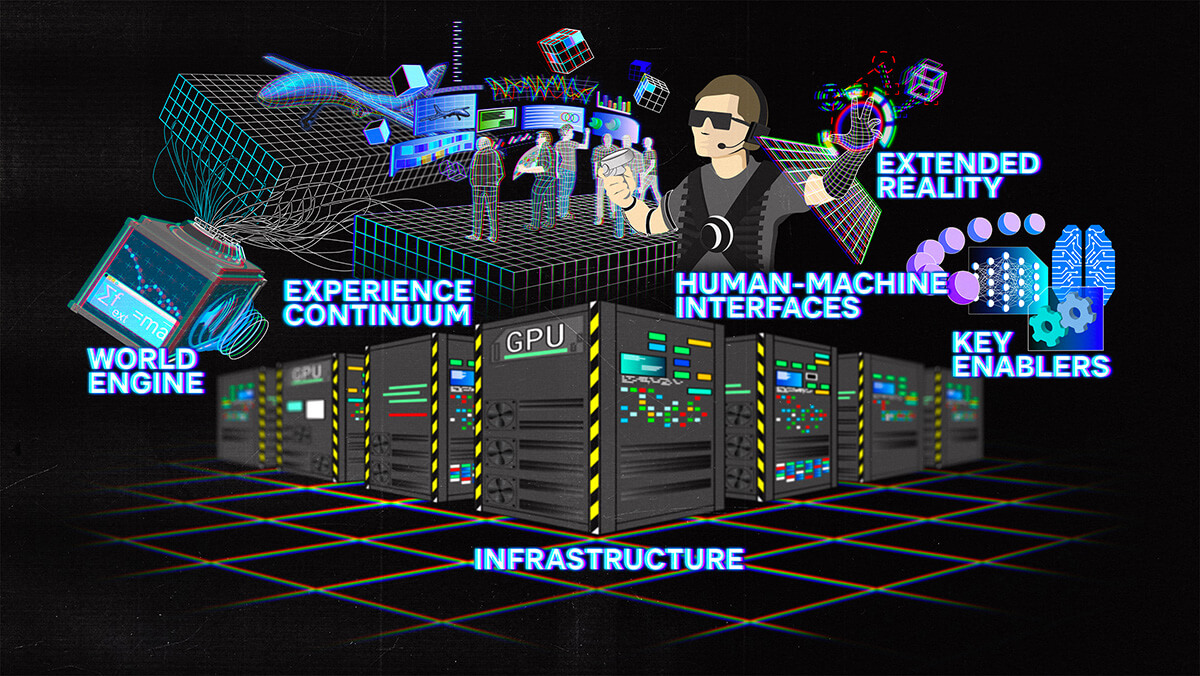

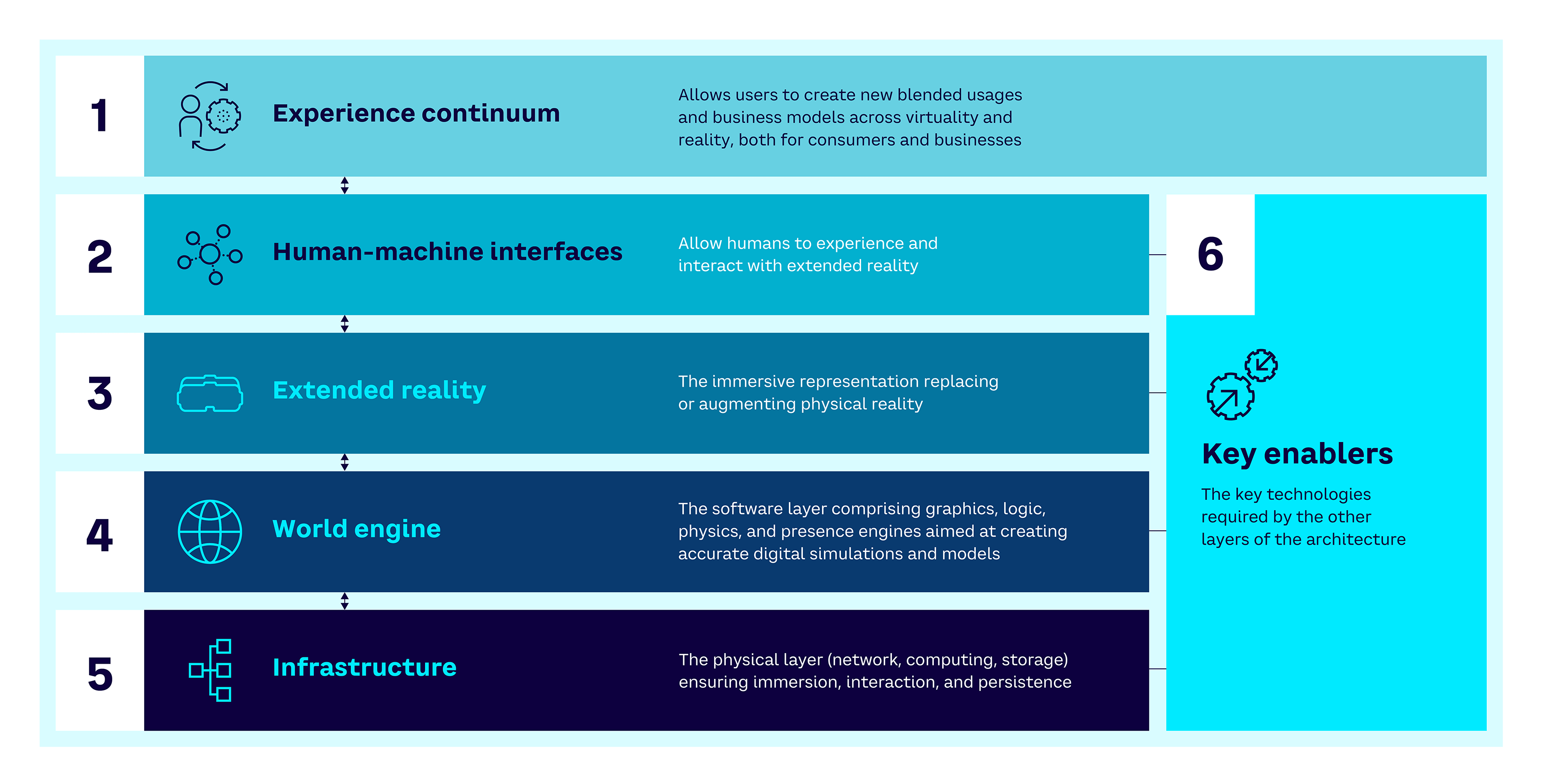

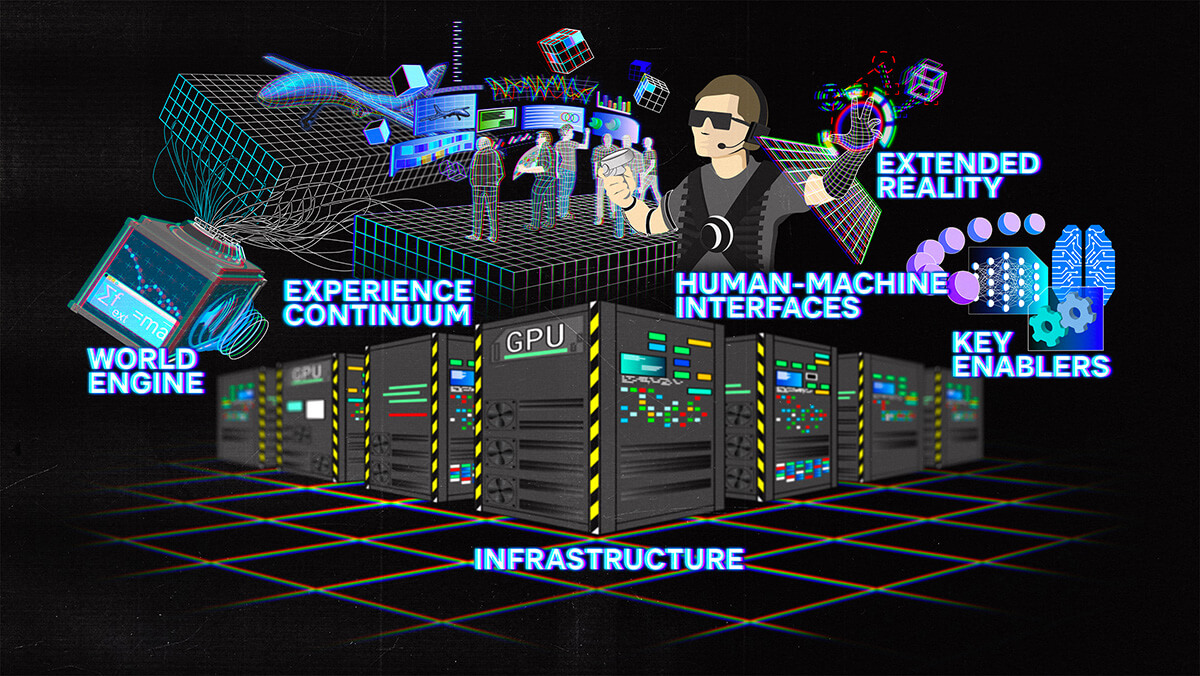

To begin answering these questions, we developed a framework, which aims to represent the architecture of the Metaverse in six layers (see Figure 10). These layers effectively cover the value chain of the Metaverse, in which the top level corresponds to new user experiences and business models, and the lowest level corresponds to the required hardware and software infrastructure.

This is, of course, a simplified view of reality (if we can talk about “reality” when we talk about the Metaverse!). This simplified view, however, helps us to analyze the complexity that underlies the Metaverse. Below, we dive into each of the layers to understand its nature and the maturity of each of the technological elements that compose it. This helps us to answer the questions: Should I be interested in the Metaverse as part of my business? And what are the opportunities today and in the future? As we will see in the final section of this report, even in this embryonic state, many business opportunities can be seized in all the layers.

“The Metaverse is the tool to search, find, and capture value in complexity by immersing (space) oneself in it and projecting (time) oneself into it.”

— Michel Morvan, President and cofounder, Cosmo Tech

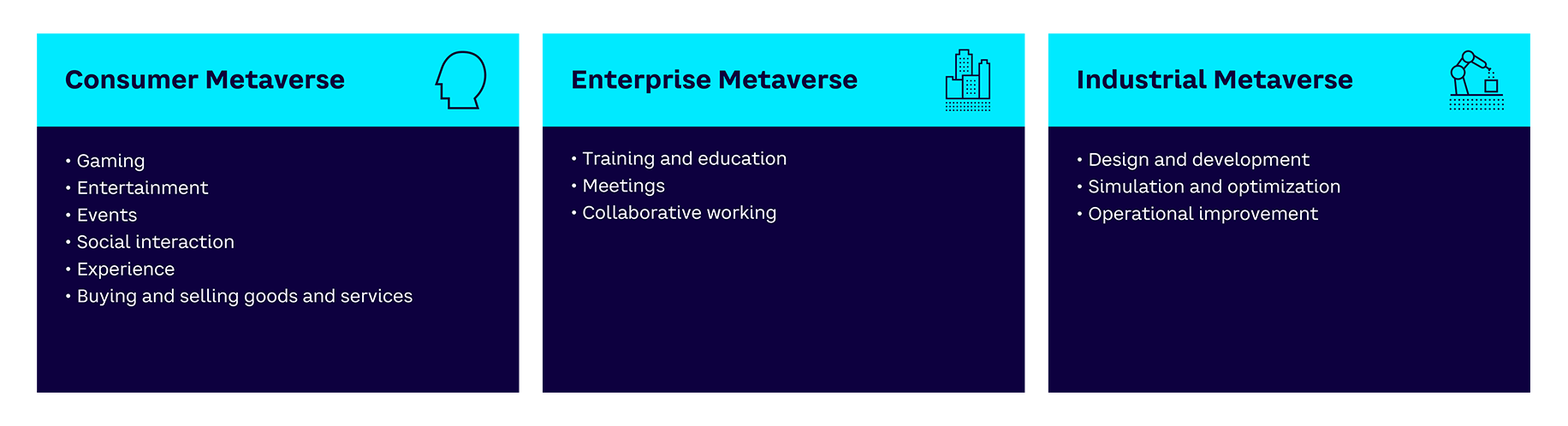

Layer 1: Experience continuum — Use cases and business models

Layer 1, which we call “experience continuum,” is the layer that brings together all the new use cases, experiences, and business models, existing and future. These new use cases and business models blur the boundaries between reality and virtuality. Like the Internet today, use cases can be segmented into three categories: consumer (socializing, entertaining, playing, etc.), enterprise (meeting, exchanging, collaborating, etc.), and industrial (modeling a production line or distribution network, collaborating around a digital twin, etc.). We will describe Layer 1 in more detail in the next chapter when we consider the opportunities and use cases that exist today.

Layer 2: Human-machine interfaces — The gateway

Layer 2, which we call “human-machine interfaces” (HMIs), is, as the name suggests, the layer that allows humans to perceive and interact with Layer 3’s “extended reality” (described below). HMIs are the gateway to the Metaverse. They include a mix of hardware and software that allows users to send inputs to the machine and the machine to send outputs to users, thus forming a consistent interaction loop.

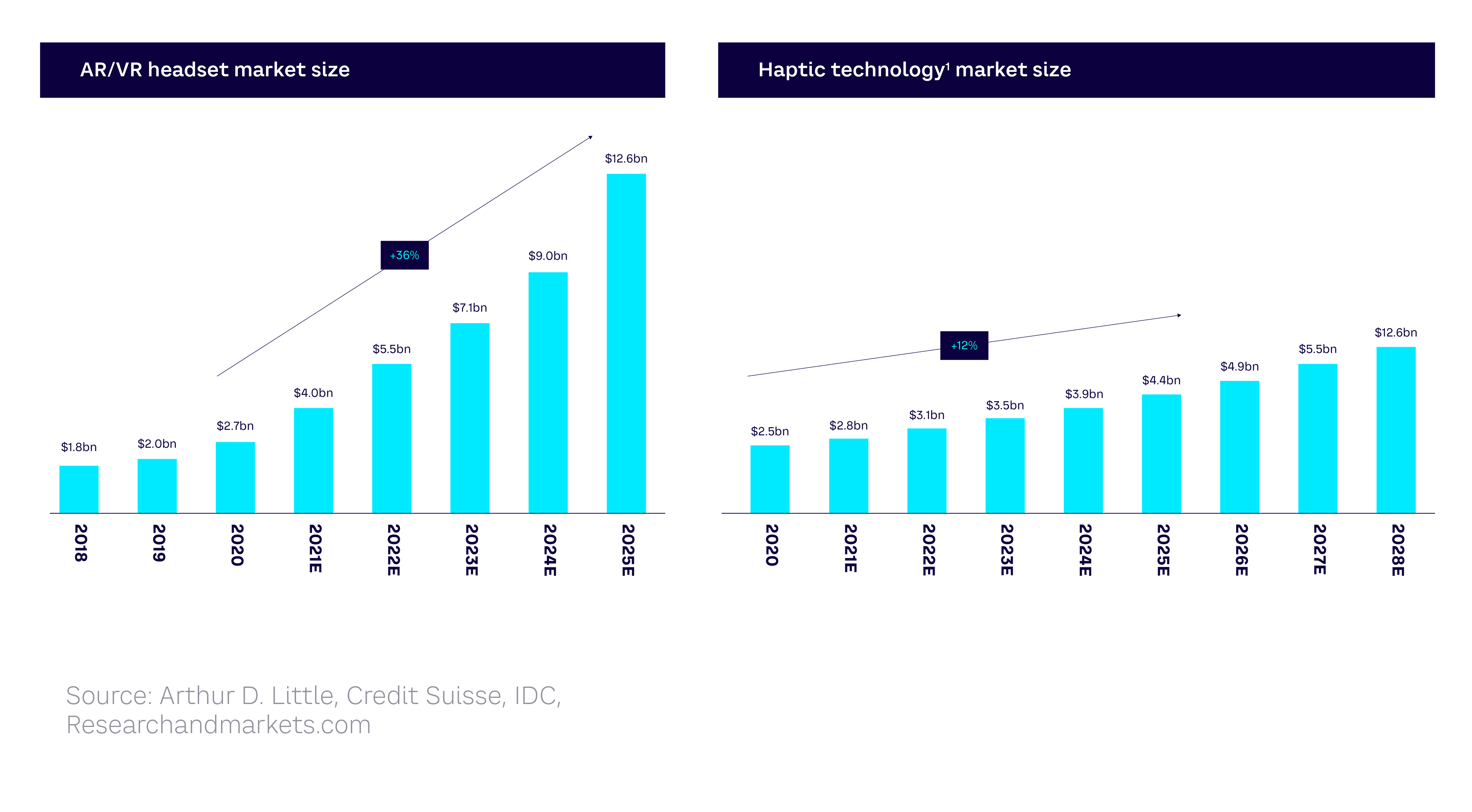

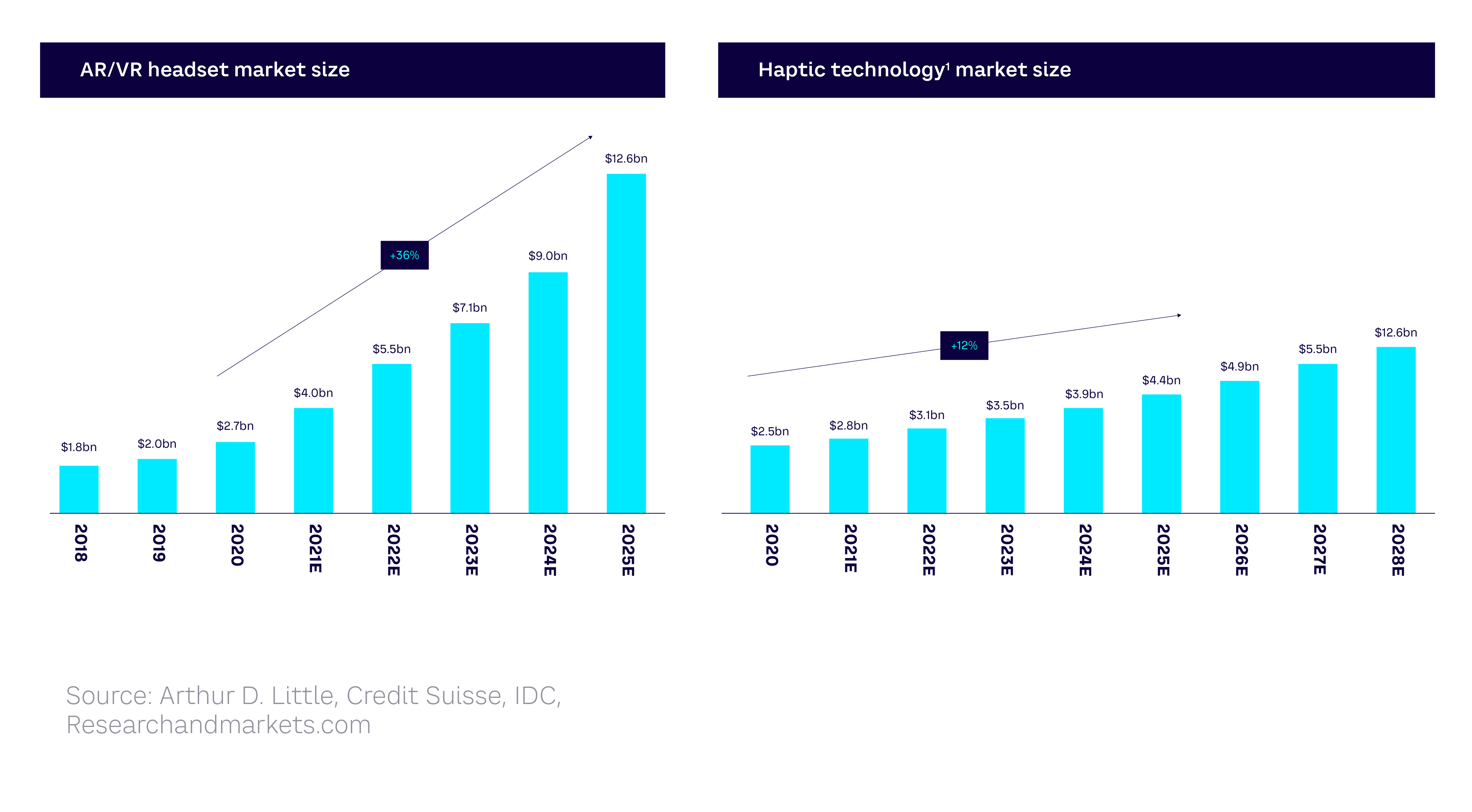

The HMI market is forecast to see rapid expansion over the coming years, with over 35% yearly growth in the AR/VR headset sector up to 2025 and a 12% increase in sales of haptic technologies up to 2028 (see Figure 11).

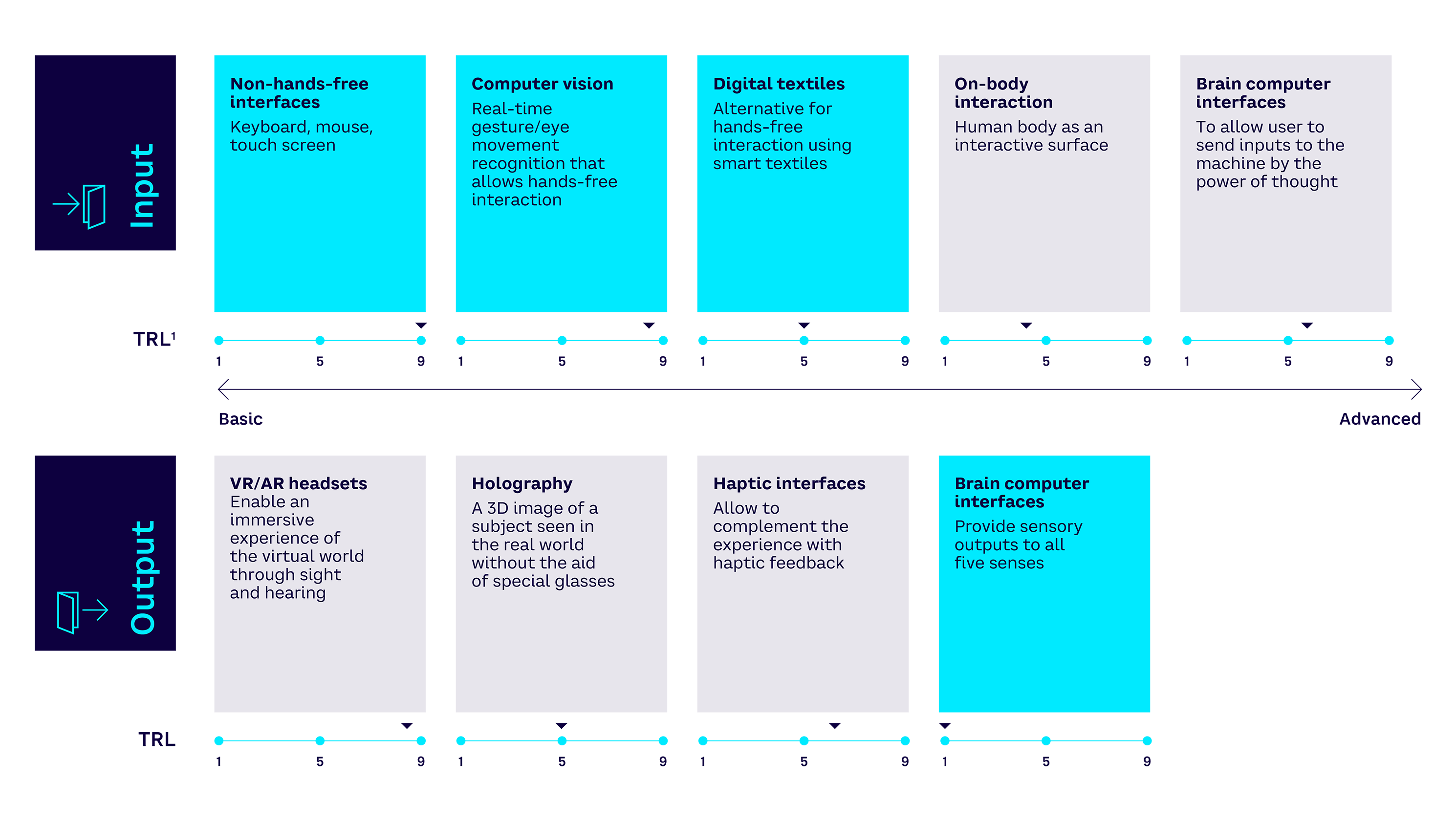

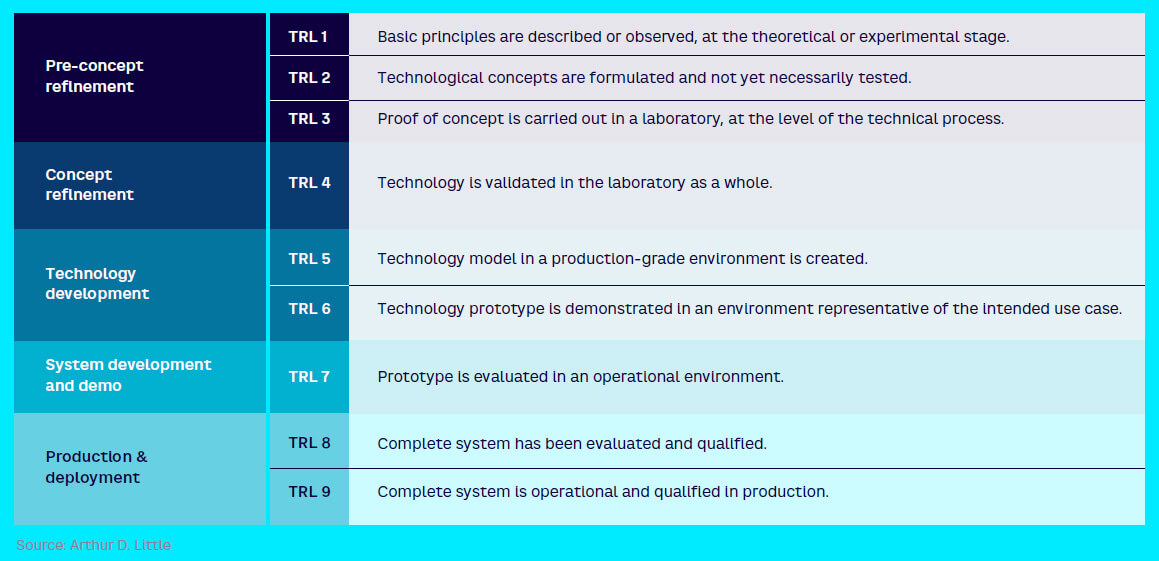

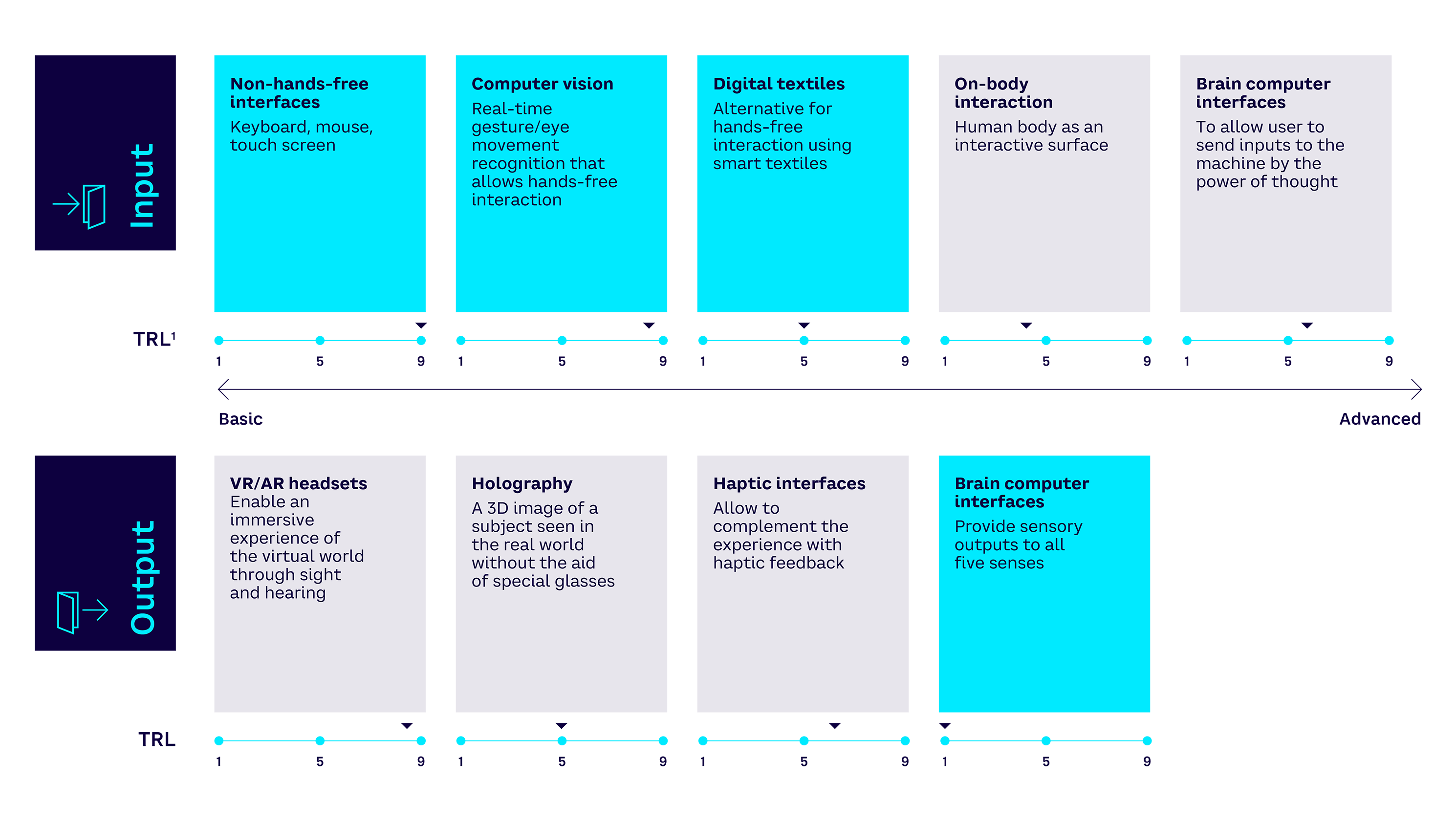

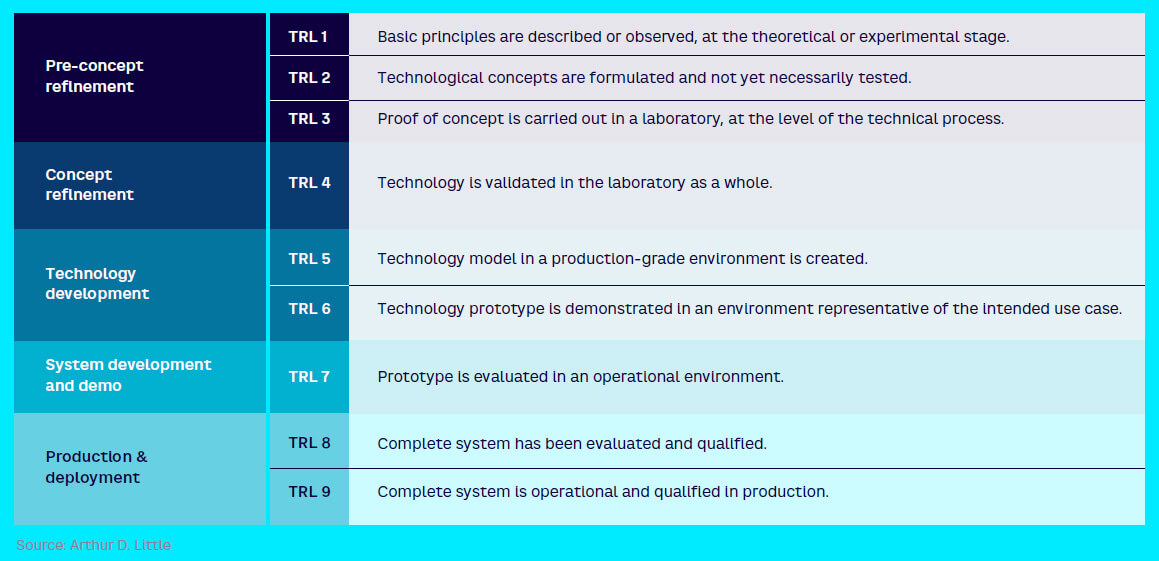

Some of the underlying technologies, such as the keyboard and mouse on the input side, or the screens on the output side, are very mature. In contrast, other technologies, such as brain-computer interfaces, are much less mature. Between the two, there is a whole range of more or less mature HMIs, such as VR and/or AR visors, holography, and haptic interfaces on both the input and output sides (see Figure 12, which shows HMI technologies mapped by technology readiness level [TRL] — see Appendix I for further description).

Overall, we can conclude that the way users will immerse themselves in the Metaverse is a critical uncertainty that will have a major impact on the rate of adoption. The more these technologies advance, the more immersion and interaction with the Metaverse will involve all five of our senses. We predict that AR glasses, despite their current immaturity compared to VR headsets, are the interface most likely to revolutionize usage and adoption in the coming five years. The real world is becoming the screen.

On-body interaction technology

On-body interaction technology uses the human body as an interactive surface, eliminating the need for a touchscreen or other hardware device. Users can either tap or swipe specific parts of their body to access specific applications or perform location-independent actions anywhere on their body.

It offers the advantages of always-available control, an expanded input space, and additional proprioceptive and tactile cues that support nonvisual use. Players in this space include Makeability Lab (which has been exploring a suite of finger-mounted sensors), Soli, and Ultraleap.

Current challenges include being able to demonstrate the accuracy of interactions and overcoming potential embarrassment at performing on-body interactions in public, which could hold back adoption.

Brain-computer interfaces (input type)

BCIs enable users to send inputs to the machine through the power of thought. A BCI system consists of four components: signal acquisition, feature extraction, feature translation, and device output. Two types of BCI exist: invasive and noninvasive.

Prototype invasive input BCIs have already been successfully developed. At Stanford University in May 2021, for example, a microchip implanted in the brain of a paralyzed man allowed him to communicate by text at speeds approaching those of the typical smartphone user.[14] Companies and startups developing invasive BCIs include Elon Musk’s venture Neuralink.

BCIs may eventually be used routinely to replace or restore useful functions for people severely disabled by neuromuscular disorders or to improve rehabilitation for those with strokes or head trauma. They could also augment natural motor outputs for pilots, surgeons, and other highly skilled professionals.

And although it may sound like science fiction, some noninvasive BCIs are already available commercially, with companies such as NextMind, Emotiv, and Kernel involved in the space.

The future of BCIs depends on progress in three critical areas: development of comfortable, convenient, and stable signal-acquisition hardware; BCI validation and dissemination; and proven BCI reliability and value for many different user populations.

VR/AR headsets

The most well-established HMIs, VR/AR headsets are already widely used with video games as well as in other applications, including simulators and trainers. They comprise a stereoscopic head-mounted display (providing separate images for each eye), stereo sound, and head-motion-tracking sensors, which may include devices such as gyroscopes, accelerometers, magnetometers, or structured light systems. Some headsets also have eye-tracking sensors and gaming controllers.

Since they were first launched, VR/AR headsets have improved both technically (in terms of resolution and lightness) and in cost. However, they are not yet widely available to the general public due to key challenges related to comfort and affordability. Other challenges include resolution, field of view, movement tracking, and immersivity capabilities. Players in this space include Apple, HP, Oculus (owned by Meta), Valve Index, and HTC Vive.

The three main performance factors that are considered when evaluating VR headsets are resolution (pixels per degree), field of view (FOV), and refresh rate. Resolution has improved year-on-year to ~30 pixels per degree and is getting closer to the eye-limiting resolution of ~60 pixels per degree, or normal sight. These performance factors are key for future mass adoption. Even if AR headsets/glasses are currently at a lower maturity level, it is likely that AR glasses will be the main interface in the coming three to five years.
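As a rough illustration of the resolution metric above, angular resolution can be approximated by dividing the horizontal pixel count per eye by the horizontal field of view. The sketch below is a simplification that assumes pixels are spread evenly across the FOV (real lenses are not uniform), and the panel width and FOV in the example are hypothetical values, not the specifications of any real headset.

```python
# Approximate angular resolution of a headset, in pixels per degree (PPD).
# Assumption: pixels are distributed uniformly across the field of view.

EYE_LIMIT_PPD = 60.0  # eye-limiting resolution quoted in the text (~60 PPD)


def pixels_per_degree(horizontal_pixels: int, horizontal_fov_deg: float) -> float:
    """Return the average number of pixels covering one degree of FOV."""
    if horizontal_fov_deg <= 0:
        raise ValueError("field of view must be positive")
    return horizontal_pixels / horizontal_fov_deg


def fraction_of_eye_limit(ppd: float) -> float:
    """Return how close a given PPD is to the eye-limiting resolution."""
    return ppd / EYE_LIMIT_PPD


# Hypothetical headset: 3,000 horizontal pixels per eye over a 100-degree FOV.
example_ppd = pixels_per_degree(3000, 100.0)       # 30.0
example_share = fraction_of_eye_limit(example_ppd)  # 0.5
```

The same formula shows the trade-off between the first two performance factors: for a fixed panel, widening the field of view lowers the pixels per degree.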

Manufacturers have reduced the cost of developing devices, although it seems there is room for improvement. In addition to the three performance factors above, the main challenges are related to comfort and affordability. Wired headsets usually have better graphical power, while wireless glasses currently have lower quality. The final challenge, to provide a “sense of embodiment,” is a key research area that focuses on methods to allow the user to see themselves within the virtual scenario without the use of avatars.[15]

Holography

The holography process creates a 3D image of a subject seen in the real world without the aid of special glasses or other intermediate optics. The image can be viewed from any angle, so as the user walks around the display the object will appear to move and shift realistically. Holographic images can be static, such as a picture of a product, or be an animated sequence.

While best known as a method of generating 3D images, holography also has a wide range of other applications. For example, it is already used in data storage (by storing information at high density inside crystals or photopolymers) as well as in applications including art, security, and logistics. And while increasing computing power may enable the creation of digital human models that will render faster and more realistically, this potentially leads to issues around voice cloning and fraudulent impersonation. Leading players include HYPERVSN, MDH Hologram, SeeReal Technologies, and VividQ.

The future of holography lies at the intersection of AI, digital human technology, and voice cloning. Increasing computing power should enable creation of digital human models that will render faster and more realistically. The hope is that, as holographic technologies evolve, they will become increasingly available and portable.

Haptic devices

Haptic devices allow users to touch, feel, and manipulate 3D objects in virtual environments. They are employed for tasks that are usually performed using hands in the real world, such as manual exploration and manipulation of objects. Computer keyboards, mice, and trackballs constitute relatively simple haptic interfaces. Other examples are gloves and exoskeletons that track hand postures and joysticks that can reflect forces back to the user. Companies involved in the area include CyberGlove Systems, Force Dimension, HaptX, and Ultrahaptics.

Key challenges for greater adoption are being able to scale up from laboratory to market readiness, along with overcoming technical challenges such as following or allowing the motion of the user with minimum resistance.

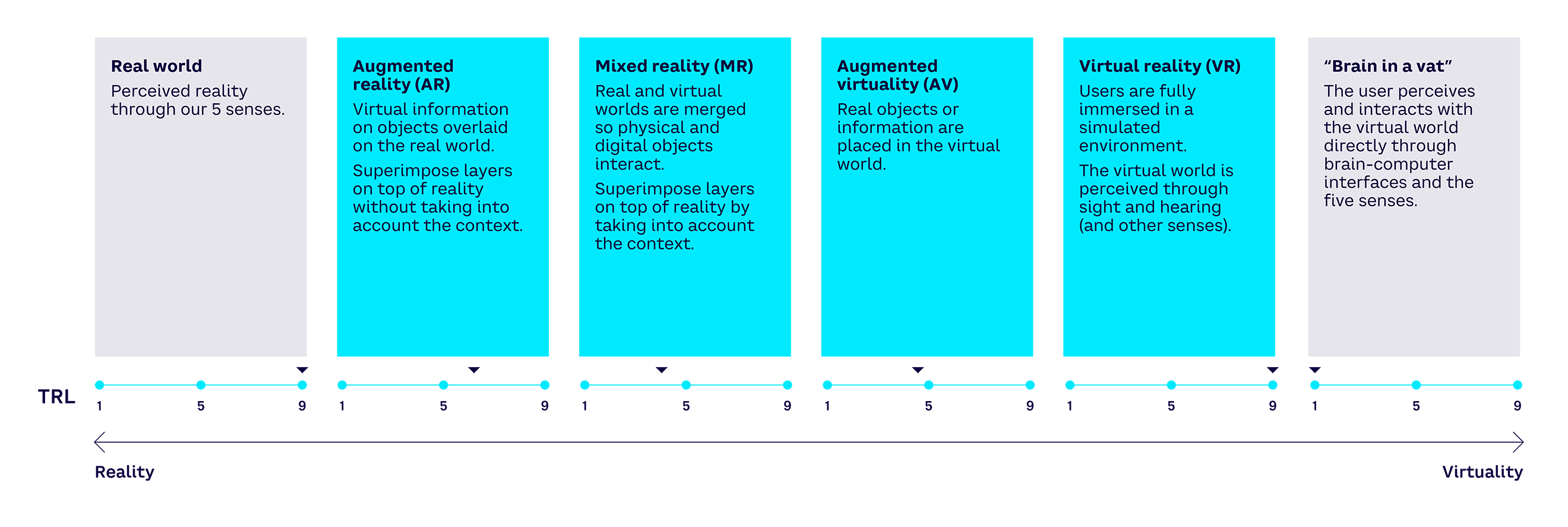

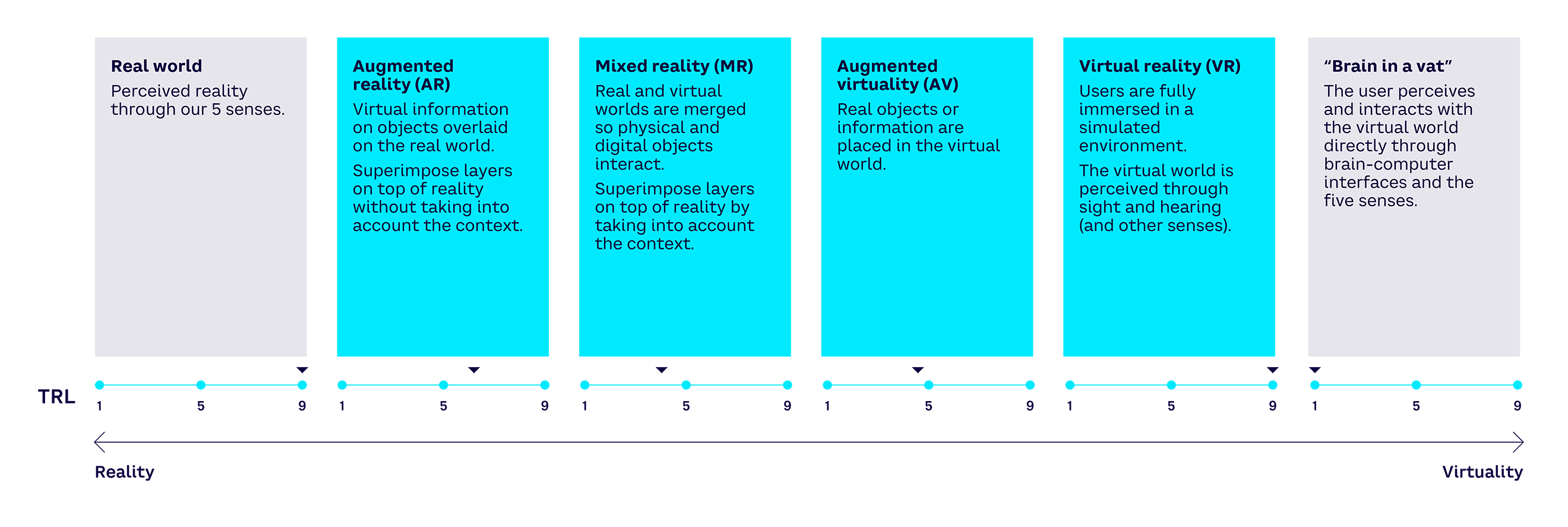

Layer 3: Extended reality — The visible face

Layer 3, which we’ve named “extended reality” (XR), is the immersive representation that augments or replaces reality. It comprises a spectrum ranging from 100% real to 100% virtual. Extended reality combines the real world and real objects with one or more layers of computer-generated virtual data, information, or presentation. Thus, XR may be thought of as the visible face of the Metaverse. XR includes AR, mixed reality (MR), augmented virtuality (AV), and VR, reflecting different mixes of real and virtual information along the spectrum (see Figure 13).

The technologies that make up XR are at varying degrees of maturity. For example, VR today is much more mature than AR. The more these technologies develop, the more they will converge and the more the Metaverse will be synonymous with continuity between the real and the virtual.

Augmented reality

Augmented reality enhances the real-world experience by superimposing on it computer-generated contextual data, information, and virtual experiences. AR software works in conjunction with devices such as tablets, phones, headsets, and more. These integrating devices contain sensors, digital projectors, and the appropriate software that enables these computer-generated objects to be projected into the real world. Once a model has been superimposed in the real world, users can interact with and manipulate it.

AR is commonly used for entertainment purposes (such as Niantic’s Pokémon Go mobile game), but also increasingly in enterprise and industrial applications such as training, maintenance, construction, healthcare, and retail, where users can access contextual data superimposed on real-world objects. Although relatively mature, the technology faces challenges related to costs, accessibility, and education as well as potential privacy concerns since it depends on the ability of the device to record and analyze the environment in real time. Major players active in the AR space include Help Lightning, Niantic, Plattar, SightCall, and Streem.

Mixed reality

Mixed reality refers to the intertwining of real and virtual worlds. In contrast to AR, in MR, digital objects are not just overlaid on but are anchored to the physical world, meaning they can be interacted with. Green screen and video chat backgrounds are nonimmersive 2D examples of MR. However, some definitions of MR include both AR and AV.

Organizations across many industries have already begun developing MR applications to make certain processes safer, more efficient, or more collaborative. It is already used in sectors such as manufacturing, healthcare, and architecture for training and development, remote collaboration, and turning concepts into pre-production models. MR headsets like the Microsoft HoloLens allow for efficient sharing of information between doctors. Other players include the US Air Force Research Laboratory and Skywell Software.

Augmented virtuality

Augmented virtuality refers to predominantly virtual spaces into which physical elements (such as objects or people) are dynamically integrated. The objects or people can then interact with the virtual world in real time with the use of techniques such as streaming video from physical spaces (such as webcams) or the 3D digitalization of physical objects.

The use of real-world sensor information, such as gyroscopes, to control a virtual environment is an additional form of AV, in which external inputs provide context for the virtual view. Current use cases include gaming and design applications. For example, using a touchscreen, people can design their own kitchen or bathroom by selecting and moving virtual appliances and fixtures around a digitally created room. Blacksburg Tactical Research Center is a leading player in the AV space.

Virtual reality

Virtual reality refers to an entirely simulated experience that can be similar to, or completely different from, the real world. It uses VR headsets or multi-projected environments to generate realistic images, sounds, and other sensations that simulate a user’s physical presence in a virtual environment, allowing for movement and interaction.

VR is commonly delivered through a headset comprising a head-mounted display with a small screen in front of the eyes, but it can also be created through specially designed rooms with multiple large screens. While VR is seeing increasing adoption, there are health and safety concerns around its prolonged use, especially by children. Leading companies active in the market include Autodesk, France Immersive Learning, Google, SteamVR, and Threekit.

Overall, it is important to realize that the Metaverse is not all about interactions in a completely virtual world, which is typically the type of experience that many observers focus on and is often the source of skepticism about its likely level of adoption. As XR technologies mature, the Metaverse will offer seamless continuity between the real and virtual worlds.

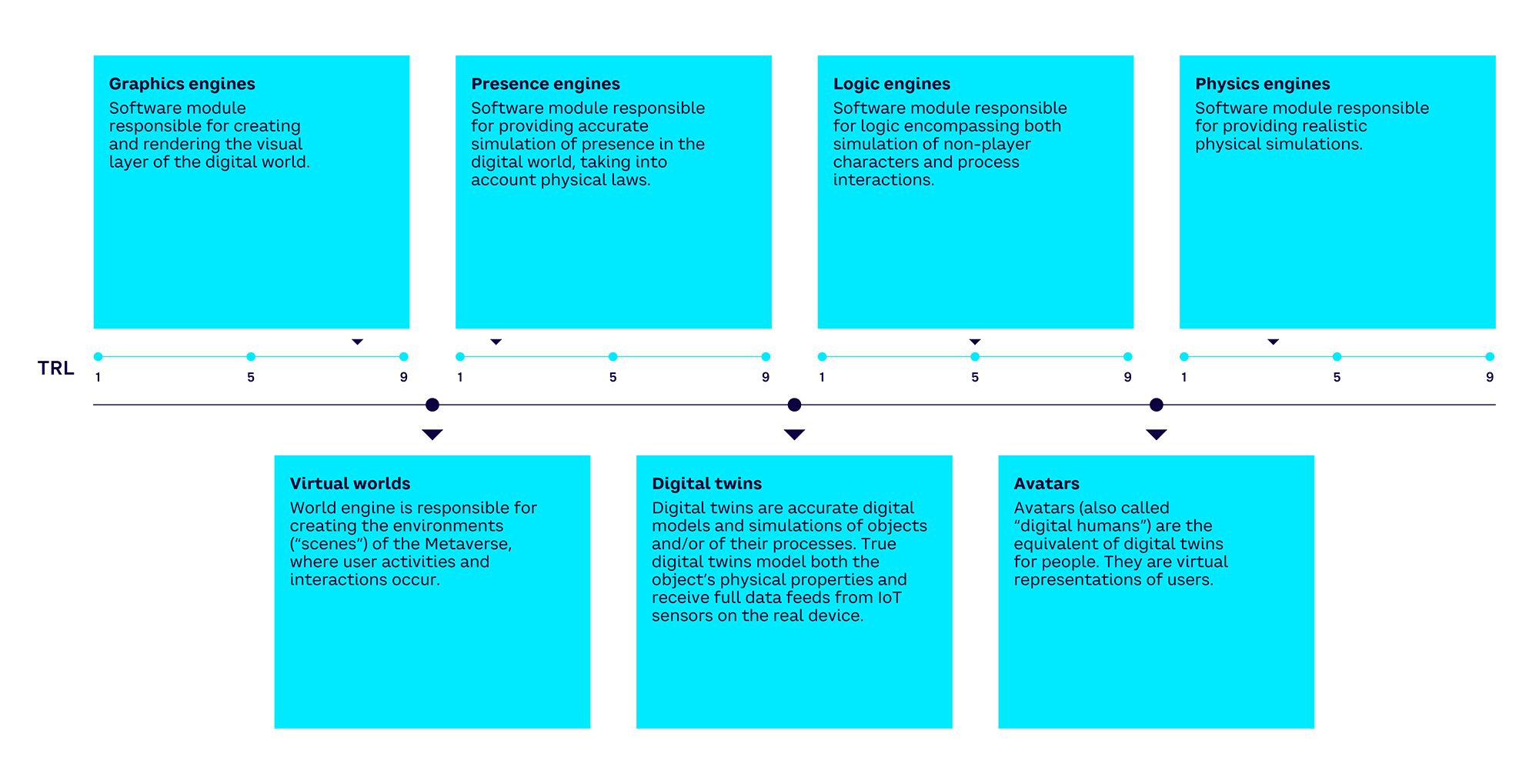

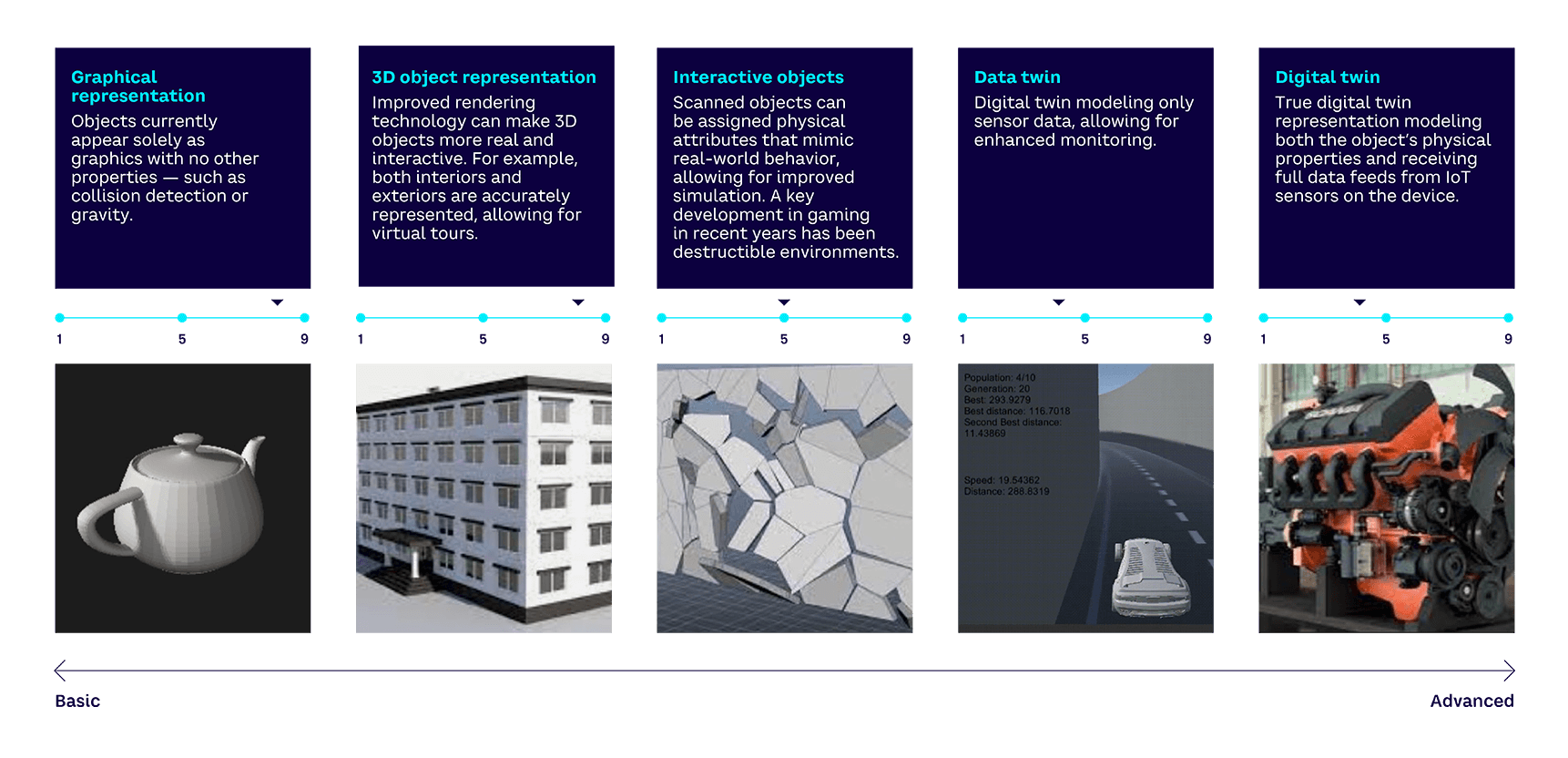

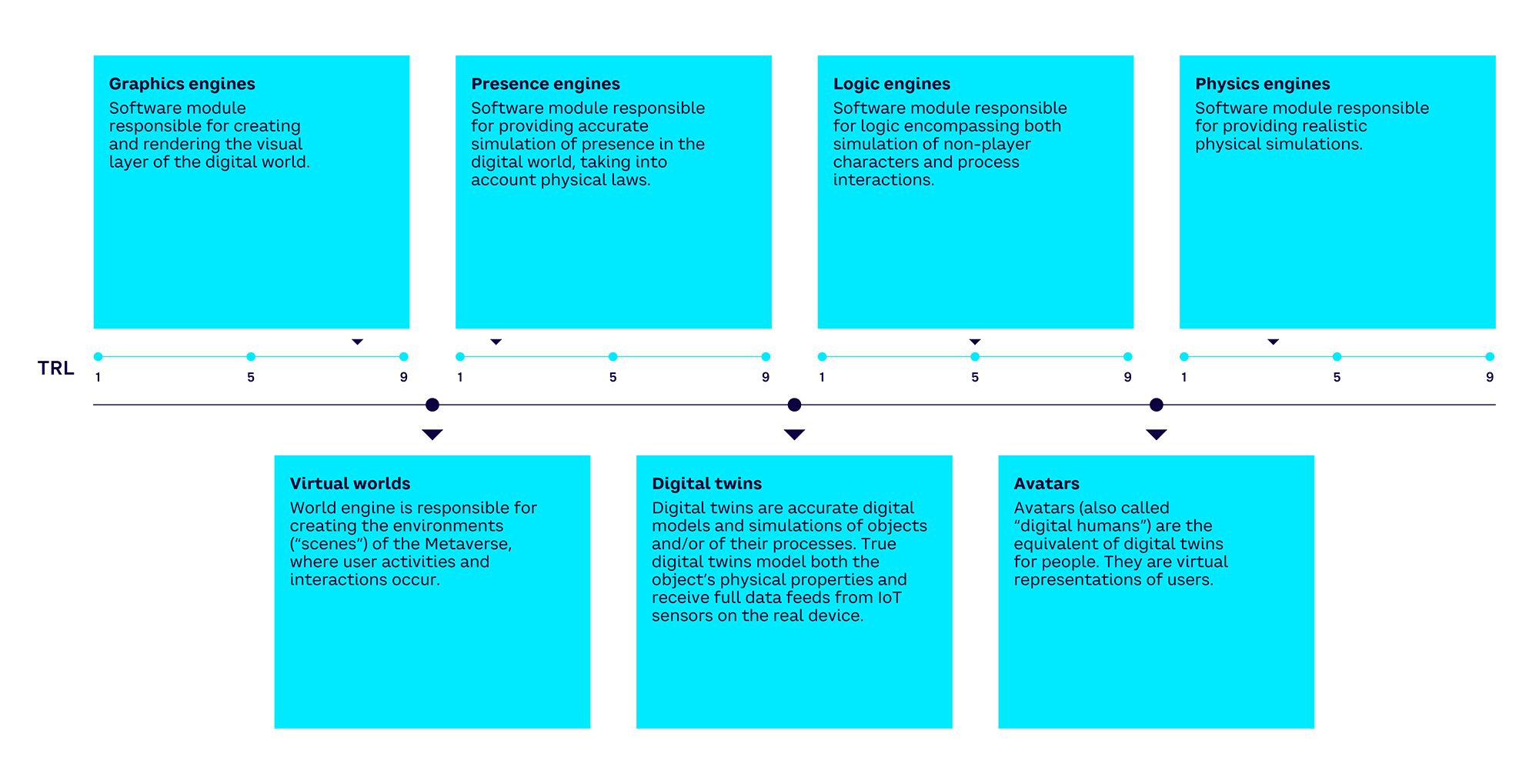

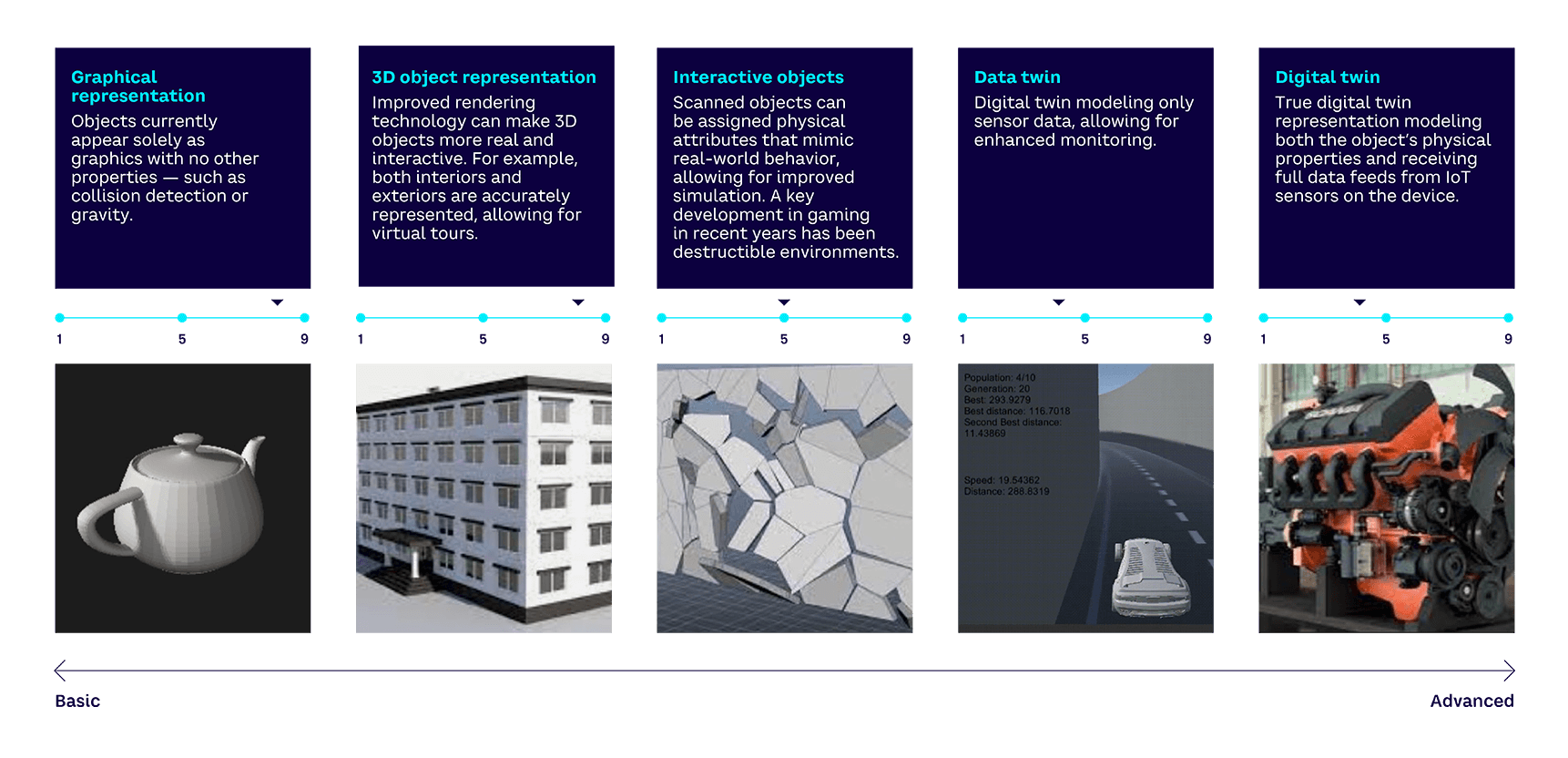

Layer 4: World engine — The engine

Layer 4, which we call “world engine,” corresponds to all the software allowing the development of virtual worlds, virtual objects, and their processes (digital twins) and virtual people (avatars or digital humans). The world engine (see Figure 14) will likely evolve from today’s game engines, such as Unity or Unreal, combined with physics engines such as that of Dassault Systèmes. They will thus have similar core architectures. The world engine is composed of four essential building blocks: graphics engine, presence engine, logic engine, and physics engine.

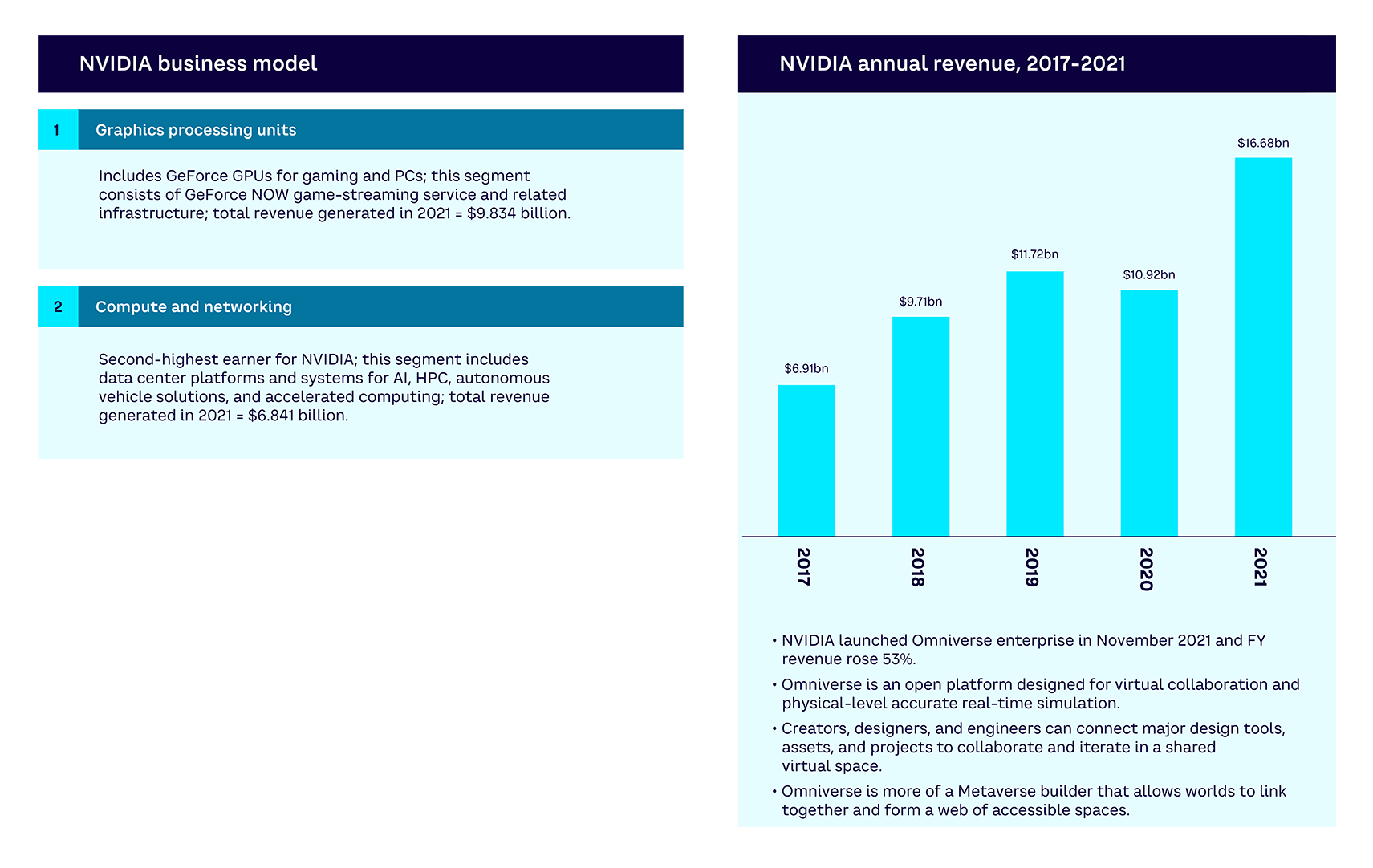

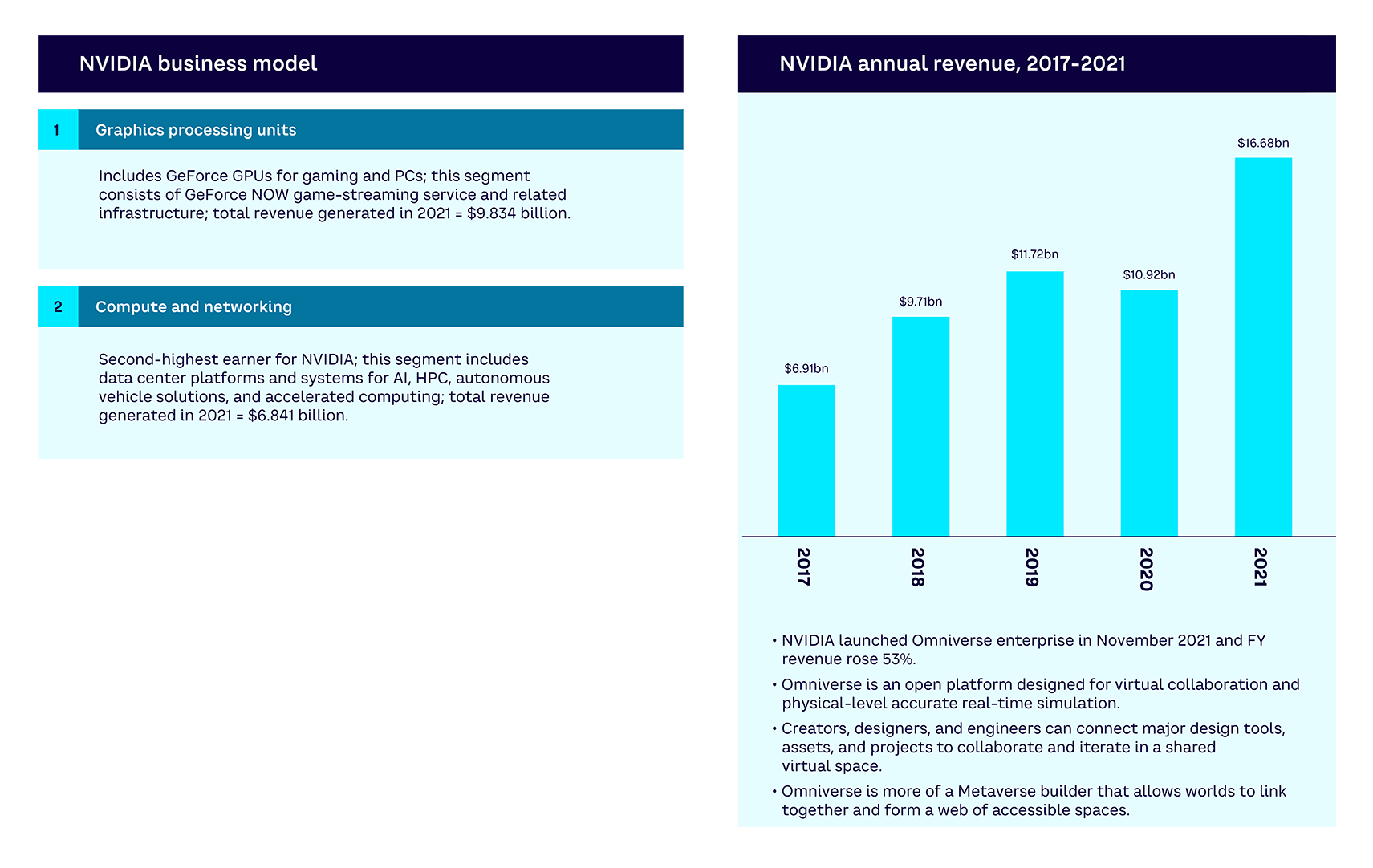

World engine technology development is still at a relatively early stage. It will take several years before the different engines within it combine to enable more complete realism. While there are already solutions for these components, there is still much development necessary and more convergence is expected between gaming and digital twin engines. Players include Dassault Systèmes, Epic Games, Nuke, NVIDIA, and Unity.

Graphics engine

The graphics engine is responsible for creating and rendering the visual layer of the virtual world. The key component of the Metaverse, the integrated world combining the physical and virtual worlds, will be based on graphical techniques, including the 3D construction of world scenes, digital items, non-player characters (NPCs), and player characters (avatars). Computer graphics engines are likely among the most advanced and mature components currently available for Metaverse projects: near-photorealistic 3D computer graphics can already be generated in real time for games. However, this requires state-of-the-art hardware that is not easily accessible to the average user and that consumes a relatively large amount of power (powerful desktop graphics processing units [GPUs] can draw over 500 watts at peak load), which limits mobility. Leaders in this space include Unity, Unreal Engine, CRYENGINE, 3ds Max, and Amazon Lumberyard.

Presence engine

The presence engine enables users to feel present in any location as though they were there physically. For example, an early form of presence technology can now be found in 4DX cinemas, which combine on-screen visuals with synchronized motion seats and environmental effects such as water, wind, fog, scent, snow, and more to enhance the on-screen action. Presence engines are currently in the early prototype phase, though significant work is happening in the field, especially in the area of haptic feedback, propelled by both gaming and training simulators.

Logic engine

The logic engine is responsible for managing interactions between various virtual entities. It encompasses both simulation of NPCs and process interactions. Logic engines currently are driven mainly by game development, as the most successful Metaverse-like environments currently in existence are computer games. In most cases, logic engines allow the attachment of additional “components” or “behaviors” to a 2D or 3D model. At this time, logic engine components are relatively simplistic and focus on highly specific behaviors such as the ability for a digital model to emit light, move, or play sounds. Today’s logic engines are highly deterministic and control the bits and pieces that attempt to give the digital model verisimilitude. In future, logic engines will likely have more advanced and free-form components based on AI and machine learning (ML). For example, AI components will synthesize speech and responses rather than relying on pre-recorded text. Leaders are much the same as those in the graphics engine space, and include Unity, Unreal Engine, CRYENGINE, 3ds Max, and Amazon Lumberyard.
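The component model described above, in which small deterministic behaviors are attached to a digital model, can be sketched as follows. This is a minimal illustration in Python, not the API of Unity, Unreal Engine, or any other engine; the class names (`Model`, `MoveBehavior`, `BlinkBehavior`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


class Behavior(Protocol):
    """Anything that can update a model by one time step."""

    def update(self, model: "Model", dt: float) -> None: ...


@dataclass
class Model:
    """A digital model whose behavior comes entirely from attached components."""

    name: str
    position: float = 0.0
    light_on: bool = False
    behaviors: List[Behavior] = field(default_factory=list)

    def attach(self, behavior: Behavior) -> "Model":
        self.behaviors.append(behavior)
        return self

    def tick(self, dt: float) -> None:
        # Deterministic: behaviors always run in the order they were attached.
        for behavior in self.behaviors:
            behavior.update(self, dt)


@dataclass
class MoveBehavior:
    """Moves the model along one axis at a constant speed."""

    speed: float

    def update(self, model: Model, dt: float) -> None:
        model.position += self.speed * dt


@dataclass
class BlinkBehavior:
    """Switches the model's light on for the first half of each period."""

    period: float
    elapsed: float = 0.0

    def update(self, model: Model, dt: float) -> None:
        self.elapsed += dt
        model.light_on = (self.elapsed % self.period) < self.period / 2


lamp = Model("lamp").attach(MoveBehavior(speed=2.0)).attach(BlinkBehavior(period=1.0))
lamp.tick(0.25)  # the lamp moves 0.5 units and its light is on
```

Each behavior here is narrow and fully scripted, which mirrors the current state of the art described in the text. An AI-driven component would implement the same `update` interface but decide its output from a learned model rather than a fixed rule.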

Physics engine

The physics engine allows the creation of realistic multi-physics modeling and simulations (e.g., concerning fluid dynamics or gravity). It describes the physical behavior of materials under actions such as heating, bending, or chemical reactions. The physics engine is responsible for providing an approximate simulation of physical systems that gives the virtual world the verisimilitude needed for immersion, and will handle tasks such as collision detection and body dynamics.

Physics engines tend to be broadly categorized as either high-precision or real-time, although the distinction is already becoming somewhat blurred due to better algorithms and the increase of computational power. High-precision engines are typically used to calculate very precise physics, such as fluid dynamics. Real-time engines, on the other hand, tend to have simplified algorithms and reduced accuracy but allow for real-time computation. Real-time computation is a key requirement for maintaining verisimilitude: if collision detection is computed too slowly, for example, objects will pass through each other and may then be repelled with an abnormal correction force when the computation catches up.
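The effect of slow collision detection can be illustrated with a one-dimensional toy example. In the sketch below, a point moves toward a thin wall. When positions are only checked at coarse time steps, the point can jump from one side of the wall to the other without ever being detected inside it. A swept test, which checks the whole path travelled during the step, is one common remedy. The function names and numbers are hypothetical and do not come from any particular engine.

```python
def discrete_hit(x: float, wall_min: float, wall_max: float) -> bool:
    """Check only whether the sampled position lies inside the wall."""
    return wall_min <= x <= wall_max


def swept_hit(x_old: float, x_new: float, wall_min: float, wall_max: float) -> bool:
    """Check whether the path travelled during the step overlaps the wall."""
    lo, hi = min(x_old, x_new), max(x_old, x_new)
    return lo <= wall_max and hi >= wall_min


def collides(x0: float, velocity: float, dt: float, steps: int,
             wall_min: float, wall_max: float, swept: bool) -> bool:
    """Advance a point in fixed time steps and report whether a hit is detected."""
    x = x0
    for _ in range(steps):
        x_new = x + velocity * dt
        if swept:
            hit = swept_hit(x, x_new, wall_min, wall_max)
        else:
            hit = discrete_hit(x_new, wall_min, wall_max)
        if hit:
            return True
        x = x_new
    return False


# A wall 0.1 units thick, and a point moving at 10 units per second.
WALL = (1.0, 1.1)
coarse_discrete = collides(0.0, 10.0, 0.5, 4, *WALL, swept=False)    # misses the wall
coarse_swept = collides(0.0, 10.0, 0.5, 4, *WALL, swept=True)        # detects the wall
fine_discrete = collides(0.0, 10.0, 0.005, 40, *WALL, swept=False)   # detects the wall
```

Shrinking the time step fixes the problem only by spending more computation per simulated second, which is the trade-off between accuracy and real-time performance noted above.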

Players in the scientific space are developing numerous physics engines for a wide variety of purposes, with each tending to focus on a particular high-precision physics challenge. From a Metaverse perspective, where at least the initial versions will likely be collaborative spaces where physics will be an approximation of reality sufficient to maintain realism, the usual game creation engines are key players in the space, including Unity, Unreal, CRYENGINE, Amazon Lumberyard, 3ds Max, and Dassault Systèmes.

Virtual worlds

Looking now at the output side of the world engine, a virtual world is a computer-simulated world that presents perceptual stimuli to users, allowing them to manipulate elements of the modeled world and thus experience a degree of presence. Communication between users can range from text, graphical icons, visual gesture, and sound to, more rarely, forms using touch, voice command, and balance senses.

While virtual worlds have made impressive steps forward in terms of immersivity, they currently require robust hardware and fast connectivity to operate effectively. Notable players in the space include IMVU, Kaneva, and Second Life.

“Virtual objects are more expensive than real ones because they give access to knowledge.”

— Pascal Daloz, COO, Dassault Systèmes

Digital twins

Digital twins create a virtual copy of a physical object, such as a machine. Sensors produce data about different aspects of the physical object’s performance, such as energy output, temperature, weather conditions, and more. This data is then relayed to a processing system and applied to the digital copy.

This virtual model can be used to run simulations, study performance issues, and generate possible improvements — all with the goal of generating valuable insights that can then be applied back to the original physical object.
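The loop described above (sensor data applied to the digital copy, then a simulation run on that copy to produce an insight for the physical object) can be sketched in a few lines. This is a deliberately simplified illustration: the asset, the metric name `temperature_c`, and the linear what-if model are all hypothetical assumptions, and real digital twins rely on far richer physical models.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SensorReading:
    """One measurement relayed from the physical object."""

    metric: str
    value: float


@dataclass
class DigitalTwin:
    """Virtual copy that mirrors the latest known state of a physical asset."""

    asset_id: str
    state: Dict[str, float] = field(default_factory=dict)
    history: List[SensorReading] = field(default_factory=list)

    def apply(self, reading: SensorReading) -> None:
        """Update the digital copy with a new sensor reading."""
        self.state[reading.metric] = reading.value
        self.history.append(reading)

    def simulate_temperature(self, load_increase_pct: float,
                             degrees_per_pct: float = 0.5) -> float:
        """What-if simulation using an assumed linear temperature response."""
        return self.state["temperature_c"] + load_increase_pct * degrees_per_pct


def recommend(twin: DigitalTwin, load_increase_pct: float, limit_c: float) -> str:
    """Turn a simulation result into an insight for the physical asset."""
    projected = twin.simulate_temperature(load_increase_pct)
    return "safe" if projected <= limit_c else "reduce load"


twin = DigitalTwin("pump-1")                          # hypothetical asset
twin.apply(SensorReading("temperature_c", 70.0))
advice = recommend(twin, load_increase_pct=20.0, limit_c=85.0)
```

The value of the twin lies in the last step: the what-if question is answered on the virtual copy, so the physical machine is never put at risk.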

While digital twins are increasingly being adopted, there is still significant development needed to fully model all properties of a complex object in the digital domain. Companies involved in the market include Ansys, Cosmo Tech, Dassault Systèmes, IBM, and Siemens.

Avatars

Avatars are developing toward becoming photorealistic 3D renditions of human beings in the virtual world that are nearly indistinguishable from the real thing. They rely on a complex combination of technologies for their functionality. These include AI to process input and provide feedback, natural language processing to understand voice commands, advanced 3D modeling to replicate expressions of human emotion with precision, and natural language generation so that the digital human can respond via voice.

While they already have business applications, such as acting as the face of customer experience chatbots, the introduction of truly realistic physical and mental simulations is still a long way in the future. Current challenges include, for example, achieving real-time response without latency and enabling avatar autonomy. There are also ethical issues associated with an avatar, which both is and isn’t the same thing as the real person it is representing. Companies active in the market include Banuba, Emova, Imverse, Soul Machines, Uneeq, and Unity.

Avatars can be projected into the Metaverse in one of two ways — either through real-time 3D video (which is bandwidth intensive) or through photorealistic models that only transfer changes (such as body movements) to the Metaverse, reducing the network capacity required. For example, Emova is working to deliver photorealistic 3D models that capture movement and also control lighting effects to enhance realism. The first target market is online fashion, enabling consumer avatars to digitally try on clothes or jewelry to get a realistic impression of how an item will look when worn. The aim is to reduce return rates (a third of clothes purchased online currently are returned), thus increasing efficiency and lowering environmental impacts.
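The second approach, transferring only changes rather than a full video stream, can be sketched as a simple pose difference. The example below assumes, purely for illustration, that an avatar pose is a mapping from joint names to rotation angles; the joint names and the tolerance value are hypothetical and do not describe Emova's or any other vendor's format.

```python
from typing import Dict, Tuple

Rotation = Tuple[float, float, float]
Pose = Dict[str, Rotation]


def pose_delta(previous: Pose, current: Pose, tolerance: float = 1e-3) -> Pose:
    """Return only the joints whose rotation changed by more than the tolerance."""
    delta: Pose = {}
    for joint, rotation in current.items():
        old = previous.get(joint)
        if old is None or any(abs(a - b) > tolerance for a, b in zip(old, rotation)):
            delta[joint] = rotation
    return delta


def apply_delta(pose: Pose, delta: Pose) -> Pose:
    """Rebuild the full pose on the receiving side from the last pose and a delta."""
    updated = dict(pose)
    updated.update(delta)
    return updated


previous_pose: Pose = {
    "head": (0.0, 0.0, 0.0),
    "left_elbow": (10.0, 0.0, 0.0),
    "right_elbow": (10.0, 0.0, 0.0),
}
current_pose: Pose = {
    "head": (0.0, 0.0, 0.0),
    "left_elbow": (25.0, 0.0, 0.0),   # only this joint moved
    "right_elbow": (10.0, 0.0, 0.0),
}

to_send = pose_delta(previous_pose, current_pose)
rebuilt = apply_delta(previous_pose, to_send)
```

Here only one joint out of three is transmitted, and the receiver can still reconstruct the complete pose. The saving grows with the number of joints that stay still between frames.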

While certain areas, such as photorealism and movement capture, are now mature, techniques such as emotion capture and avatar autonomy are not. This limits the usefulness of avatars for applications such as online meetings in the Metaverse, which are also currently held back by a lack of sufficient bandwidth.

Layer 5: Infrastructure — The piping

Layer 5, which we have named “infrastructure,” corresponds, as its name suggests, to the physical infrastructure — network, computing power, and storage — that enables the real-time collection and processing of data, communications, representations, and reactions (see Figure 16).

Infrastructure is in a sense the “piping” that enables achievement of the three essential properties of the Metaverse described earlier: immersion, interaction, and persistence.

Infrastructure is probably the least interesting layer for the nonexpert, so it is not often discussed in the media. However, infrastructure is critically important to the development of the Metaverse. The infrastructure we have defined does not yet exist, and probably won’t be realized for around a decade due to the technical challenges involved.

A localized high-bandwidth, low-latency infrastructure is needed, requiring development in gigabit speeds, millisecond latency, and local and cloud compute. To achieve anything close to what Metaverse advocates promise, most experts believe nearly every kind of chip will have to be more powerful by an order of magnitude than it is today.

This means there are huge opportunities for players at the infrastructure level. For example, some estimates suggest that the market will be worth more than $700 billion for telecom operators by 2030. Some relevant telcos and local infrastructure players have already entered the Metaverse by themselves or through different partnerships, including e&, MTN Group, SK Telecom, Telefónica, T-Mobile, Turkcell, Verizon, and Vodafone.

Areas where infrastructure will need to be expanded include:

- Local computing power. Significant local computing power is needed to achieve an immersive VR/AR experience, and an immense improvement in performance will be required to reach the levels the Metaverse demands.

- Communications. New, low-latency, near-instantaneous communications methods will need to be developed to achieve the interaction levels needed for truly immersive Metaverses to exist. Networks, including Internet backbones, will require perhaps an order-of-magnitude increase in throughput to handle the new data streams.

- Cloud computing. Current massively multiplayer games have limited populations or offer very limited simulation of specific aspects of life. Cloud computing farms will need an order-of-magnitude increase in performance to accommodate the needs of the Metaverse and to ensure the world is always “on.”

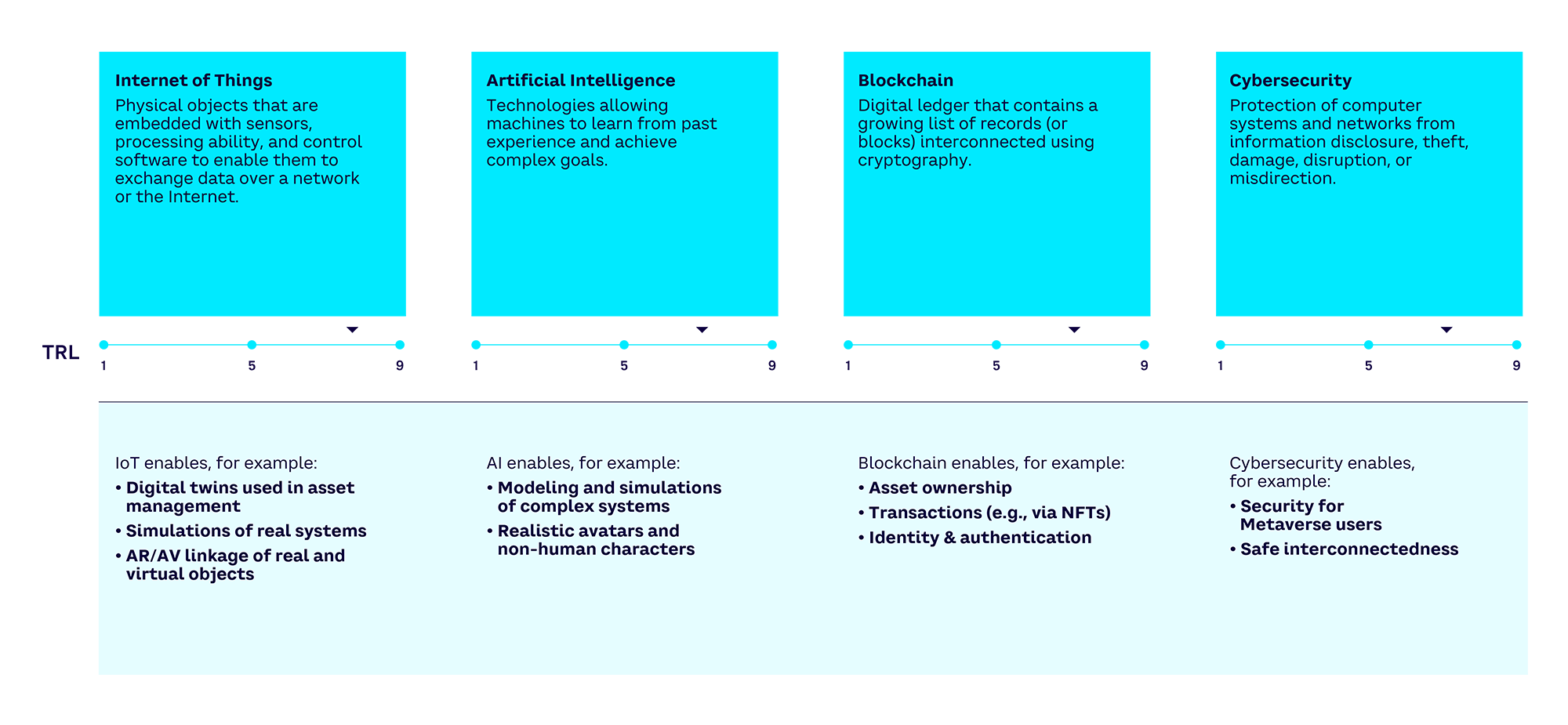

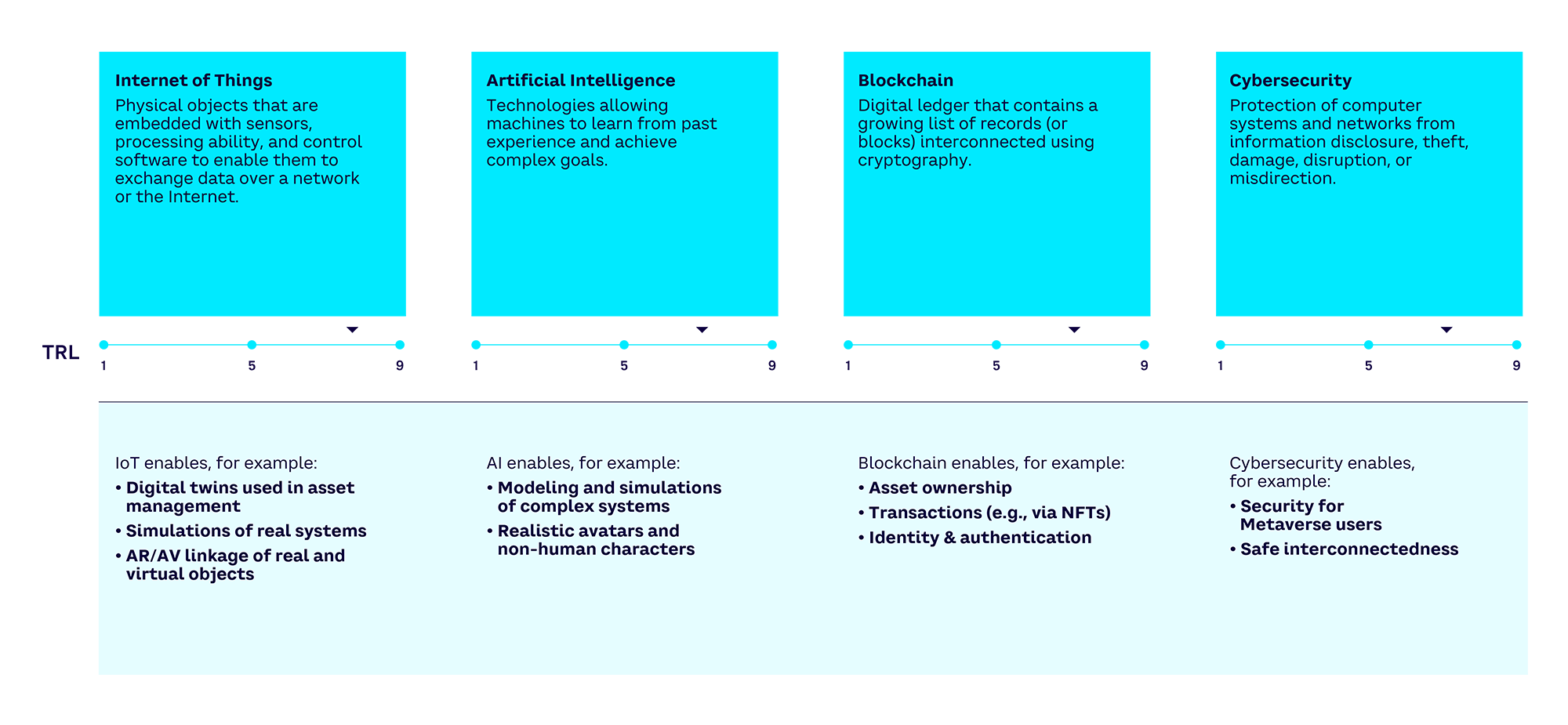

Layer 6: Key enablers — Oiling the wheels

Layer 6, which we call “key enablers,” brings together a set of technologies, mostly software, that is essential to the proper functioning of the other layers. This sixth and final layer may be thought of as the oil that lubricates the wheels. It brings together IoT, blockchain, cybersecurity, and AI (see Figure 17). The latter, for example, is necessary for the automatic generation of digital twins or for the creation of realistic avatars with realistic attitudes. The technologies below are already mature in many existing applications but will require further development to enable new Metaverse applications. The TRL for each type of technology shown in Figure 17 is therefore a simplification.

Internet of Things

IoT refers to physical things that are embedded with sensors, processing ability, and control software to enable them to exchange data over a network or the Internet.

IoT enables, for example:

- Digital twins. Allows complete end-to-end asset management of interconnected devices.

- Simulation. Enables customers to create and simulate hundreds of virtual connected devices, without having to configure and manage physical devices.

- AR/AV. Allows real data to link virtual and real objects in different applications along the MR spectrum.

The global IoT market size has been estimated at $750 billion in 2020 and is forecast to reach $4,500 billion in 2030, with a CAGR of 20%.

Artificial intelligence

AI refers to technologies allowing machines to learn from past experience and achieve complex goals and enables, for example:

- Simulation of digital twins. Allows better modeling and simulation of complex systems such as industrial equipment or living entities, leveraging larger amounts of heterogeneous data that could not be processed manually.

- Realistic avatars. Using techniques such as generative adversarial networks (GANs), improves the realism of avatars (in both representation and behavior).

- Computer agents. Mimicking the behavior of nonhuman characters.

The global AI market size has been estimated at $94 billion in 2021 and is forecast to reach $1,000 billion in 2028, with a CAGR of 40%.

Blockchain

Blockchain is a digital ledger that contains a growing list of records (or blocks) interconnected using cryptography. Blockchain enables, for example:

- Asset ownership. Its immutability allows for a record of NFTs within Metaverse economies as proof of digital asset ownership, allowing quick, efficient, and cost-effective transactions.

- Identity and authentication. The technology can effectively keep track of digital identities, bringing trust to identity challenges.
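The idea of records "interconnected using cryptography" can be illustrated with a toy hash chain, in which each block stores the hash of the previous one so that altering an earlier ownership record invalidates everything after it. This sketch uses Python's standard `hashlib` and `json` modules and deliberately omits what makes a real blockchain trustworthy in practice (distributed consensus, digital signatures, networking). The asset and owner names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: Dict[str, Any], previous_hash: str) -> str:
    """Hash a block's contents together with the hash of its predecessor."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: List[Dict[str, Any]], data: Dict[str, Any]) -> Dict[str, Any]:
    """Add a record to the end of the chain, linking it to the previous block."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    block = {
        "index": index,
        "data": data,
        "previous_hash": previous_hash,
        "hash": block_hash(index, data, previous_hash),
    }
    chain.append(block)
    return block


def is_valid(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash and link to detect tampering."""
    expected_previous = GENESIS_HASH
    for position, block in enumerate(chain):
        if block["index"] != position or block["previous_hash"] != expected_previous:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["previous_hash"]):
            return False
        expected_previous = block["hash"]
    return True


ledger: List[Dict[str, Any]] = []
append_block(ledger, {"asset": "sneaker-001", "owner": "alice"})
append_block(ledger, {"asset": "sneaker-001", "owner": "bob"})
ledger_ok = is_valid(ledger)
```

If the owner in the first block is later edited, `is_valid` returns `False`, because the stored hash no longer matches the block's contents. This tamper evidence is the property the text refers to as immutability.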

The global blockchain market size has been estimated at $4.7 billion in 2021 and is forecast to reach $165 billion in 2029, with a CAGR of 55%.
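The growth rates quoted in this section for IoT, AI, and blockchain can be cross-checked against their start and end values using the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. The short sketch below applies it to the figures given in the text. The dictionary layout is simply an illustrative choice.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, end value, and period."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start value and number of years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# (start in $ billion, end in $ billion, number of years), as quoted in the text.
forecasts = {
    "IoT": (750.0, 4500.0, 2030 - 2020),
    "AI": (94.0, 1000.0, 2028 - 2021),
    "Blockchain": (4.7, 165.0, 2029 - 2021),
}

implied_growth = {
    name: cagr(start, end, years) for name, (start, end, years) in forecasts.items()
}

for name, rate in implied_growth.items():
    print(f"{name}: implied CAGR of {rate:.1%}")
```

The implied rates come out close to the rounded 20%, 40%, and 55% figures, so the quoted start values, end values, and growth rates are mutually consistent.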

Cybersecurity

Cybersecurity refers to the protection of computer systems and networks from information disclosure and theft of or damage to hardware, software, or electronic data, as well as from the disruption or misdirection of the services they provide. Cybersecurity enables, for example:

- Security. Must be guaranteed before any platform can attract users.

- Interconnectedness. Essential to allow the Metaverse to offer new, secure paths for the connections between humans that may be required to enable new applications and capabilities.

INTERLUDE#2 — Building the metaverse

With this work, I sought to represent the complexity of the Metaverse with various intricacies: the intricacy of both the physical and the real, the intricacy of both the hardware and the software, and finally the intricacy of the six layers of the architectural model of the Metaverse developed by Arthur D. Little.

— Samuel Babinet, artist


3

Proto-metaverses: Virtual worlds for real economy, today

Sizing and forecasting the Metaverse market is challenging because it depends on what is included in the calculations. Some analysts project a market as large as $5 trillion by 2030, based on assumptions around the proportion of the global digital economy that will shift toward the Metaverse. These headline-grabbing numbers are very speculative. Instead, we propose a more conservative approach that suggests new markets, excluding enabling technologies such as IoT, AI, and blockchain, in the hundreds of billions of dollars by 2030 with a 30%-40% annual growth rate. Even though the Metaverse, as envisioned and defined previously, is not yet a reality, a large number of business opportunities already exist and can be seized in today’s proto-metaverses. Just like the Internet, in considering the opportunities it is useful to segment the Metaverse market into three types: consumer, enterprise, and industrial.

Market: Very significant, but consider multi-trillion-dollar forecasts with caution

Several analysts have already put numbers on the Metaverse’s market size and dynamics. Some, such as Citibank, estimate the market size in a top-down manner, producing very large figures.[16] For their predictions, they considered overall global GDP, the percentage of GDP attributable to the digital economy, and the percentage of the digital economy attributable to the Metaverse. Assuming that the digital economy makes up 25%-30% of global GDP and that 10%-50% of this is attributable to the Metaverse gives a forecast market size in the range of $2-$20 trillion. However, this figure includes supporting digital infrastructure and enabling technologies, which, as we explained above, will not be driven or used solely by the Metaverse.
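The top-down logic can be illustrated with a short calculation. This is a minimal sketch, not the analysts' actual model: the two share ranges are taken from the text, while the GDP figure (`ASSUMED_GDP_TRILLIONS`) is a hypothetical round number chosen only to make the arithmetic concrete. Multiplying the shares shows that the Metaverse would represent between 2.5% and 15% of global GDP, so the width of the forecast range follows directly from the width of the input assumptions.

```python
# Sketch of a top-down market sizing: GDP x digital share x Metaverse share.
# The share ranges come from the text; the GDP value is an illustrative assumption.

def top_down_range(gdp, digital_share, metaverse_share):
    """Return the (low, high) market size for (low, high) share ranges."""
    low = gdp * digital_share[0] * metaverse_share[0]
    high = gdp * digital_share[1] * metaverse_share[1]
    return low, high

DIGITAL_SHARE = (0.25, 0.30)    # digital economy as a share of global GDP (from the text)
METAVERSE_SHARE = (0.10, 0.50)  # Metaverse as a share of the digital economy (from the text)
ASSUMED_GDP_TRILLIONS = 100.0   # hypothetical placeholder, not a figure from this report

low, high = top_down_range(ASSUMED_GDP_TRILLIONS, DIGITAL_SHARE, METAVERSE_SHARE)
print(f"Implied market size: ${low:.1f}-${high:.1f} trillion")
```

Changing `ASSUMED_GDP_TRILLIONS` to a projected 2030 value scales both ends of the range proportionally, which is one reason published top-down estimates differ so widely.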

Others, such as McKinsey, get to a $5 trillion market in 2030 with a more bottom-up approach.[17] Their approach is based on assumptions about future use cases. In practice, a large proportion of these use cases represent a shift in the already rapidly growing digital economy from the classical Web to the Metaverse, rather than being genuinely new market space — much in the same way that a very significant part of the digital economy shifted from computers to smartphones.

The point is not to claim that one approach is better than the other, but to stress the fact that forecasting the future size of the Metaverse market accurately is difficult for three main reasons:

- Scope. Delivering the Metaverse will require extensive and expensive digital infrastructure (such as high-speed, high-capacity networks) to be in place. However, these enabling technologies will not be driven by the Metaverse alone. In other words, sizing the market depends on what we decide to include in its scope, which is somewhat arbitrary.

- Lack of maturity. As with any immature market, predicting when (or if) growth will occur is hard. For example, when will consumer demand reach the inflection point at which it starts to grow exponentially?

- Substitution. Some market activity in the Metaverse will replace activity that would otherwise have taken place in the conventional digital economy, and so does not represent new growth.

Given these factors, our analysis takes a cautious, low-end view: we estimate that the current Metaverse market, excluding infrastructure and enabling technologies, is worth $50 billion.

These numbers are from ADL analysis based on recent credible forecasts for AR, VR, and MR software and hardware markets across multiple consumer, enterprise, and industrial segments. Taking into account the current technological challenges that still need to be overcome, we have conservatively assumed 10%-30% new market space created by further progress in Metaverse adoption up to 2025, over and above recent forecasts. Importantly, these figures exclude revenues from the new digital infrastructure and enabling technologies such as blockchain, AI, and IoT required for Metaverse growth.

A conservative forecast suggests that the market will increase to around $110-$125 billion by 2025 (see Figure 18), and we expect it could reach around $500 billion by 2030, assuming linear growth.
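One way to sanity-check the 2025 and 2030 figures is to compute the compound annual growth rate they imply and compare it with the 30%-40% annual growth rate cited at the start of this chapter. The sketch below uses the standard CAGR formula. Taking the midpoint of the 2025 range is an illustrative choice of ours, not something stated in the report.

```python
# Implied compound annual growth rate (CAGR) between two forecast points.
# Values are in $ billions and come from the text; the midpoint is our assumption.

def implied_cagr(start_value, end_value, years):
    """CAGR = (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

mid_2025 = (110 + 125) / 2           # midpoint of the $110-$125 billion range
rate = implied_cagr(mid_2025, 500, 2030 - 2025)
print(f"Implied 2025-2030 CAGR: {rate:.1%}")

# The same check for both ends of the 2025 range.
for start in (110, 125):
    print(f"From ${start} billion: {implied_cagr(start, 500, 5):.1%}")
```

Under these inputs the implied rate sits inside the 30%-40% band for both ends of the 2025 range.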

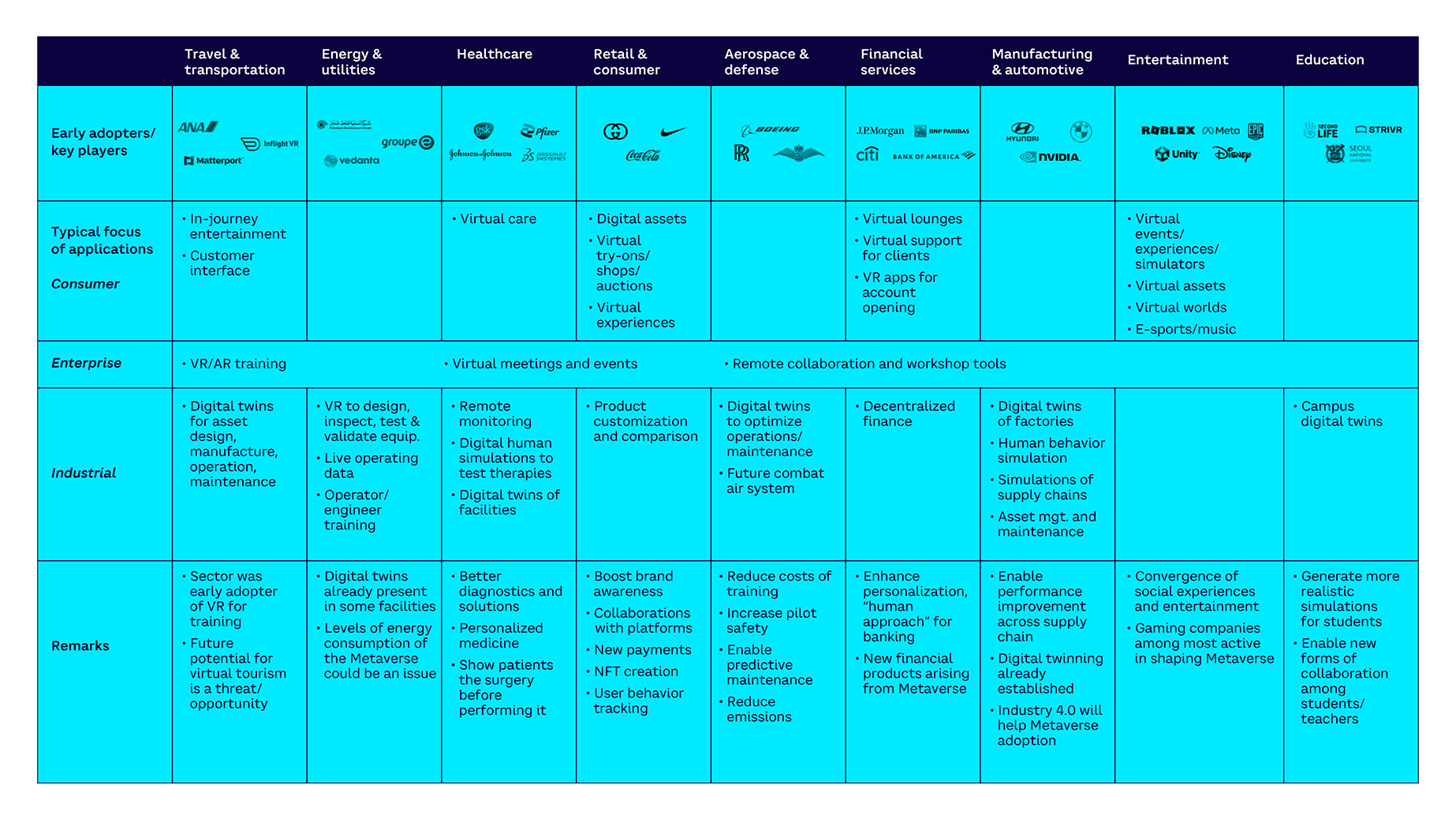

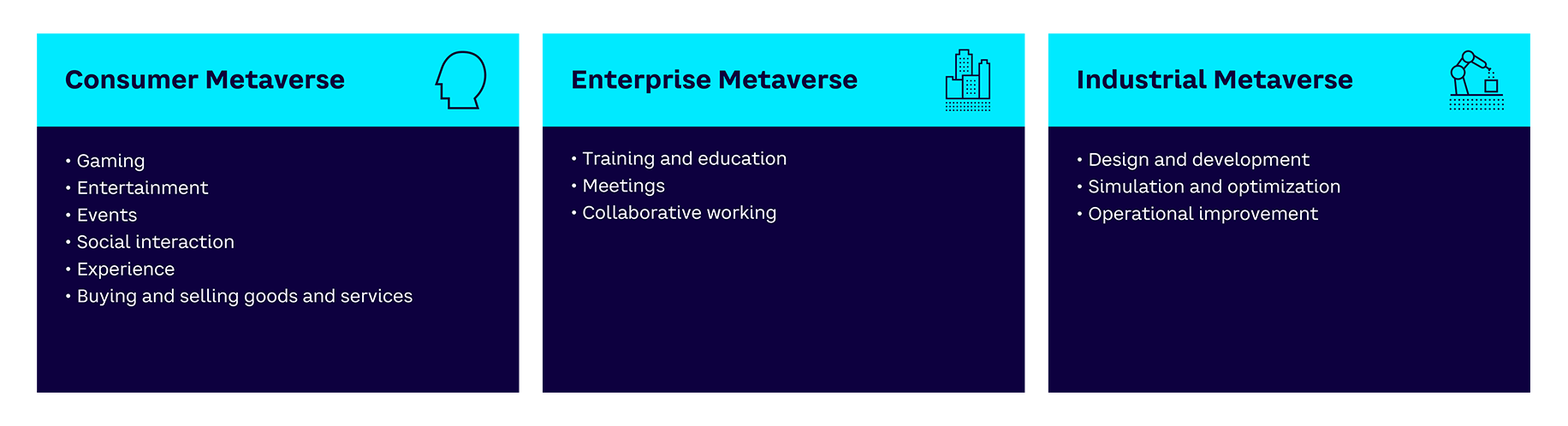

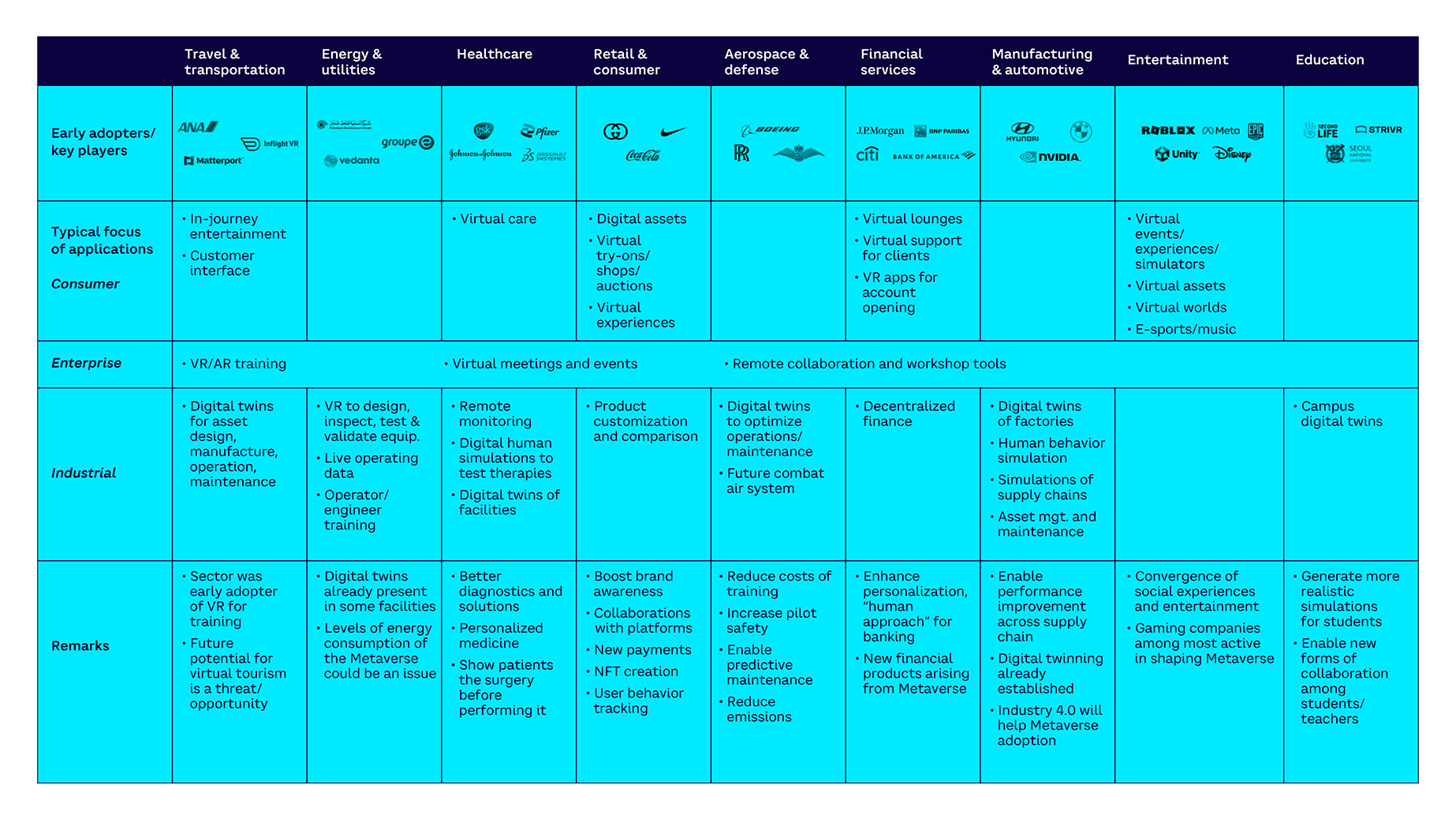

Three types of Metaverse: Consumer, enterprise, & industrial

In this section we focus on the experience continuum — the layer that contains new usages and business models across virtuality and reality. As we mentioned before, these applications can broadly be split into three areas (see Figure 19):

- Consumer Metaverse. Referring to all applications and experiences designed for and accessed by individual consumers.

- Enterprise Metaverse. Referring to non-industry-specific applications used across businesses for interaction. These are driven mainly by the need for corporate collaboration among employees.

- Industrial Metaverse (including concepts such as digital twins). Focused on technical collaboration among employees and machines. These applications are often industry- or business-specific.

Many real applications for the Metaverse already exist in most sectors for consumers, enterprises, and industry. However, these are currently implemented as proto-metaverses — the walled gardens we referred to earlier. Additionally, there are current infrastructure opportunities. We have shared a range of current use case examples in Appendix 1. Here, we share a topline summary with some illustrative examples from across different industries.

Current opportunities: Proto-metaverses across all industries

Consumer Metaverse

The consumer Metaverse provides many of the opportunities that first come to mind, given the Metaverse’s partial origins in the gaming industry. There are opportunities across virtually every consumer sector, for example:

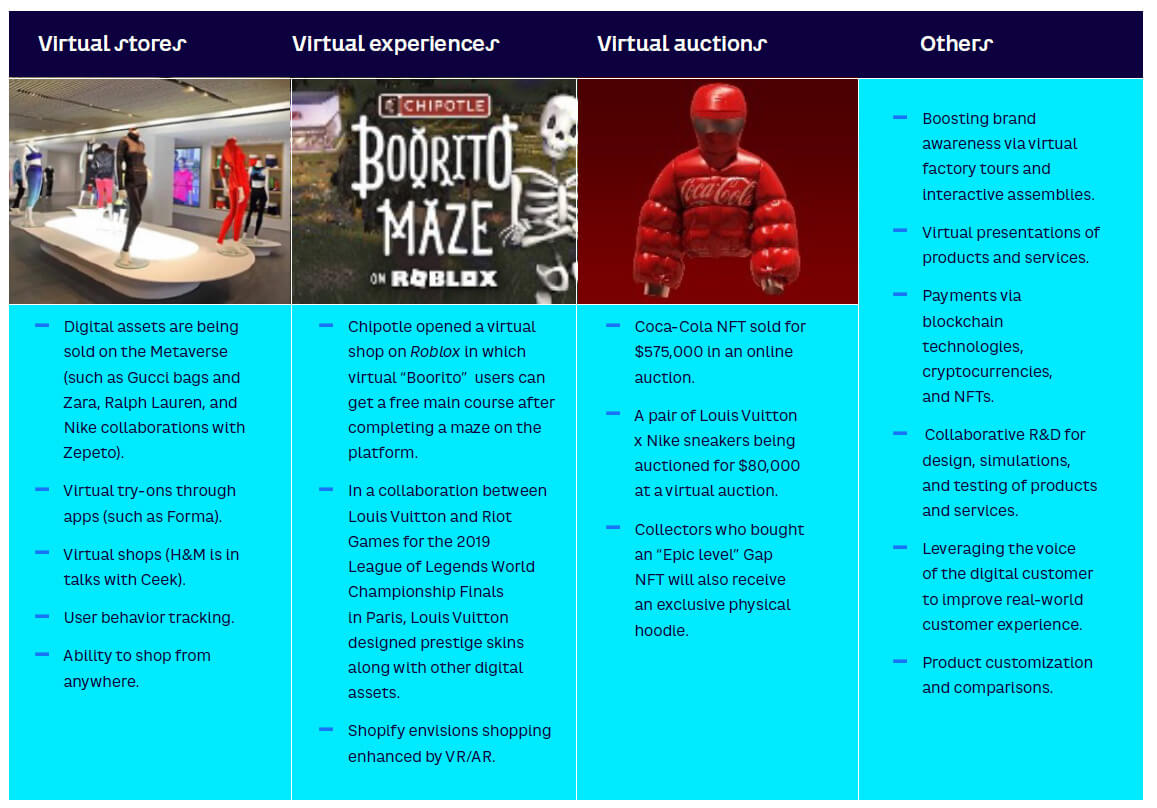

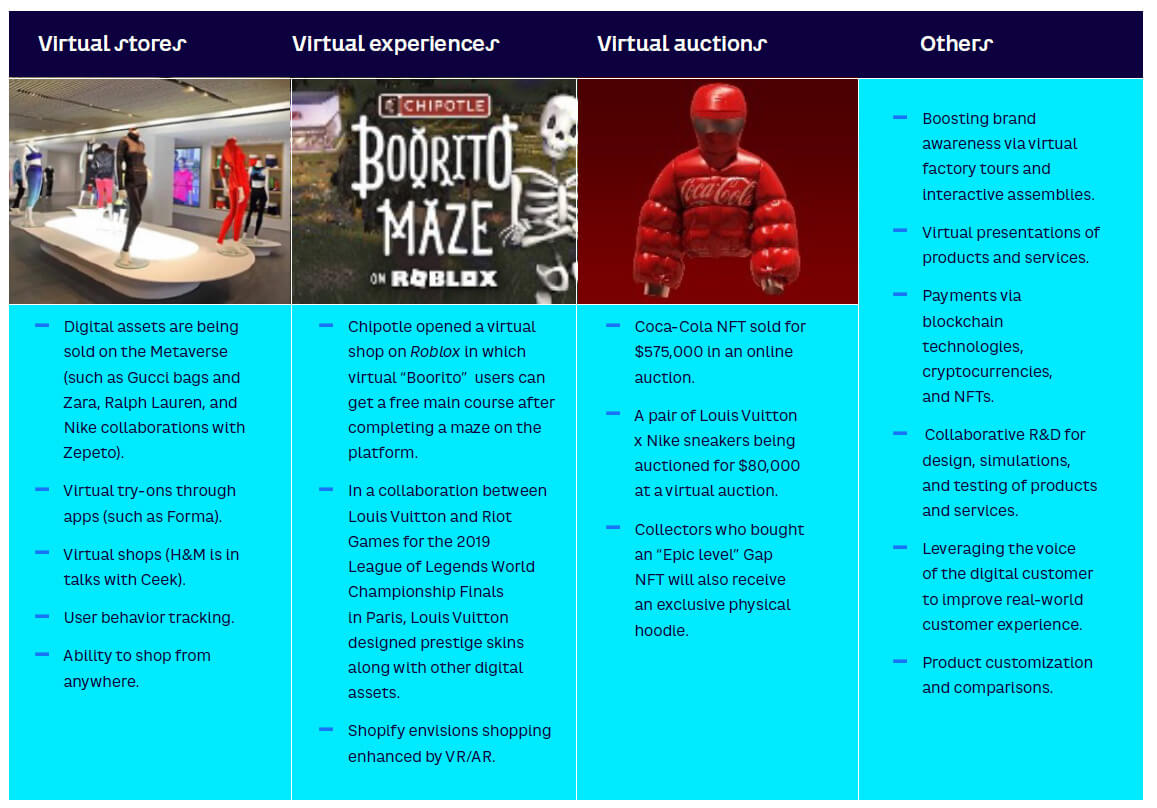

Retail and consumer goods:

- Digital assets — including branded assets, virtual-only assets, replicas of physical assets, or add-ons.

- Virtual try-ons/shops/auctions/retail experiences — aimed at enhancing consumer experience, engagement, loyalty, touchpoints, and brand awareness.

- New payment models.

- Enhanced product customization and comparison through digital modeling.

- Improved customer tracking.

Entertainment:

- Virtual events/experiences/simulators — a vast range of applications that could be further enabled by new HMI technologies, which would allow for additional merging of gaming/entertainment and social/collaborative applications.

- Digital assets — building on the existing global digital entertainment market.

- Virtual worlds/virtual tourism — development of what are currently “gaming-only” opportunities into what could be an almost endless array of virtual experiences.

- E-sports/music — new opportunities for sports and music content creation, and new ways for consumers to virtually attend and participate in sports and music events.

Travel:

- In-journey entertainment — new immersive entertainment opportunities (see above).

- Customer interface enhancement — virtual interactions and facilities to revolutionize the customer experience along all stages of the journey from pre-travel to post-arrival.

Financial services:

- Virtual support for clients — enhancing customer engagement, personalization, and quality of interaction along the customer journey.

Healthcare:

- Virtual healthcare provision — enhanced “telecare” offerings for patient consultation, diagnosis, and treatment from a distance and from virtual hospitals.

Enterprise Metaverse

This category includes nonspecialist virtual training courses, virtual meeting and event tools, and remote collaboration and workshop tools. The category is of course already well established in the 2D environment, although technical shortcomings still prevent more widespread application in truly immersive environments. Improvements in the quality of the immersive experience could lead to a step change in the adoption of enterprise tools.

Industrial Metaverse

The industrial category has one of the longest histories and is already extensive in scale. Examples of typical applications include the following:

Manufacturing:

- Digital twins of factories, plants, and other operational facilities — used to enhance and optimize design, operations, and maintenance.

- Human behavior simulation — integrating realistic human behavior models into digitalized manufacturing process models.

- Simulations of complex supply chains — the ability to model entire supply chain networks, from suppliers to end customers, in real-time virtual environments that support collaboration and continually balance supply and demand.

- Asset management and maintenance — tools to enhance and optimize asset management and maintenance, enabled by Industry 4.0 technologies such as IoT, AI, ML, and AR.

Travel and transport:

- Digital twins of assets and infrastructures — as per asset management and maintenance, above.

- Asset design, manufacture, operation, and maintenance — tools to enable enhanced design of assets such as complex travel infrastructure, integrated mobility systems, etc.

Healthcare:

- Remote monitoring of patient health conditions.

- Digital human simulations to test therapies — reducing cost and improving safety.

- Digital twins of manufacturing facilities — as with manufacturing, above.

- Better diagnostics and solutions — leveraging “in silico” approaches for drug discovery and development in a digital environment.

Energy and utilities:

- Design, inspection, testing, and validation of equipment — using digital twins and models, and AR to provide real-time data on asset conditions, etc.

- Modeling and visualization of operating data — using virtual 3D simulations to enhance the ability to optimize and make decisions based on complex and changing data.

- Operator/engineer training — virtual training environments.

Aerospace and defense:

- Digital twins to optimize operations and maintenance — as above.

- Future combat air systems — combining manned and unmanned systems.

Financial services:

- Decentralized finance approaches.

- New financial products — new financial and payment models to suit the emerging Metaverse economy.

- New security approaches — suitable for the virtual world.

Education:

- Campus digital twins — extending the concept of virtual training environments.

- More realistic simulations — to enhance learning.

- New forms of collaboration — among students and teachers.

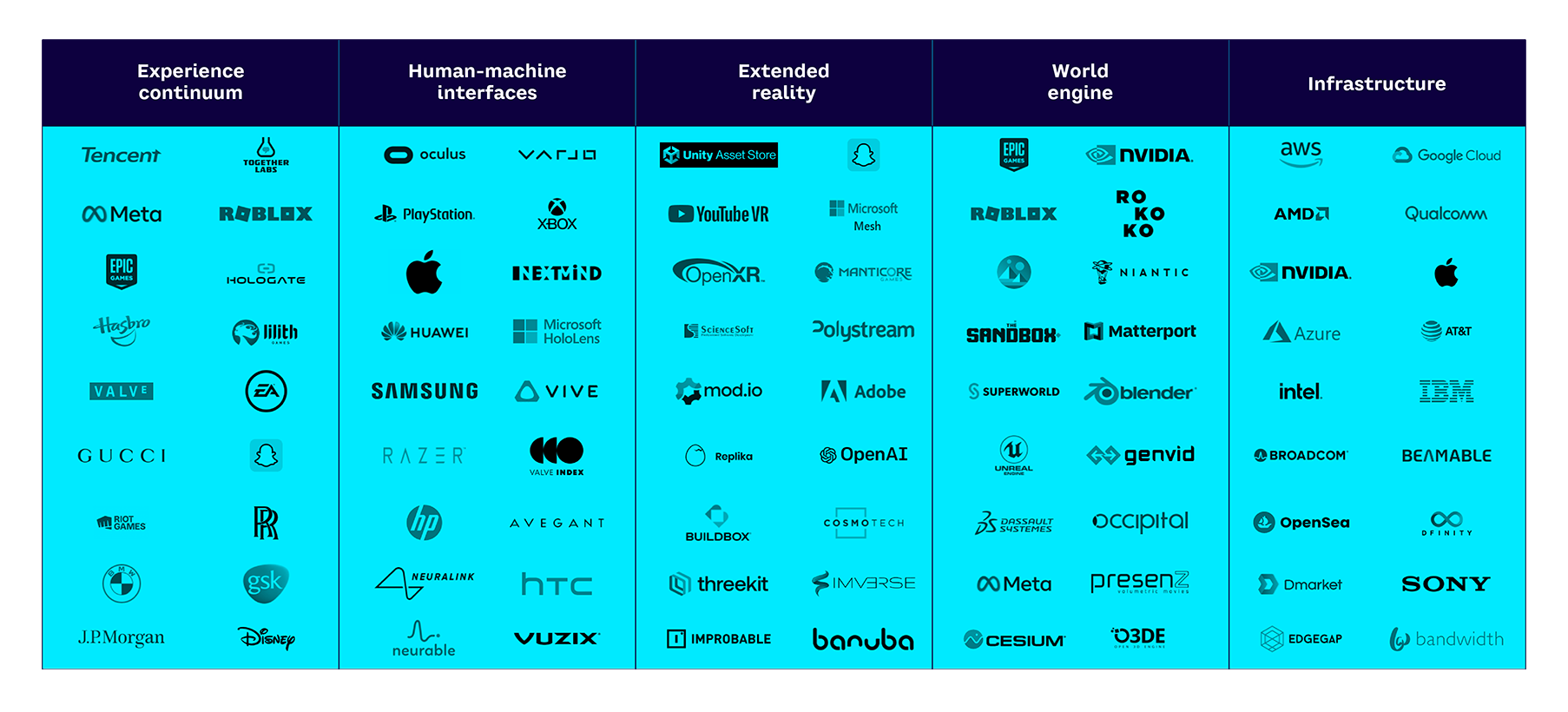

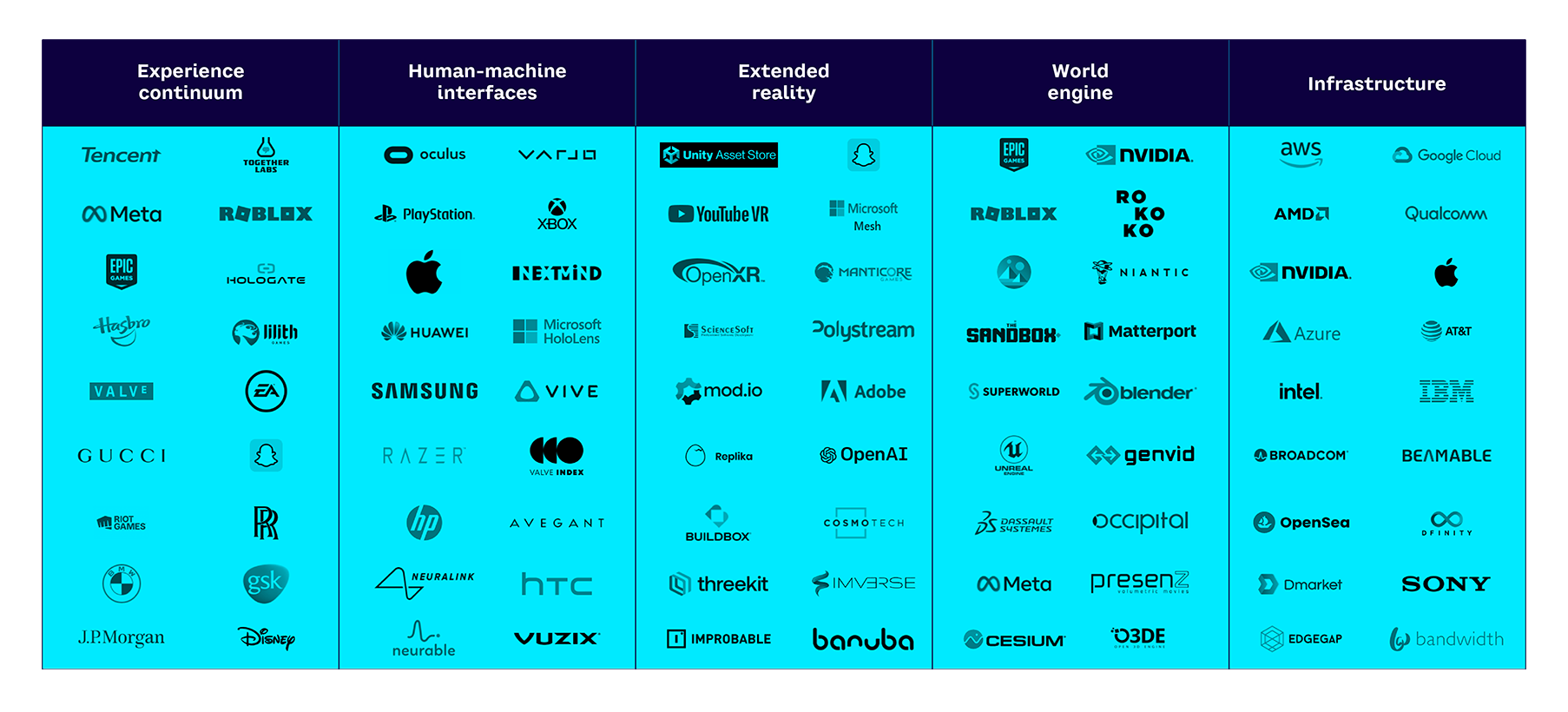

Key players in the Metaverse

There are perhaps 100 companies that are prominent today in shaping the future of the Metaverse, although the total number of companies involved is much greater. Figure 20 shows a selection across the top five layers of the Metaverse framework.

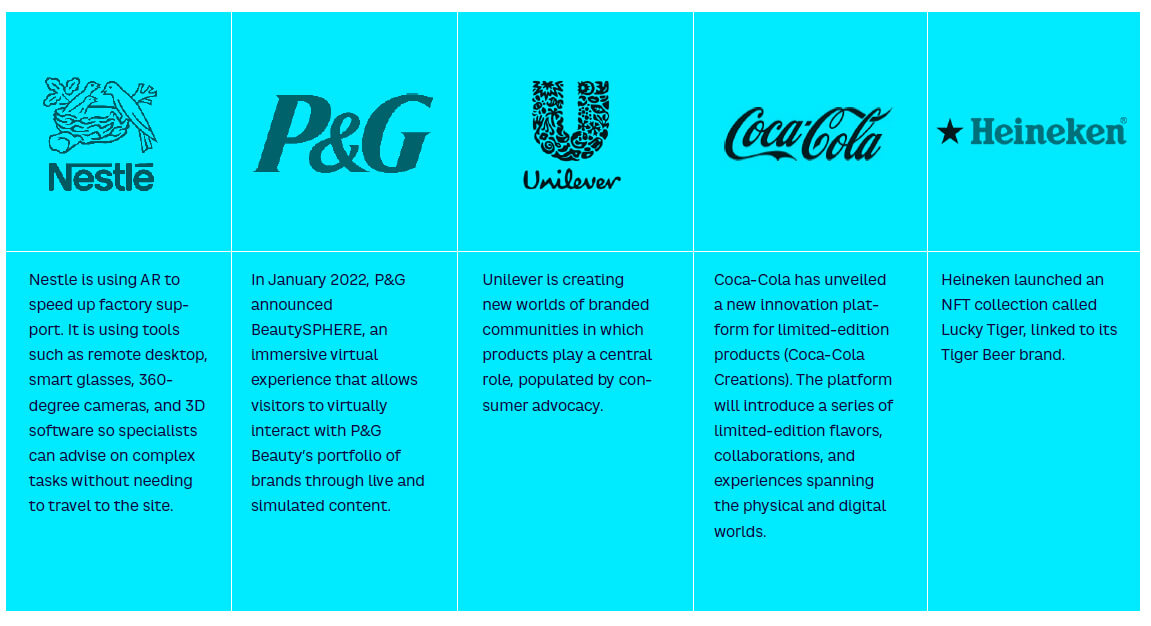

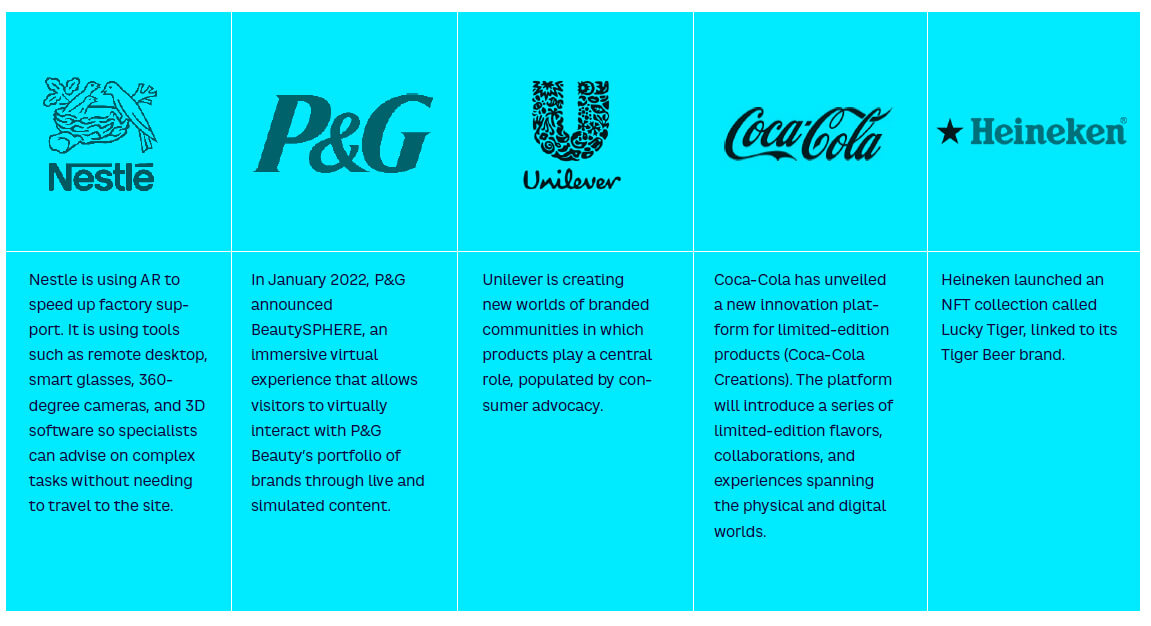

The large gaming companies such as Roblox, Epic Games, and Niantic have been leading the way in shaping the Metaverse, together with the tech giants such as Meta and Microsoft. At each layer of the Metaverse architecture, multiple large and well-funded players are active, as well as hundreds of smaller players. Companies investing in Metaverse activities are to be found in nearly every sector, from fast food to football.

By way of illustration, selected key players include the following:

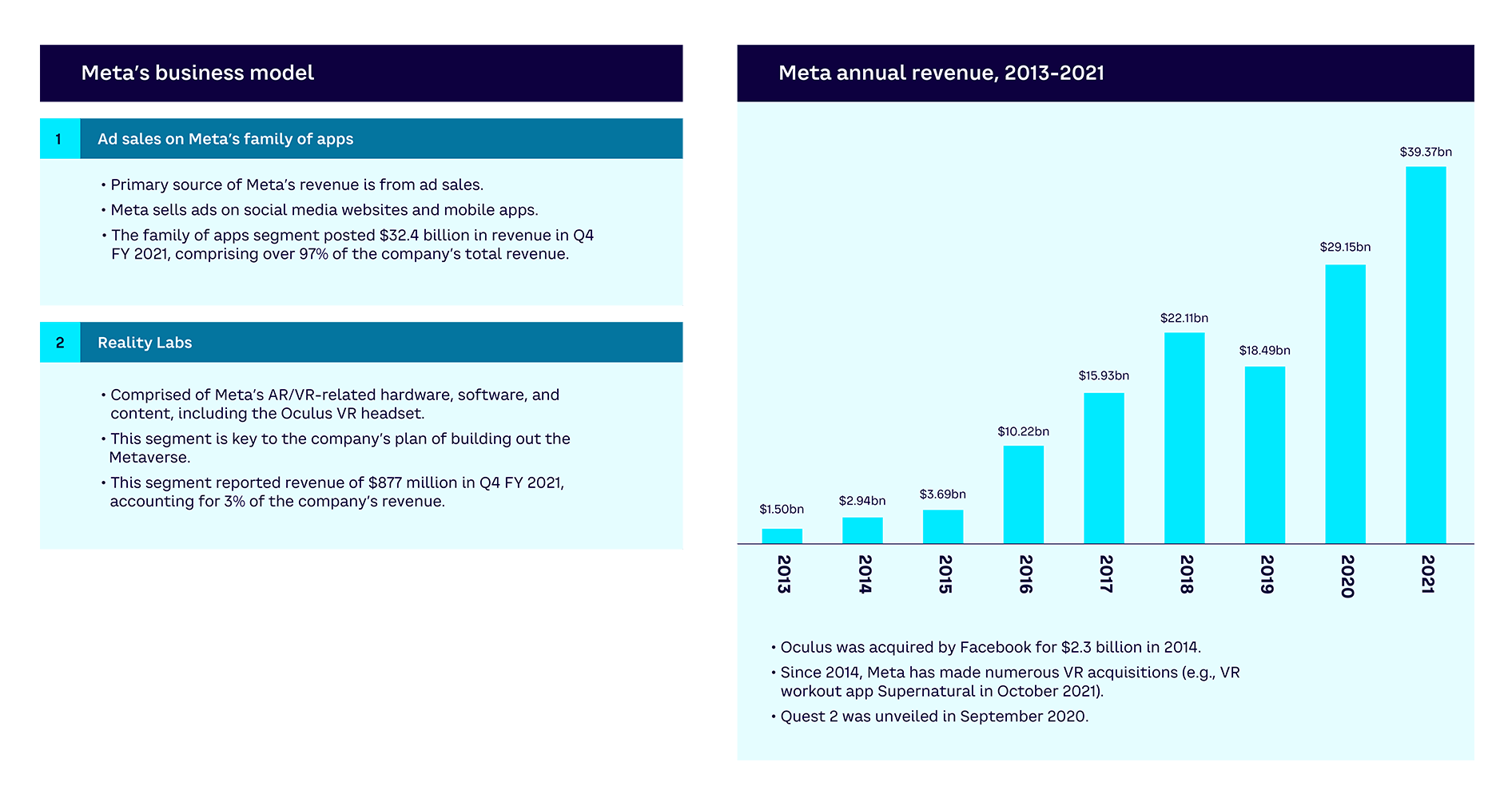

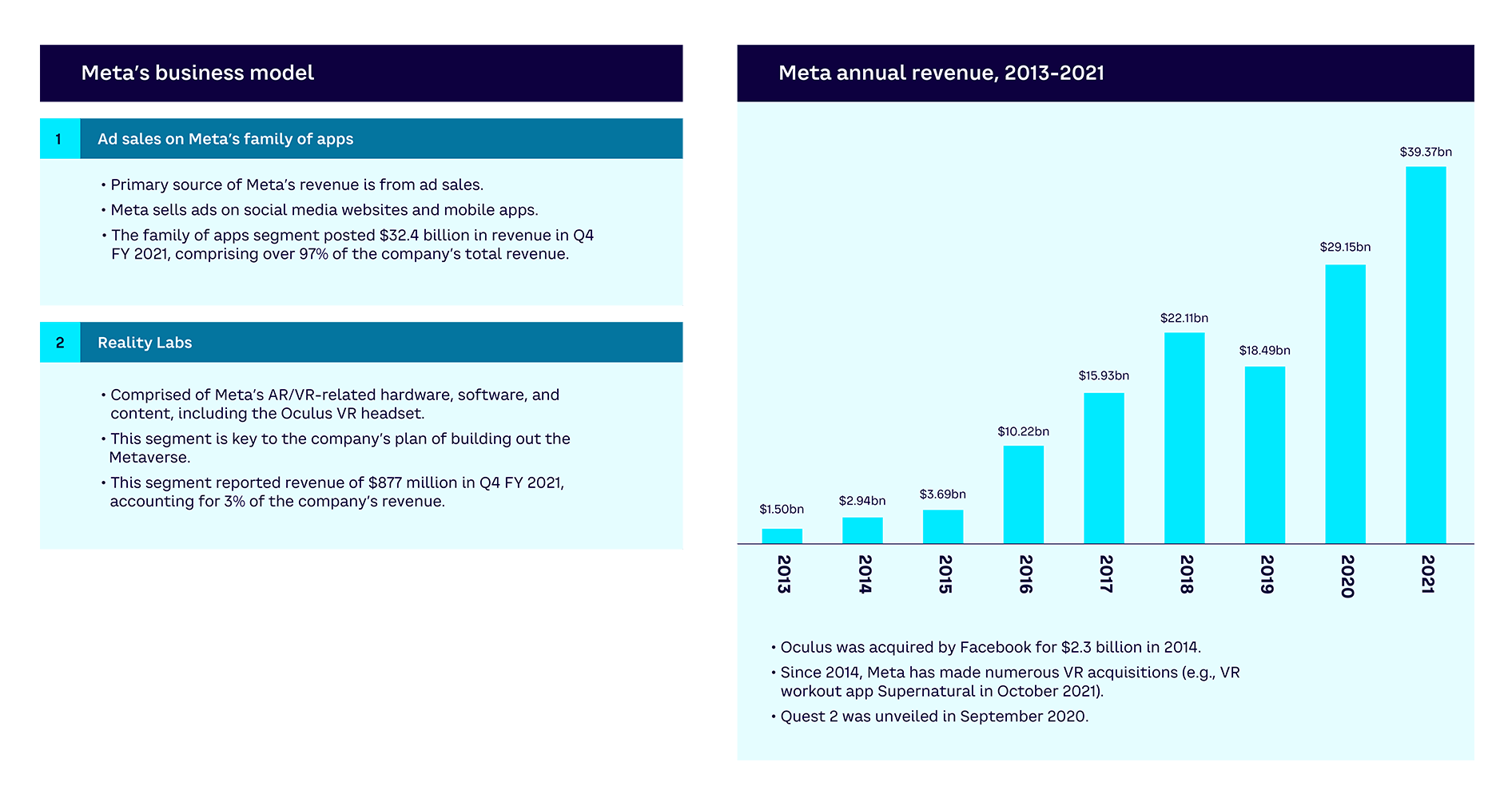

Meta

For many, the term Metaverse first came to prominence when Facebook changed its name to Meta in 2021. The company is investing heavily in the Metaverse, focused on three current initiatives (see Figure 21):

- VR hardware. Since acquiring Oculus in 2014 for $2 billion, Meta has focused on creating best-in-class hardware and complementary software and services to support VR experiences. Currently this allows users to play games, try fitness classes, play sports, and watch concerts in virtual environments. One of the biggest differentiators for Oculus is its large array of nongaming experiences designed for the headset. For instance, users can explore extreme terrain in National Geographic Explore VR, join virtual fitness classes, or simulate being a chef.

- AR lenses. Meta has built AR lenses within the Instagram chat and Messenger platforms. There is clearly interest in Meta’s AR platform, with the company stating that there are currently 600,000 AR creators in 190 countries working with the technology, who have so far produced 2 million AR filters.

- Horizon Workrooms. Meta has launched a VR experience for the Oculus Quest 2 headset that allows users to join collaborative workspaces virtually. Horizon Workrooms creates a virtual office space that can be accessed by up to 16 people, each joining as an avatar. While the experience is Metaverse-like, there are still many technical limitations, including the number of people in the space, the ability to dynamically alter the environment, and the cost and complexity of the hardware required to access it.

Partnerships include:

- Verizon (infrastructure layer) — developing 5G ultrawideband networks with lower latency and higher upload and download speeds to deliver a high-quality Metaverse experience.

- VNTANA (world engine) — allowing brands to upload 3D models of their products to Facebook and Instagram and easily convert them into ads.

- Microsoft (experience continuum) — integrating Meta’s Workplace enterprise social network software with Microsoft Teams, allowing Meta customers to access Workplace content inside the Teams app, and vice versa.

- Xiaomi (human-machine interfaces) — producing a Chinese variant of the Oculus Go VR headset, and establishing the foundations of a VR ecosystem that straddles North American and Chinese markets.

Meta’s revenues are still overwhelmingly based on ad sales on its social media networks. The Reality Labs division, which comprises AR- and VR-related hardware, software, and content, including the Oculus VR headset, provided just 3% of revenues ($877 million) in Q4 FY 2021 and is not currently profitable, losing $2.96 billion in Q1 2022 alone.[18]

Epic Games

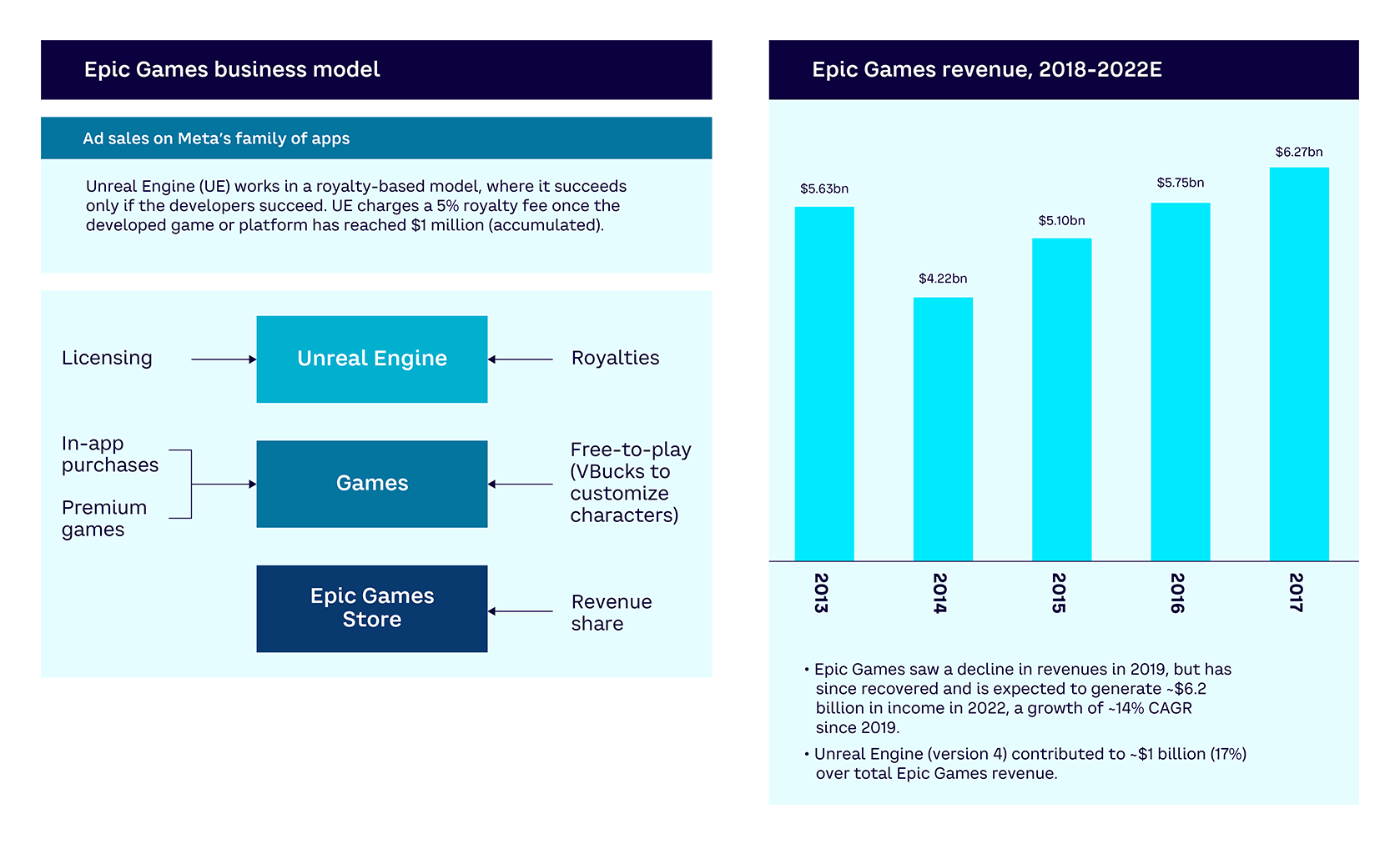

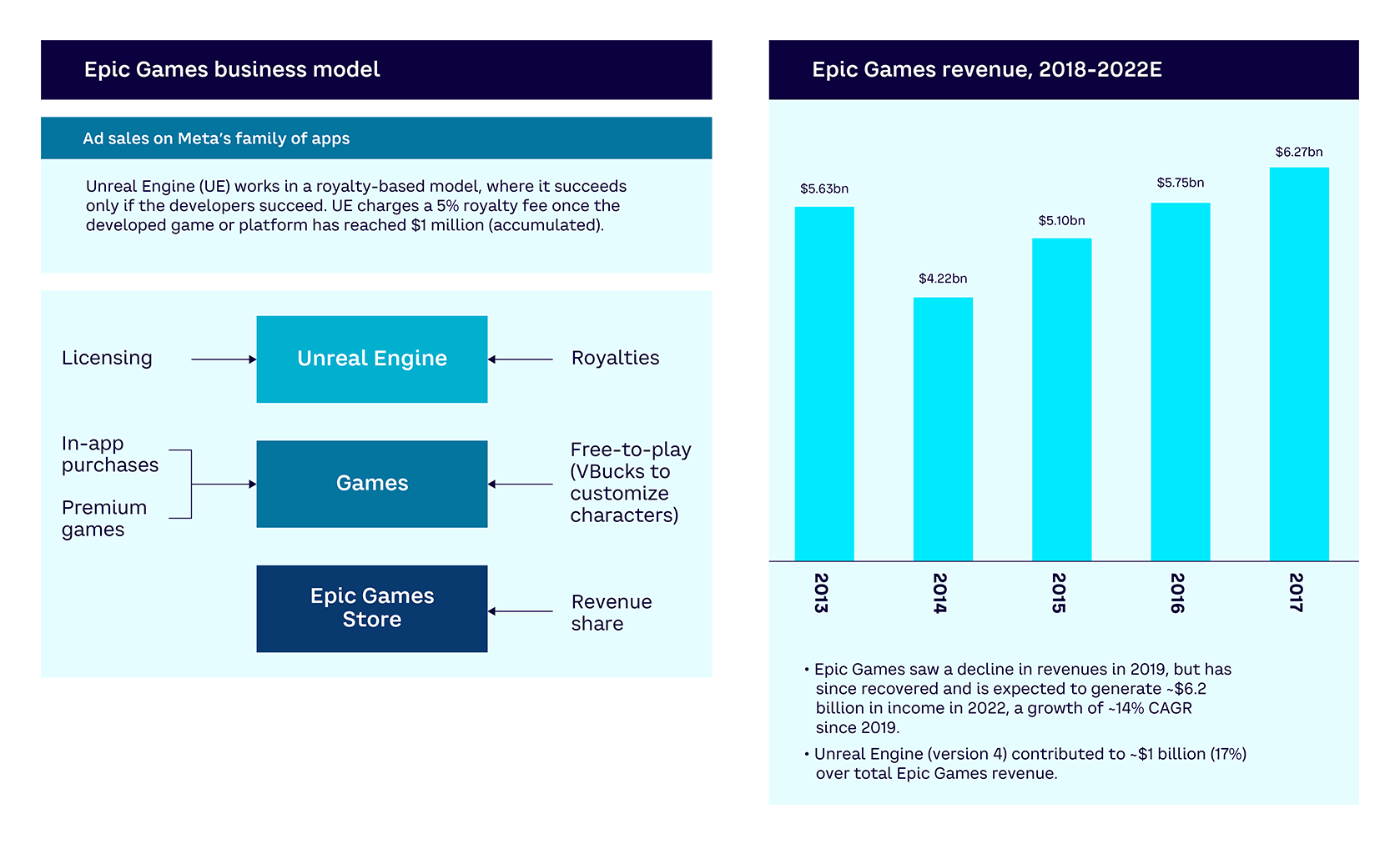

Epic Games is a US-based multinational technology company best known for developing the Fortnite game, which has emerged as a proto-Metaverse used for concerts and brand partnerships alongside gameplay features (see Figure 22).

To diversify revenues beyond its own games, Epic Games now provides its Unreal Engine world engine to third-party developers. Due to its availability and feature set, many industries have adopted the Unreal Engine, including film and television as well as noncreative fields. For example, it has been used as a basis for a virtual reality tool to explore pharmaceutical drug molecules in collaboration with other researchers, as a virtual environment to explore and design new buildings and automobiles, and by cable news networks to support real-time graphics.

Pricing for the Unreal Engine works through a royalty-based model, with Epic Games charging a 5% royalty fee once the developed game or platform has reached accumulated revenues of $1 million.
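As a rough illustration of how such a threshold-based royalty behaves, the sketch below computes the amount due at different revenue levels. The 5% rate and the $1 million threshold come from the text. The assumption that the royalty applies only to revenue above the threshold is ours, and the function name `royalty_due` is hypothetical. Actual license terms should be checked against Epic Games' published agreement.

```python
# Threshold-based royalty sketch.
# Rate and threshold are from the text; charging only the revenue above the
# threshold is an assumption made for this illustration.

ROYALTY_RATE = 0.05            # 5% royalty
REVENUE_THRESHOLD = 1_000_000  # $1 million in accumulated revenue

def royalty_due(accumulated_revenue: float) -> float:
    """Return the total royalty owed for a product's accumulated revenue."""
    chargeable = max(0.0, accumulated_revenue - REVENUE_THRESHOLD)
    return ROYALTY_RATE * chargeable

for revenue in (500_000, 1_000_000, 3_000_000):
    print(f"Revenue ${revenue:,}: royalty ${royalty_due(revenue):,.0f}")
```

A model of this kind keeps the engine free for small projects and ties the licensor's income to the commercial success of the products built on it.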

Partnerships include:

- WPP (experience continuum) — partnership to help WPP agencies deliver new digital experiences for brands in the Metaverse through a comprehensive training program.

- LEGO (experience continuum) — long-term partnership to shape the future of the Metaverse to make it safe and fun for children and families.

- NVIDIA (infrastructure layer) — the NVIDIA Edge Program provides high-end hardware to individuals and teams to create content with Unreal Engine.

- Intel (world engine) — collaboration to bring game developers low-power and mobile-optimized support for Windows and Android + in Unreal Engine.

Roblox